Initial commit

Too many changes to show.

To preserve performance only 1000 of 1000+ files are displayed.

.gitattributes

0 → 100644

.gitignore

0 → 100644

LICENSE.md

0 → 100644

MinerU_CLA.md

0 → 100644

README.md

0 → 100644

README_ori.md

0 → 100644

README_zh-CN.md

0 → 100644

SECURITY.md

0 → 100644

assets/layout.png

0 → 100644

439 KB

assets/result.png

0 → 100644

345 KB

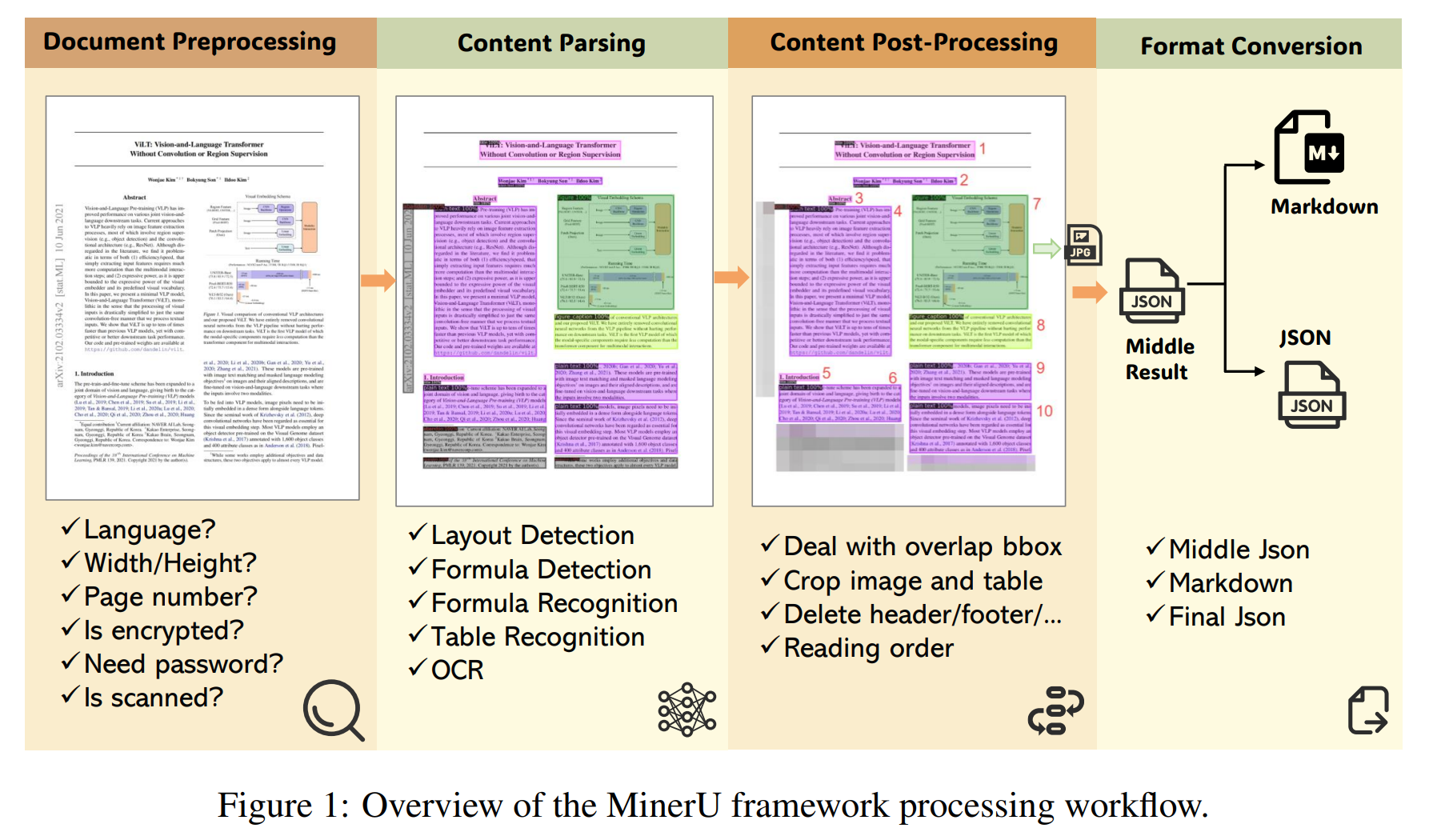

assets/workflow.png

0 → 100644

821 KB

demo/demo.py

0 → 100644

demo/pdfs/demo1.pdf

0 → 100644

File added

demo/pdfs/demo2.pdf

0 → 100644

File added

demo/pdfs/demo3.pdf

0 → 100644

File added

demo/pdfs/small_ocr.pdf

0 → 100644

File added

docker/china/Dockerfile

0 → 100644

docker/compose.yaml

0 → 100644

docker/global/Dockerfile

0 → 100644

docs/FAQ_en_us.md

0 → 100644