First commit


data/select_dataset.py (0 → 100644)

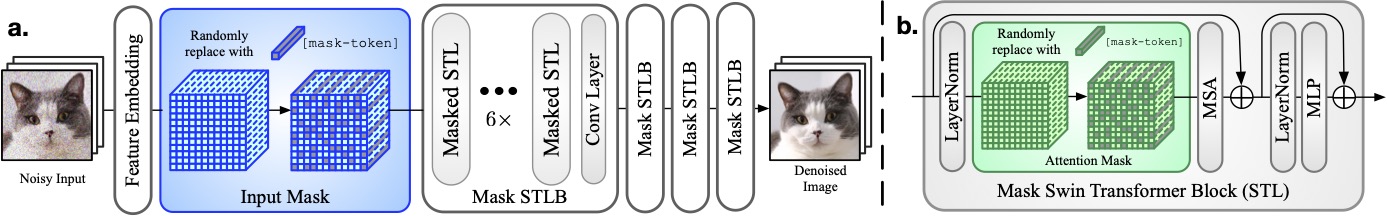

doc/method.jpg (0 → 100644, 108 KB)

doc/origin.png (0 → 100644, 650 KB)

doc/results.png (0 → 100644, 533 KB)

docker/Dockerfile (0 → 100644)

gen_data.py (0 → 100644)

main_test_swinir.py (0 → 100644)

main_test_swinir_x8.py (0 → 100644)

main_train_psnr.py (0 → 100644)

matlab/Cal_PSNRSSIM.m (0 → 100644)

matlab/README.md (0 → 100644)

matlab/center_replace.m (0 → 100644)

matlab/modcrop.m (0 → 100644)

matlab/shave.m (0 → 100644)

matlab/zoom_function.m (0 → 100644)