added llama_inference_pytorch

LICENSE

0 → 100644

README.md

0 → 100644

README_en.md

0 → 100644

README_zh.md

0 → 100644

config/deepspeed_config.json

0 → 100644

config/llama_13b_config.json

0 → 100644

config/llama_30b_config.json

0 → 100644

config/llama_65b_config.json

0 → 100644

config/llama_7b_config.json

0 → 100644

doc/llama-inf.jpg

0 → 100644

71.5 KB

generate.py

0 → 100644

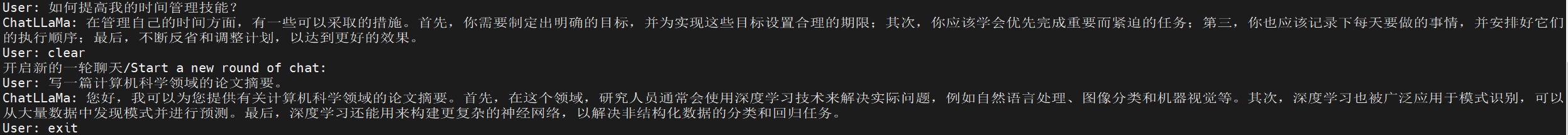

llama_dialogue.py

0 → 100644

llama_gradio.py

0 → 100644

llama_infer.py

0 → 100644

llama_server.py

0 → 100644

model.properties

0 → 100644

model/llama.py

0 → 100644

model/norm.py

0 → 100644

model/rope.py

0 → 100644