
Delete infer_vllm.py and update README


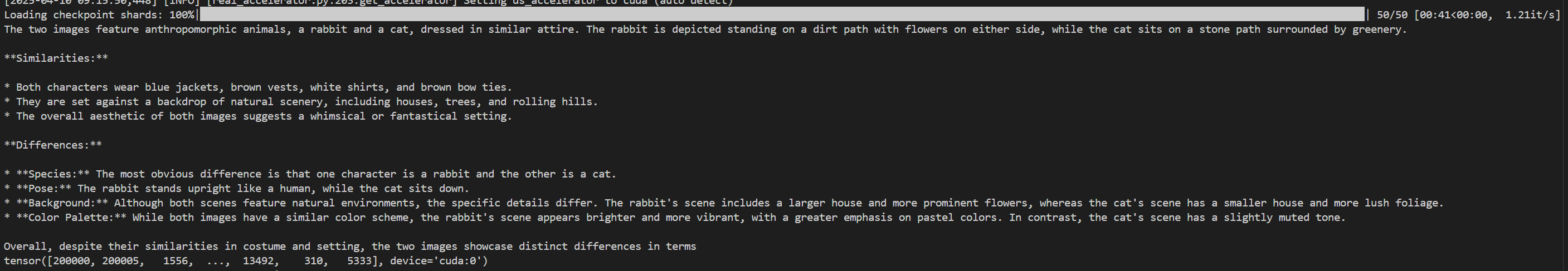

doc/transformers_results.jpg

0 → 100644

69.8 KB

infer_vllm.py

Deleted (mode 100644 → 0)

requirements.txt

Deleted (mode 100644 → 0)

    # This file was autogenerated by uv via the following command:
    # uv export --frozen --no-hashes --no-emit-project --output-file=requirements.txt
    annotated-types==0.7.0
    certifi==2025.1.31
    charset-normalizer==3.4.1
    idna==3.10
    jinja2==3.1.6
    markupsafe==3.0.2
    pillow==11.1.0
    pydantic==2.10.6
    pydantic-core==2.27.2
    pyyaml==6.0.2
    regex==2024.11.6
    requests==2.32.3
    tiktoken==0.8.0
    typing-extensions==4.12.2
    urllib3==2.3.0
    \ No newline at end of file
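Since this commit removes `requirements.txt`, its exact pins now survive only in the diff. The following is a minimal Python sketch for recovering them into a `{package: version}` mapping. The helpers `parse_pins` and `normalize` are hypothetical illustrations, not part of this repository. The sketch assumes every non-comment line is a plain `name==version` pin, as in this `uv export --no-hashes` output, and it rejects extras, environment markers, and hashes instead of parsing them.

```python
import re

# Hypothetical helper: matches only exact "name==version" pins like those
# emitted by `uv export --no-hashes`. Extras, markers and hashes are
# deliberately unsupported and make parse_pins raise ValueError.
PIN_RE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)==([^\s;#]+)$")


def normalize(name: str) -> str:
    """Normalize a distribution name (PEP 503: lowercase, collapse runs of -_.)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_pins(text: str) -> dict[str, str]:
    """Return {normalized_name: version} for every pin in a requirements file."""
    pins: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments, such as the uv header.
        if not line or line.startswith("#"):
            continue
        match = PIN_RE.match(line)
        if match is None:
            raise ValueError(f"unsupported requirement line: {line!r}")
        name, version = match.groups()
        pins[normalize(name)] = version
    return pins


# Contents of the deleted requirements.txt, copied from the diff. The
# "No newline at end of file" marker is diff metadata, not file content.
DELETED_REQUIREMENTS = """\
# This file was autogenerated by uv via the following command:
# uv export --frozen --no-hashes --no-emit-project --output-file=requirements.txt
annotated-types==0.7.0
certifi==2025.1.31
charset-normalizer==3.4.1
idna==3.10
jinja2==3.1.6
markupsafe==3.0.2
pillow==11.1.0
pydantic==2.10.6
pydantic-core==2.27.2
pyyaml==6.0.2
regex==2024.11.6
requests==2.32.3
tiktoken==0.8.0
typing-extensions==4.12.2
urllib3==2.3.0"""

if __name__ == "__main__":
    # Print one "name version" pair per pinned package.
    for name, version in parse_pins(DELETED_REQUIREMENTS).items():
        print(f"{name} {version}")
```

A strict regex keeps the sketch small. If a pin used extras or environment markers, it would fail loudly rather than be misread, and a full requirements parser would be needed.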