instantid

gradio_demo/model_util.py

0 → 100644

gradio_demo/requirements.txt

0 → 100644

infer.py

0 → 100644

infer_full.py

0 → 100644

ip_adapter/resampler.py

0 → 100644

ip_adapter/utils.py

0 → 100644

model.properties

0 → 100644

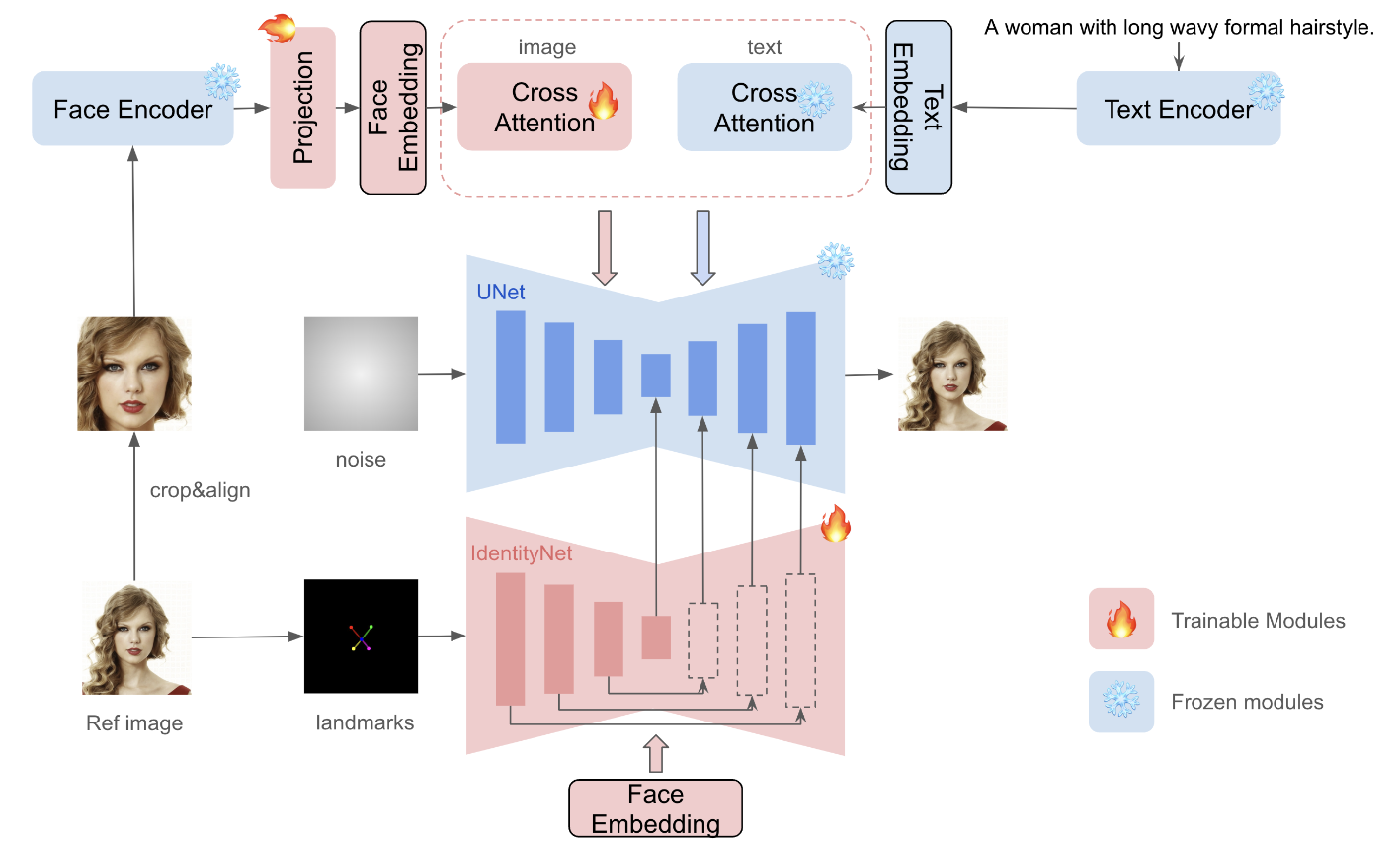

readme_imgs/image-1.png

0 → 100644

238 KB

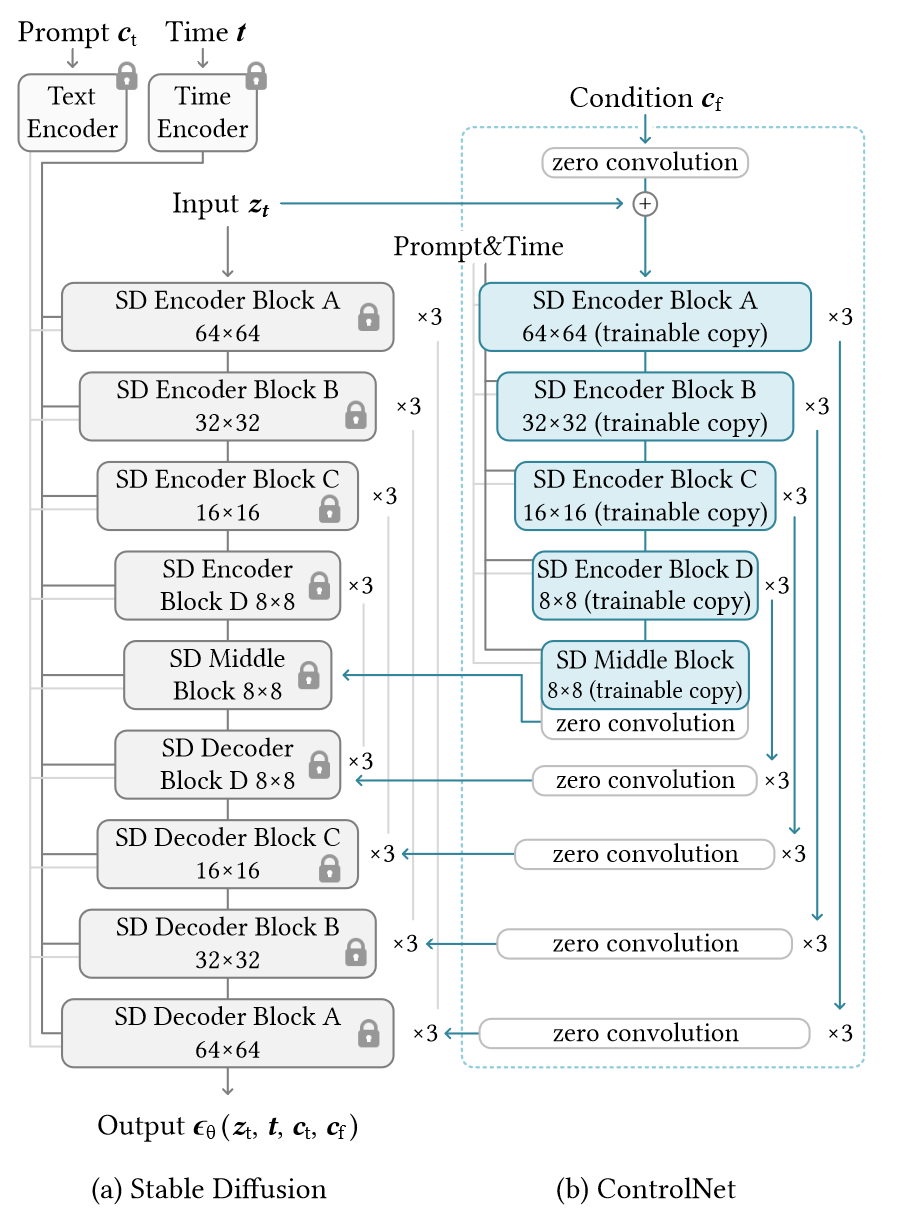

readme_imgs/image-2.png

0 → 100644

162 KB

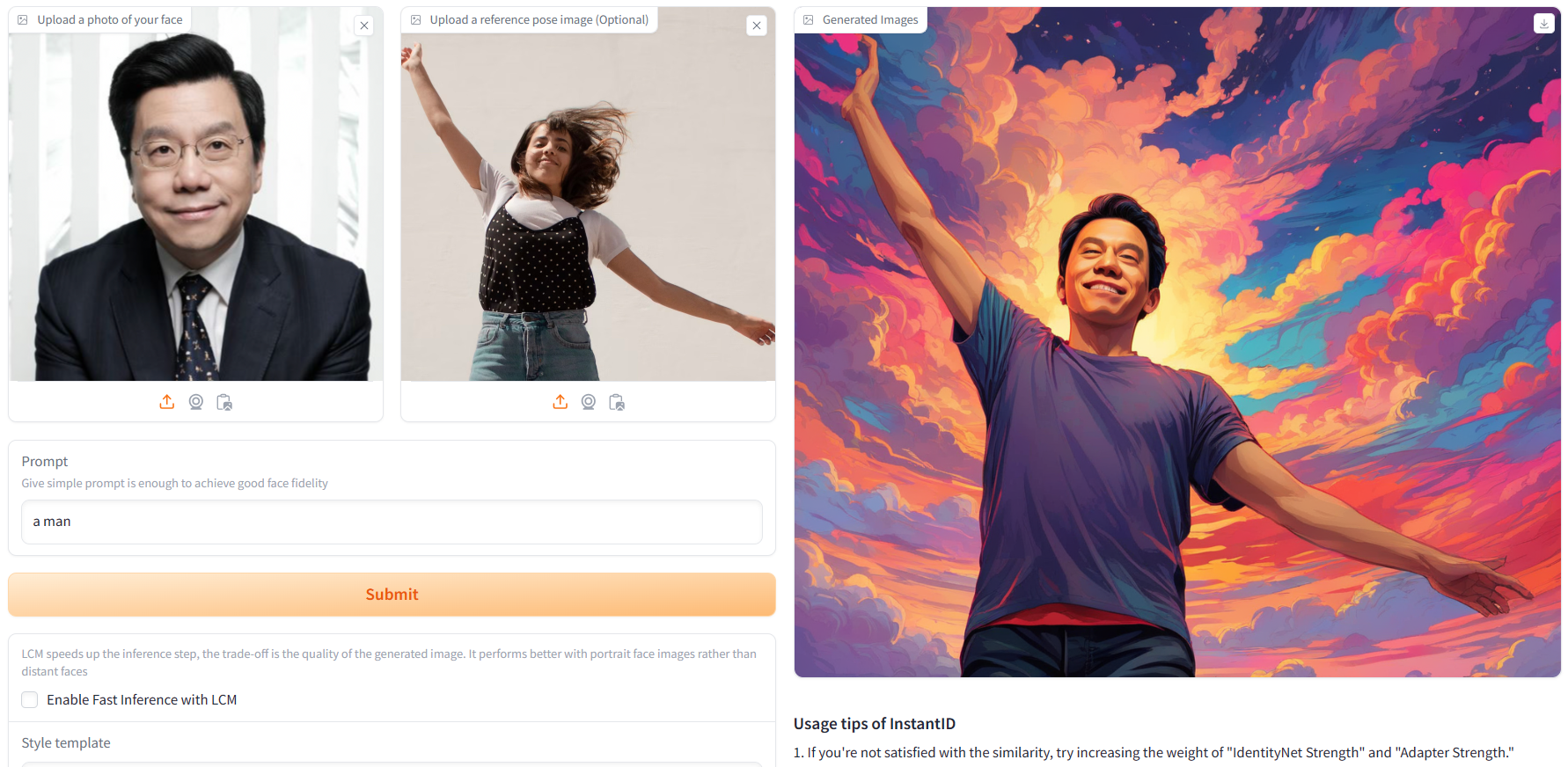

readme_imgs/image-3.png

0 → 100644

1.39 MB

readme_imgs/image-4.png

0 → 100644

1.73 MB

requirements.txt

0 → 100644

torch==1.13.1

torchvision==0.14.1

diffusers==0.25.1

opencv-python

transformers

accelerate

insightface

omegaconf

gradio

peft

controlnet_aux

\ No newline at end of file