First commit

datasets/__init__.py

0 → 100644

datasets/coco.py

0 → 100644

datasets/coco_eval.py

0 → 100644

datasets/coco_panoptic.py

0 → 100644

datasets/data_prefetcher.py

0 → 100644

datasets/panoptic_eval.py

0 → 100644

datasets/samplers.py

0 → 100644

datasets/transforms.py

0 → 100644

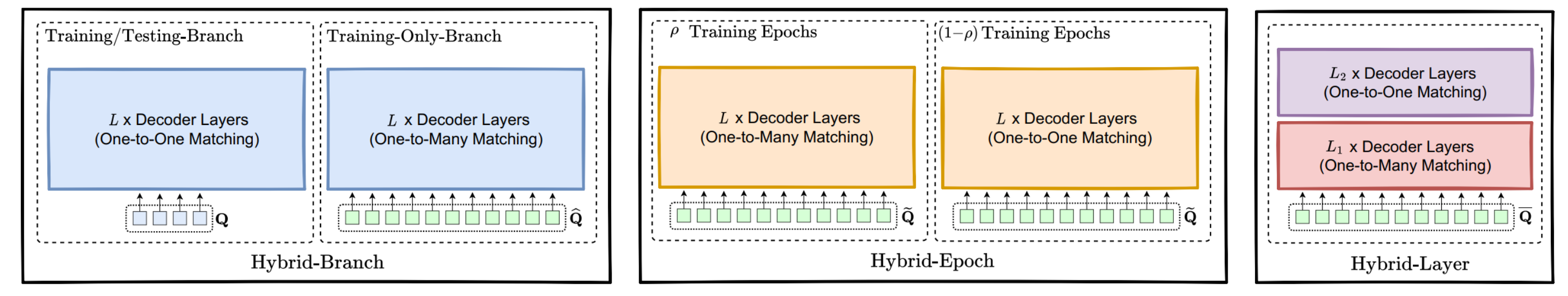

doc/hybrid.png

0 → 100644

413 KB

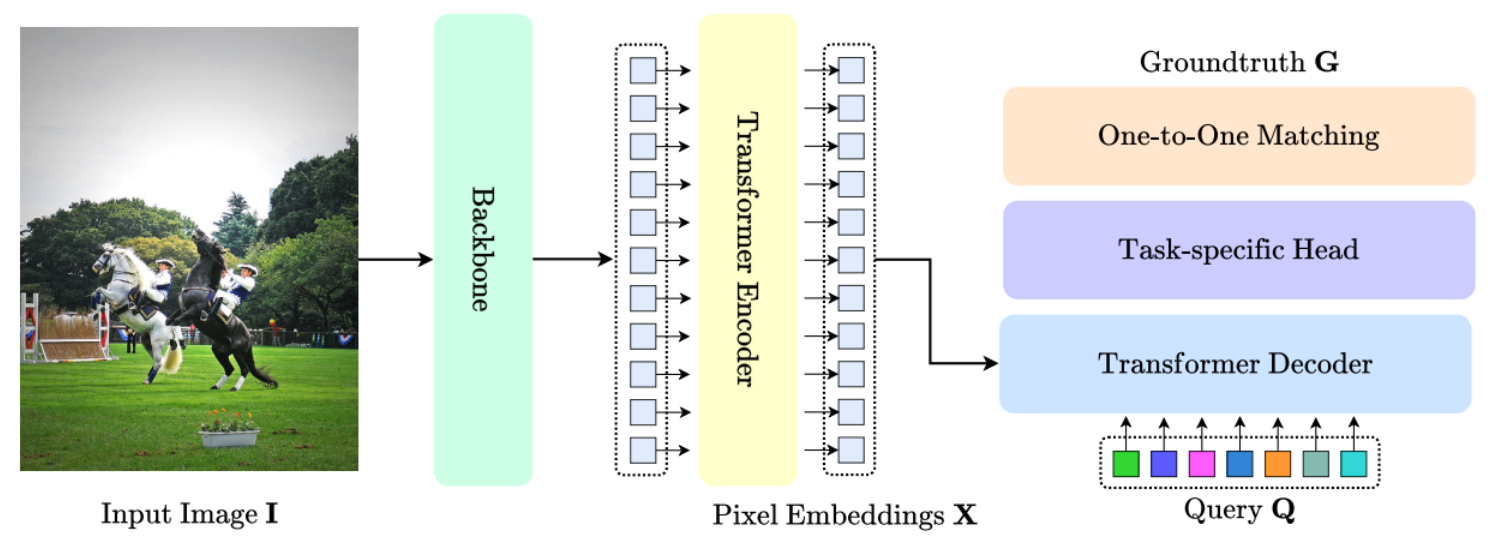

doc/methods.png

0 → 100644

390 KB

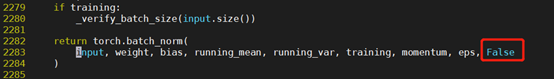

doc/native_bn.png

0 → 100644

26.6 KB

doc/results.jpg

0 → 100644

107 KB

docker/Dockerfile

0 → 100644

engine.py

0 → 100644