Merge branch 'develop' into 'master'

Develop

See merge request !2

File deleted

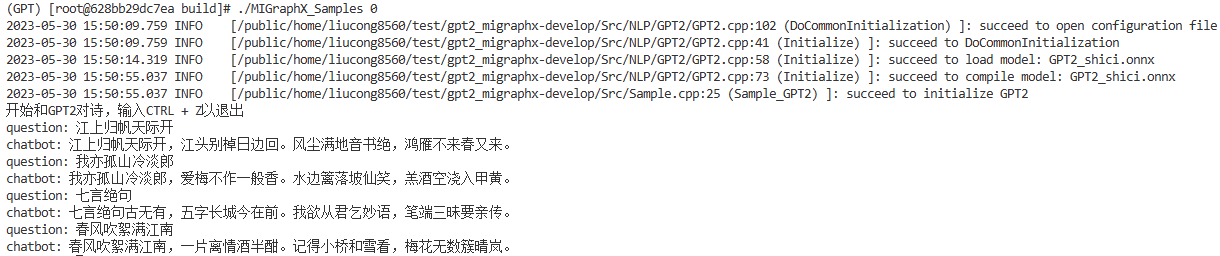

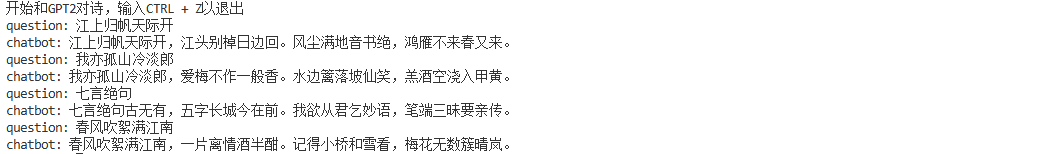

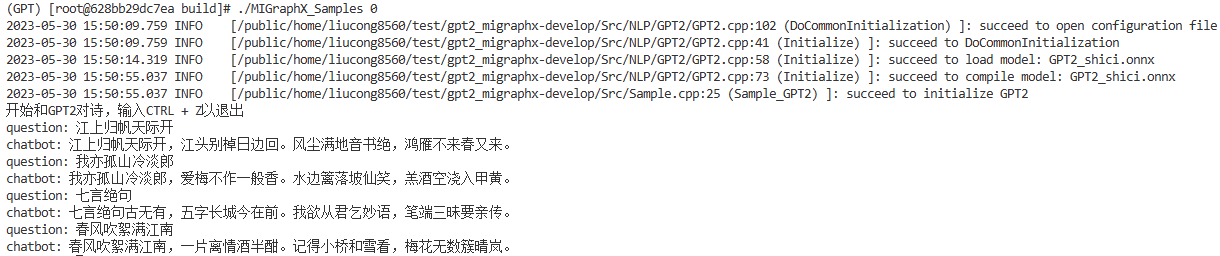

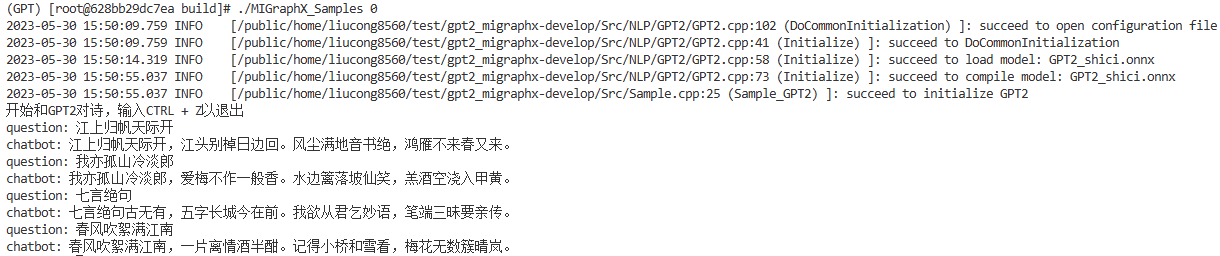

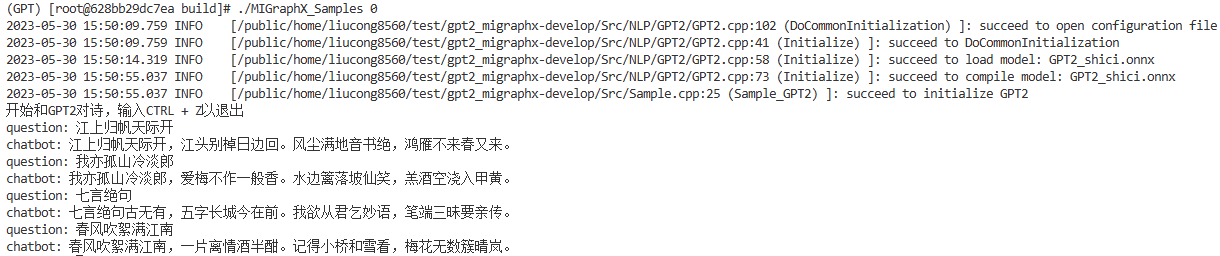

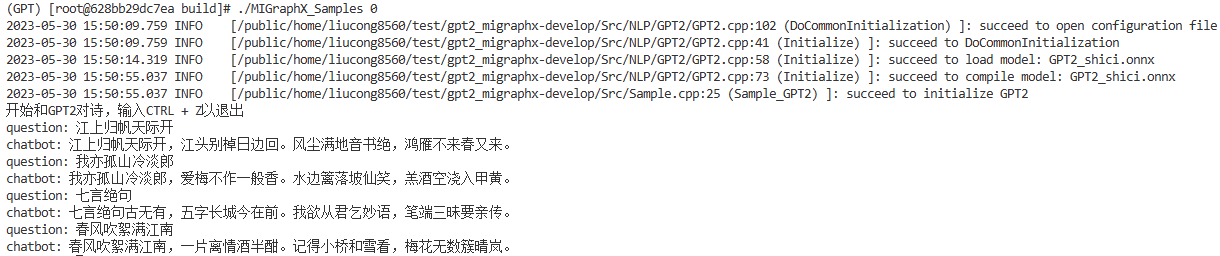

Src/Sample.cpp: deleted (mode 100644 → 0)

Src/Sample.h: deleted (mode 100644 → 0)

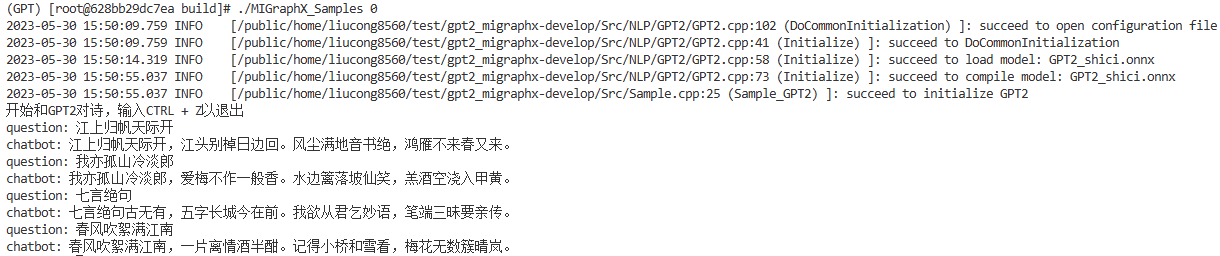

35.3 KB

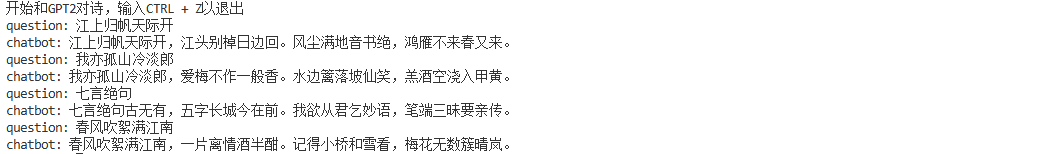

14.1 KB