gme

.gitignore

0 → 100644

Dockerfile

0 → 100644

README.md

0 → 100644

examples/image1.png

0 → 100644

1.74 MB

examples/image2.png

0 → 100644

1.97 MB

gme_inference.py

0 → 100644

inference.ipynb

0 → 100644

model.properties

0 → 100644

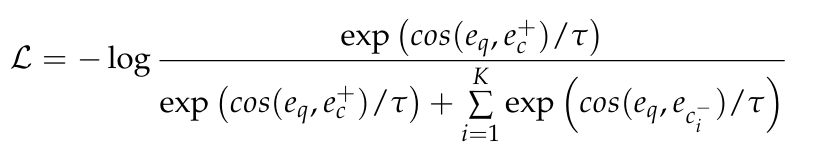

readme_imgs/alg.png

0 → 100644

23.9 KB

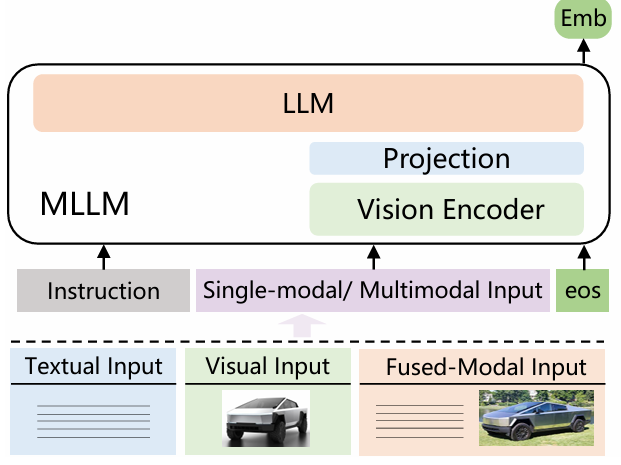

readme_imgs/arch.png

0 → 100644

61.9 KB

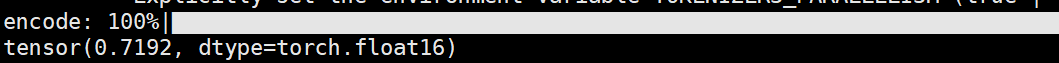

readme_imgs/result.png

0 → 100644

6.22 KB

requirements.txt

0 → 100644

transformers==4.46.3
\ No newline at end of file