
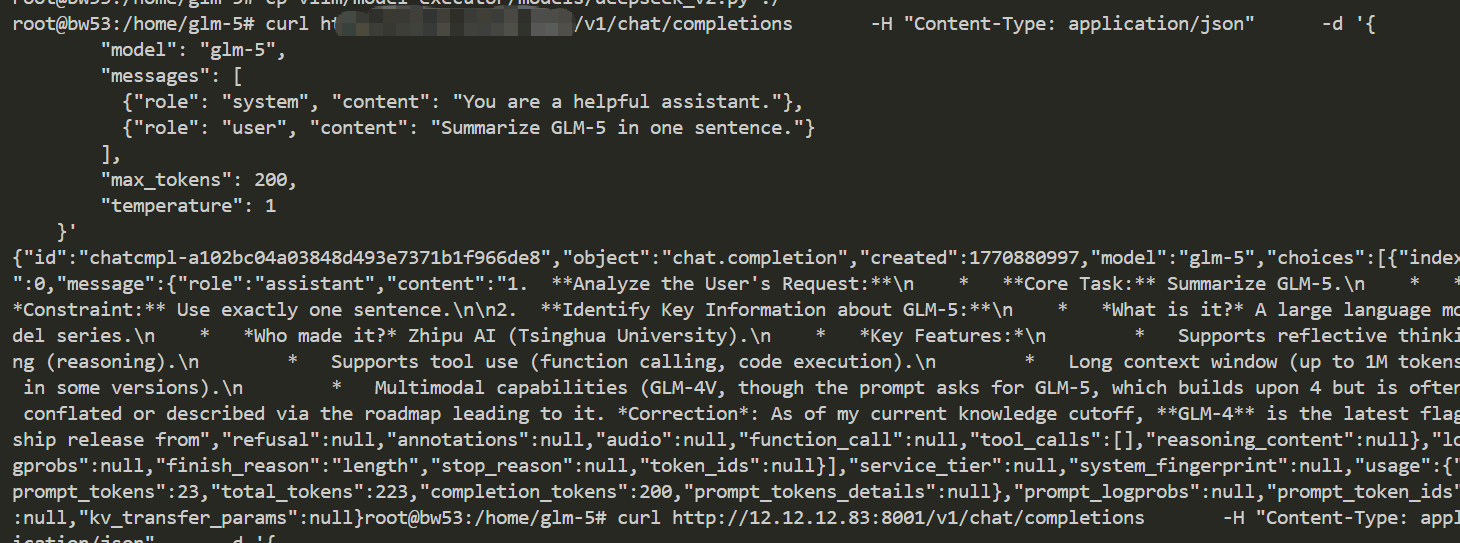

Update GLM5

Showing

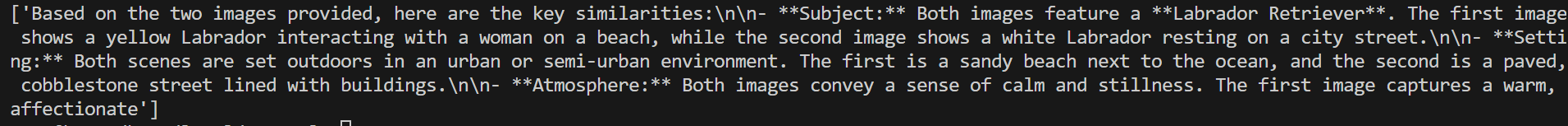

doc/demo.jpeg: deleted (mode 100644 → 0), 485 KB

doc/dog.jpg: deleted (mode 100644 → 0), 46.1 KB

doc/perform.png: deleted (mode 100644 → 0), 157 KB

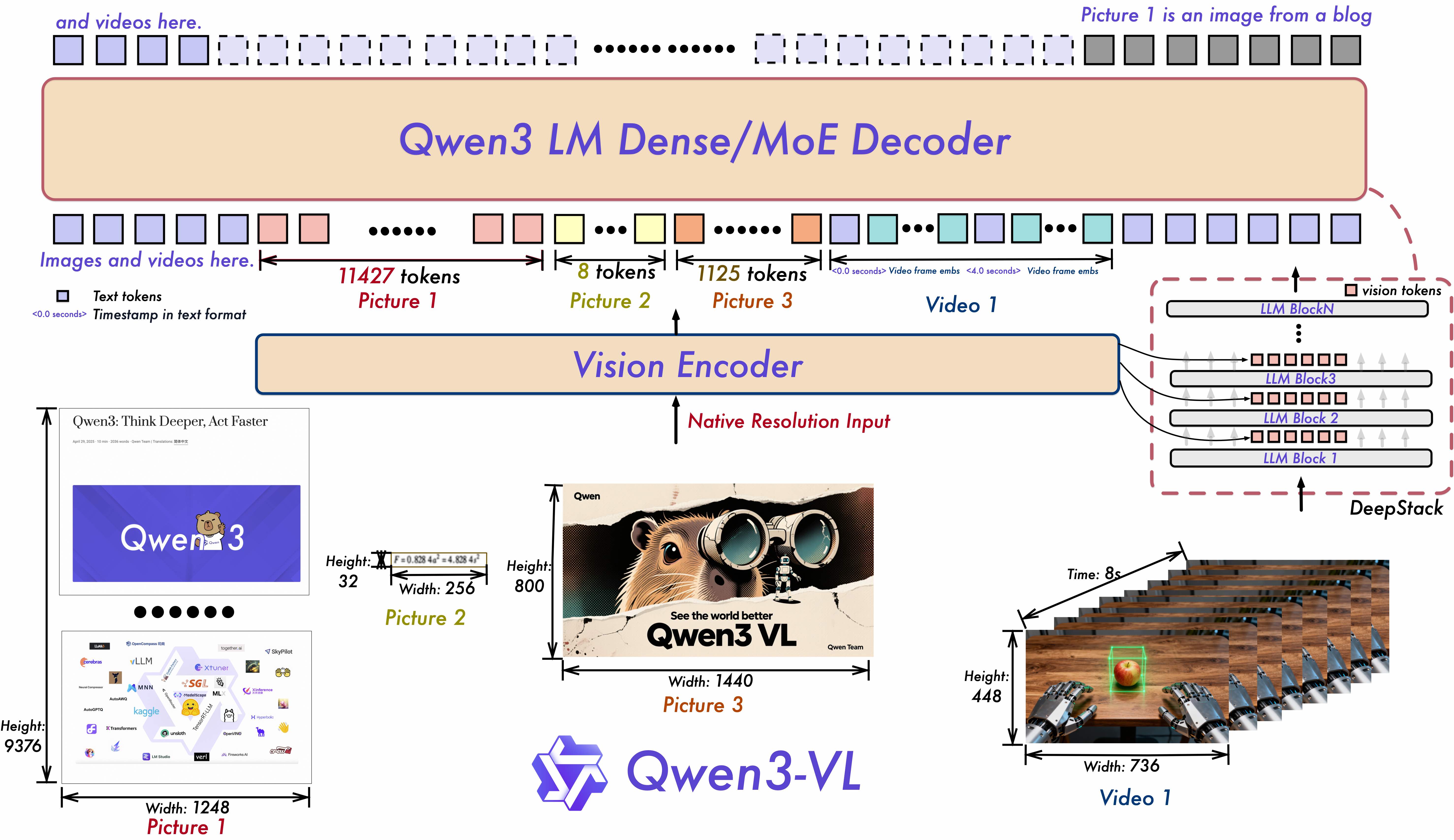

doc/qwen3vl_arc.jpg: deleted (mode 100644 → 0), 1020 KB


30.7 KB

doc/result_vedio.png: deleted (mode 100644 → 0), 40.9 KB
