"git@developer.sourcefind.cn:orangecat/ollama.git" did not exist on "ed5fb088c4d7f57ea3ccf629e36ecb2e857e22eb"

Add information to README


CONTRIBUTING.md: deleted (mode 100644 → 0)

Contributors.md: added (mode 0 → 100644)

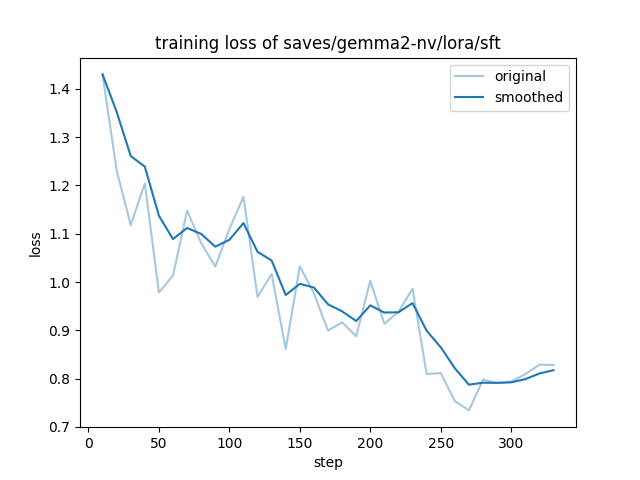

docs/training_loss_nv.png: added (mode 0 → 100644), 40 KB