v1.0

Showing

.gitattributes

0 → 100644

.gitignore

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_ZH.md

0 → 100644

README_origin.md

0 → 100644

app.py

0 → 100644

This diff is collapsed.

doc/LDM.png

0 → 100644

272 KB

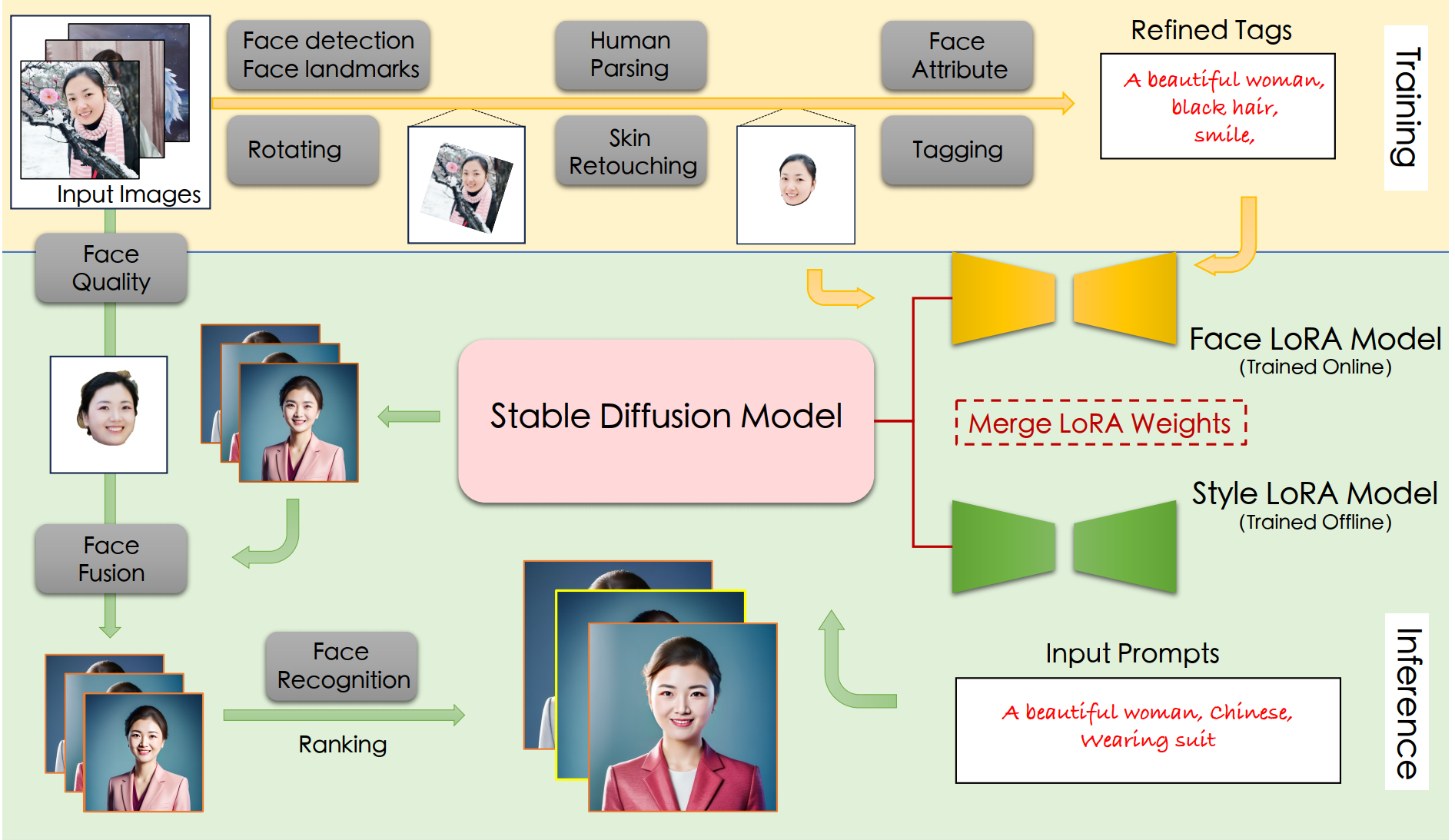

doc/facechain.png

0 → 100644

521 KB

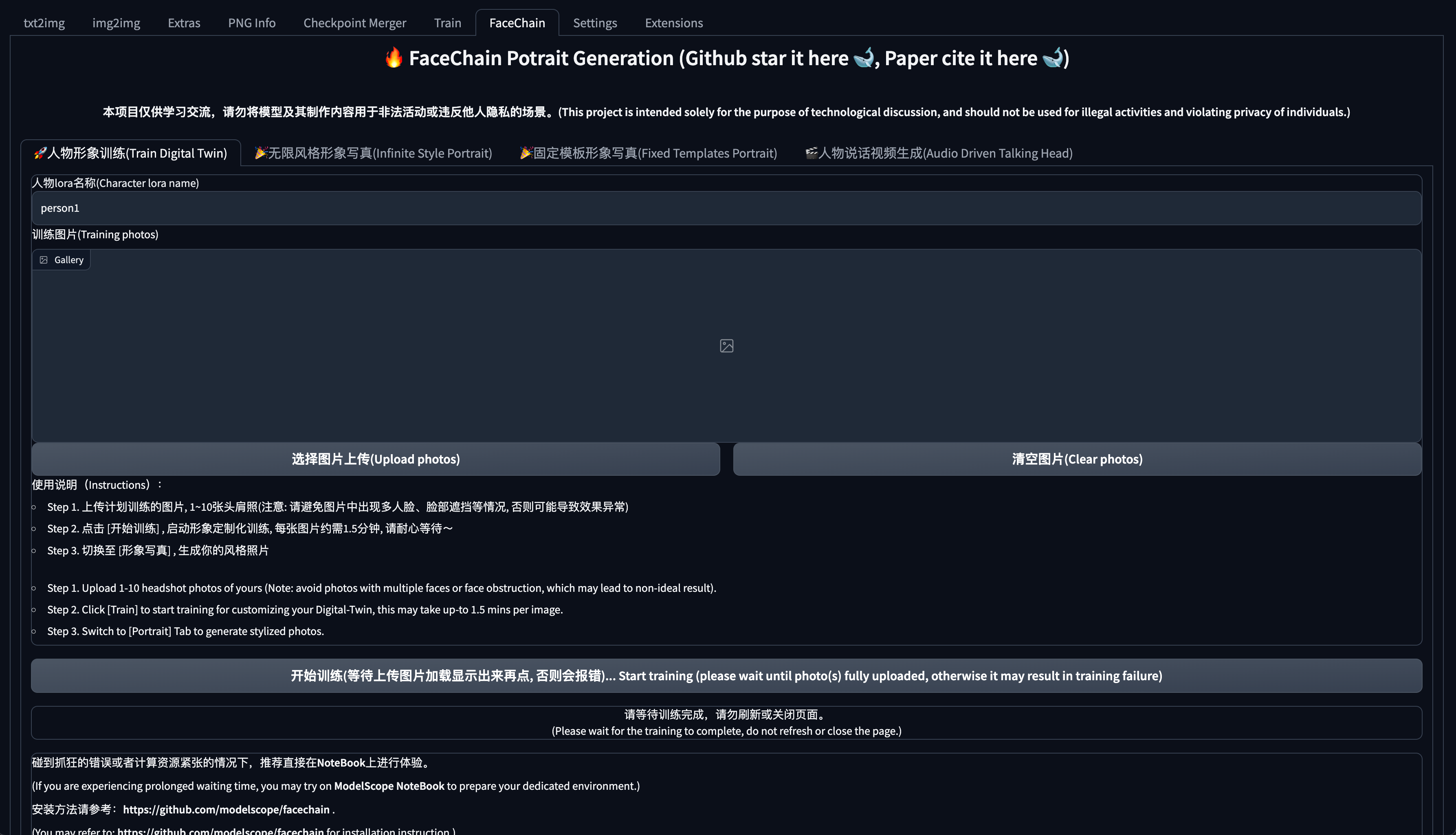

doc/sdwebui_success.png

0 → 100644

737 KB

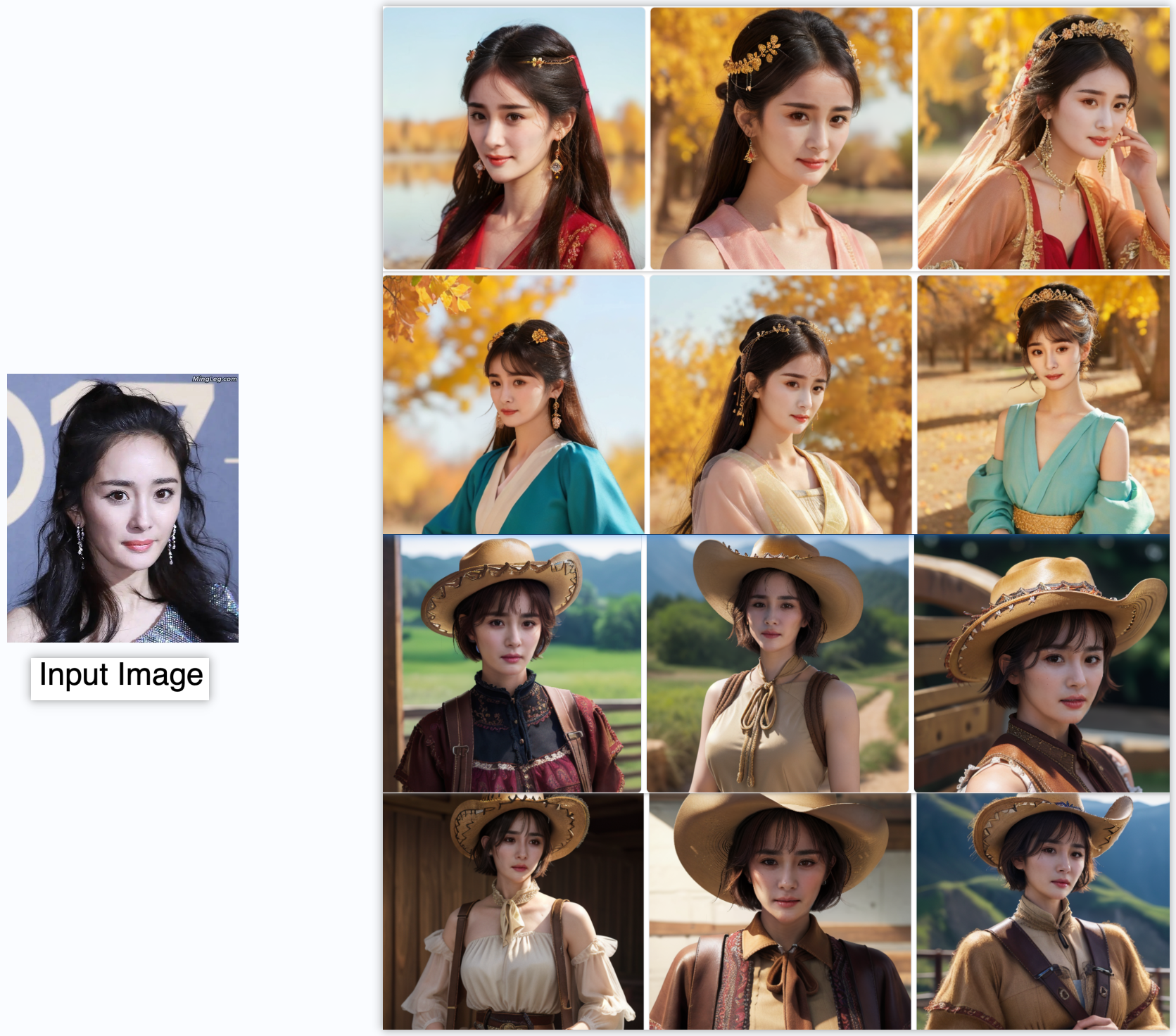

doc/test.png

0 → 100644

2.93 MB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

dwpose/__init__.py

0 → 100644

dwpose/onnxdet.py

0 → 100644

dwpose/onnxpose.py

0 → 100644

dwpose/util.py

0 → 100644

dwpose/wholebody.py

0 → 100644