Initial commit

export_to_onnx.py

0 → 100644

export_to_torchscript.py

0 → 100644

figs/.DS_Store

0 → 100644

File added

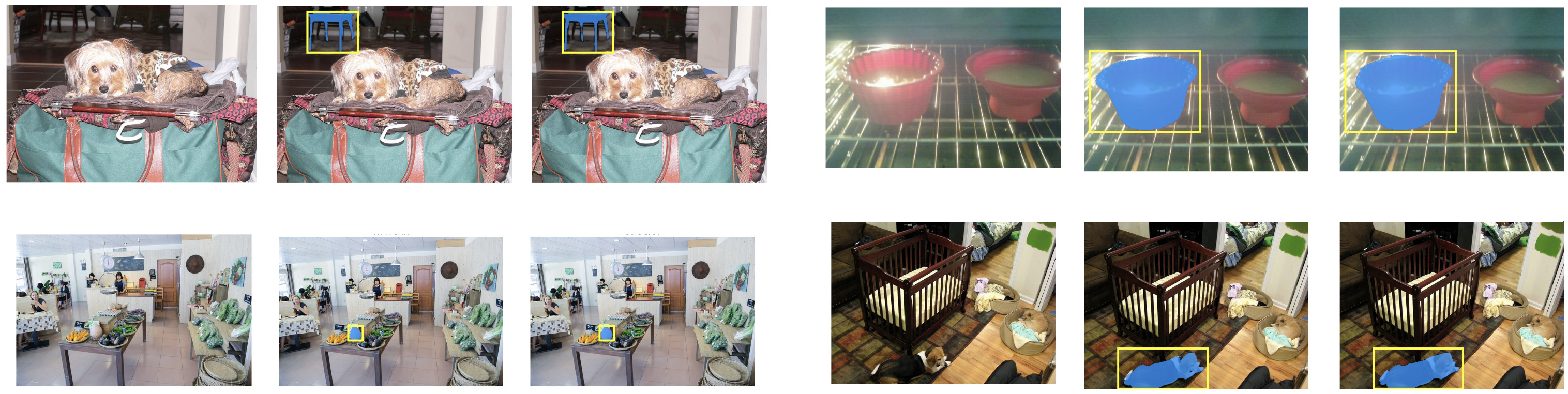

figs/examples/demo_box.png

0 → 100644

3.41 MB

2.93 MB

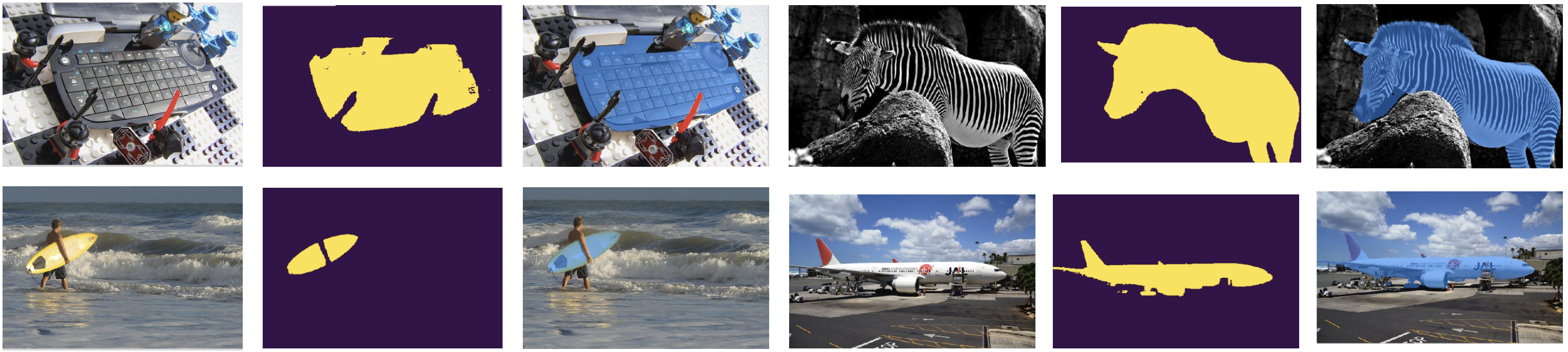

figs/examples/demo_point.png

0 → 100644

4.81 MB

2.43 MB

figs/examples/dogs.jpg

0 → 100644

438 KB

175 KB

178 KB

175 KB

178 KB

177 KB

inference_box_prompt.py

0 → 100644

inference_point_prompt.py

0 → 100644

linter.sh

0 → 100644

model.properties

0 → 100644