add unaligned face inference

inference_face.py

0 → 100644

Additional files listed by size only: 46.4 KB, 668 KB, 26.4 KB, 91 KB, 24 KB, 44.1 KB, 10.2 KB

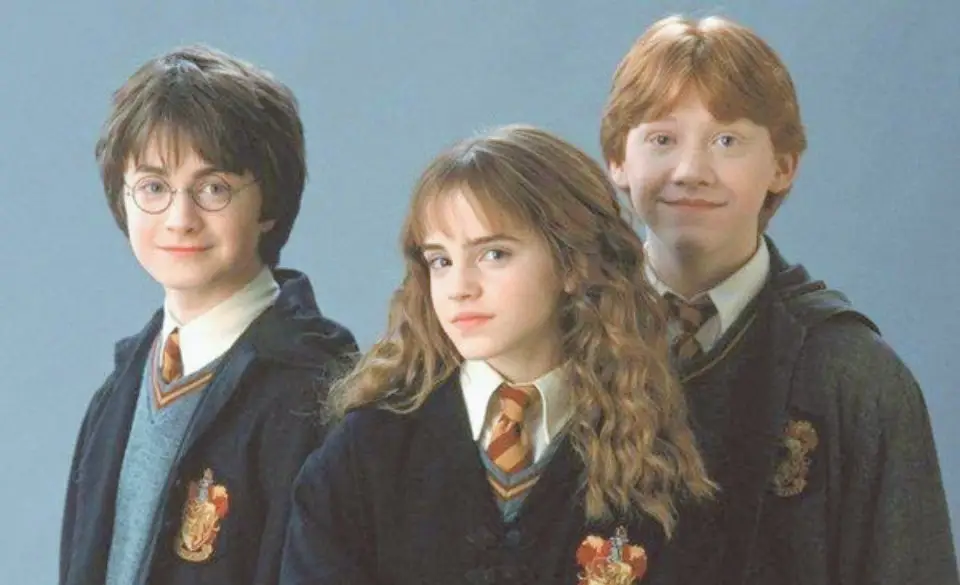

inputs/general/0014.jpg

0 → 100644

9.38 KB

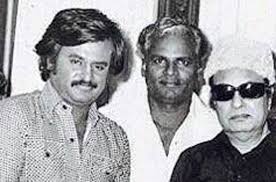

inputs/general/14.jpg

0 → 100755

26.9 KB

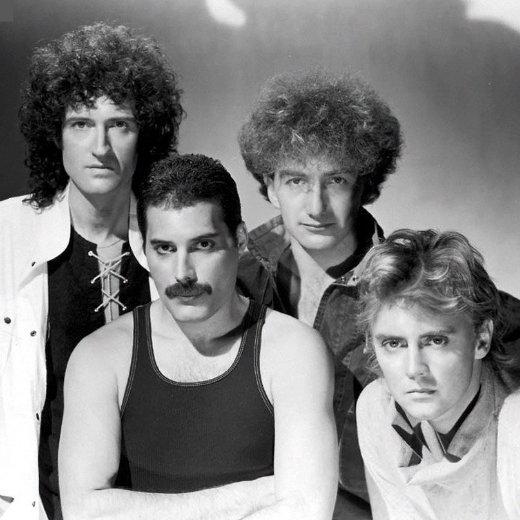

inputs/general/48.jpg

0 → 100755

2.77 KB

inputs/general/chip.png

0 → 100755

25.2 KB

requirements.txt

0 → 100644

    pytorch_lightning==1.4.2
    einops
    open-clip-torch
    omegaconf
    torchmetrics==0.6.0
    triton
    opencv-python-headless
    scipy
    matplotlib
    lpips
    gradio
    chardet
    transformers
    facexlib
    \ No newline at end of file
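Only two of the requirements above are pinned (`pytorch_lightning==1.4.2` and `torchmetrics==0.6.0`), so an environment can drift from what the commit was tested with. The following is a minimal sketch, not part of this commit, of how one might check an environment against the list. The helper names `parse_requirement` and `check_requirements` are hypothetical. It assumes the standard-library `importlib.metadata` can resolve each distribution name as written, which may depend on the Python version for names containing underscores.

```python
from importlib import metadata

# Requirement lines copied from the requirements.txt added in this commit.
REQUIREMENTS = """
pytorch_lightning==1.4.2
einops
open-clip-torch
omegaconf
torchmetrics==0.6.0
triton
opencv-python-headless
scipy
matplotlib
lpips
gradio
chardet
transformers
facexlib
""".split()


def parse_requirement(line):
    """Split 'name==version' into (name, version); version is None if unpinned.

    Hypothetical helper: handles only the bare-name and '==' forms used above.
    """
    name, sep, version = line.strip().partition("==")
    return name, (version if sep else None)


def check_requirements(lines):
    """Return {name: status}, where status is 'ok', 'missing', or 'mismatch (found X)'."""
    report = {}
    for line in lines:
        name, pinned = parse_requirement(line)
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        if pinned is not None and installed != pinned:
            report[name] = f"mismatch (found {installed})"
        else:
            report[name] = "ok"
    return report
```

Calling `check_requirements(REQUIREMENTS)` returns one status per package; printing the entries marked `missing` or `mismatch` before running `inference_face.py` is one way to catch a stale environment early.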