First commit

LICENSE

0 → 100644

README.md

0 → 100644

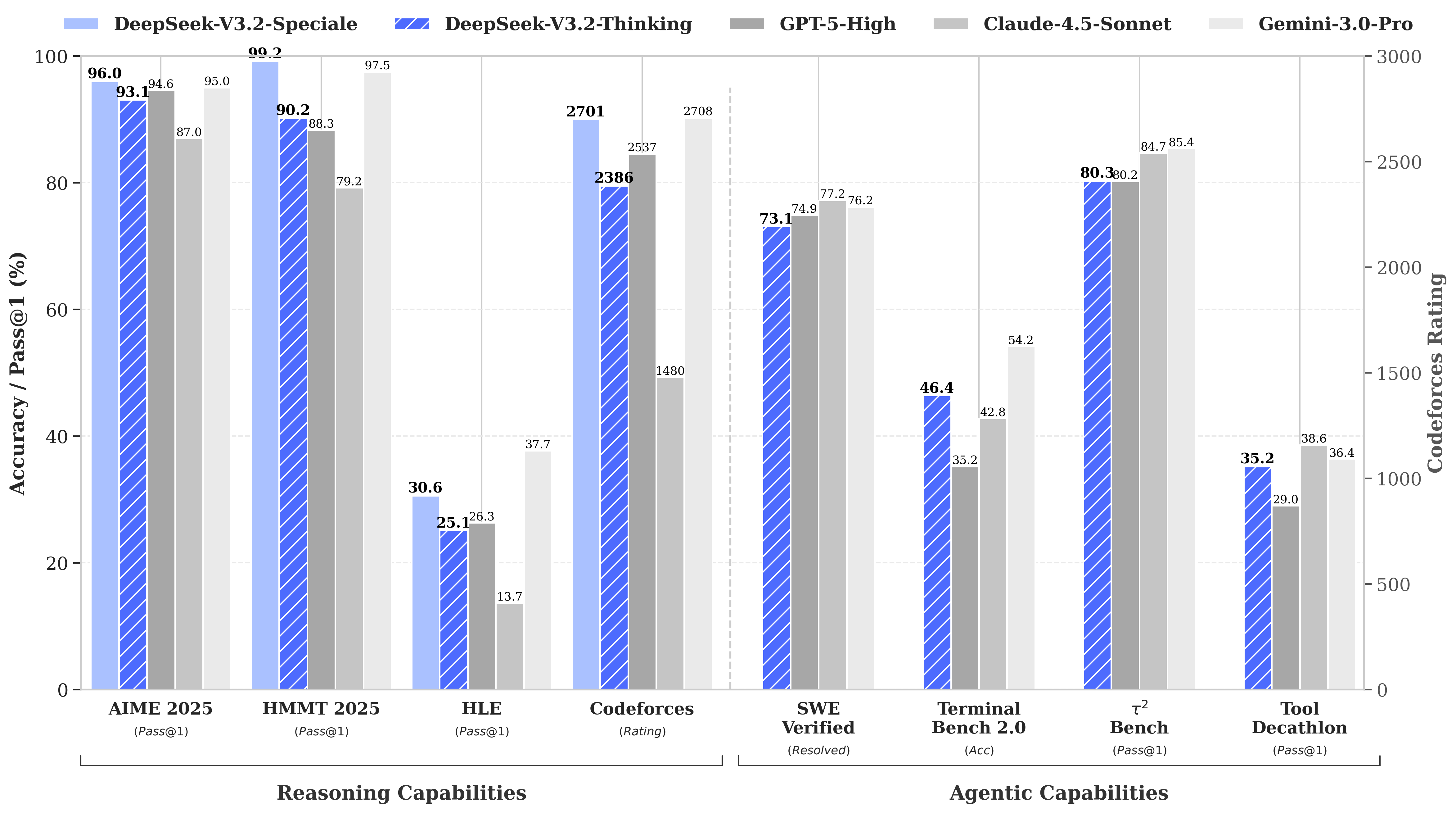

doc/benchmark.png

0 → 100644

456 KB

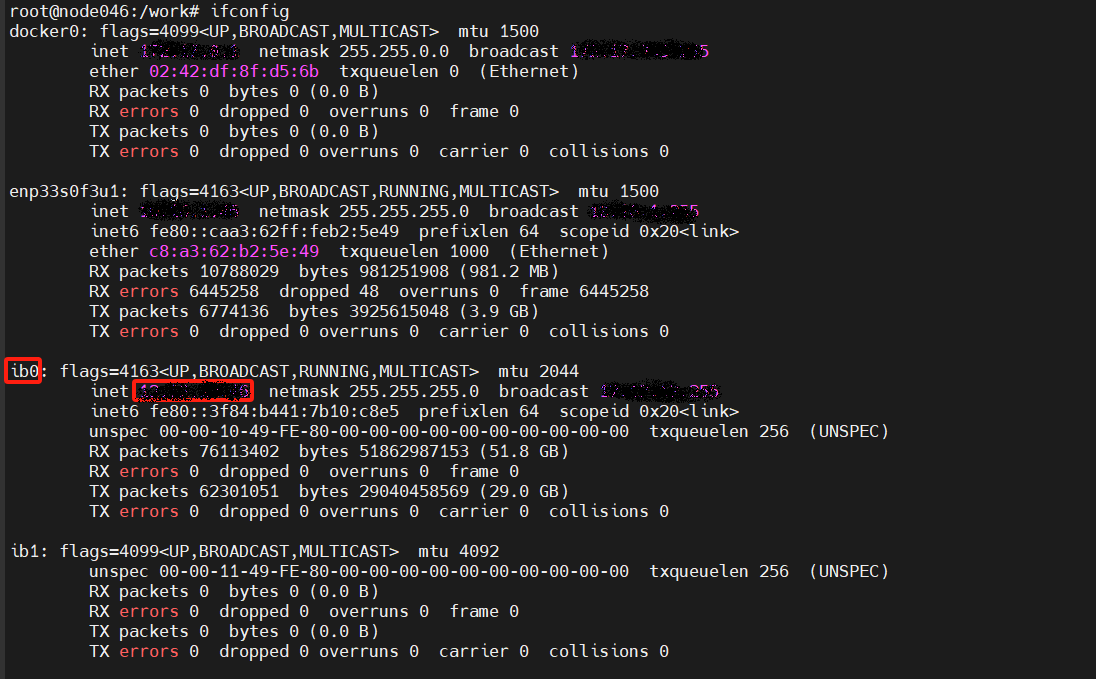

doc/ip_bw.png

0 → 100644

97.1 KB

doc/paper.pdf

0 → 100644

File added

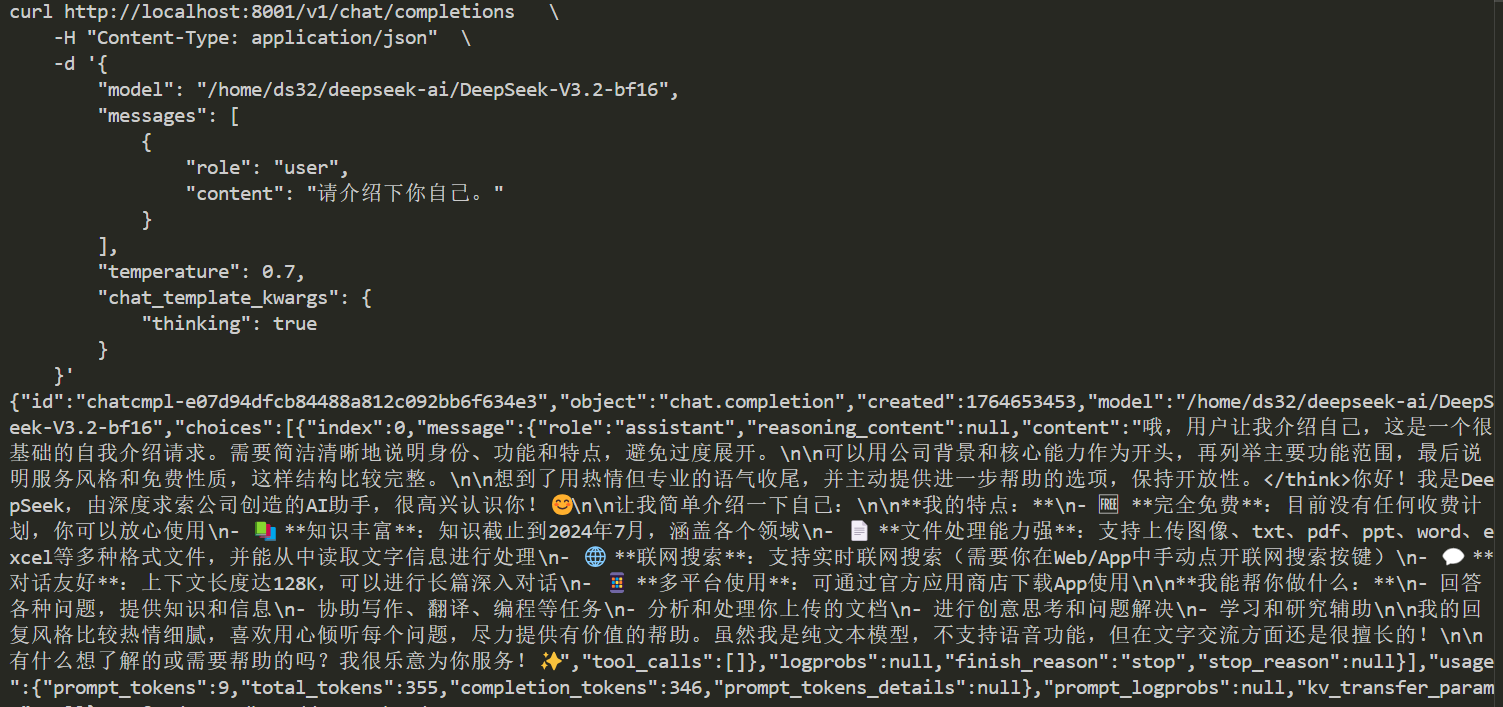

doc/result-dcu.png

0 → 100644

201 KB

icon.png

0 → 100644

53.8 KB

File added

inference/config.json

0 → 100644

inference/fp8_cast_bf16.py

0 → 100644

inference/kernel.py

0 → 100644

model.properties

0 → 100644

vllm-codes/chat_utils.py

0 → 100644

vllm-codes/encoding_dsv32.py

0 → 100644