First init

Contributors.md

0 → 100644

DeepSeek_OCR_paper.pdf

0 → 100644

File added

LICENSE

0 → 100644

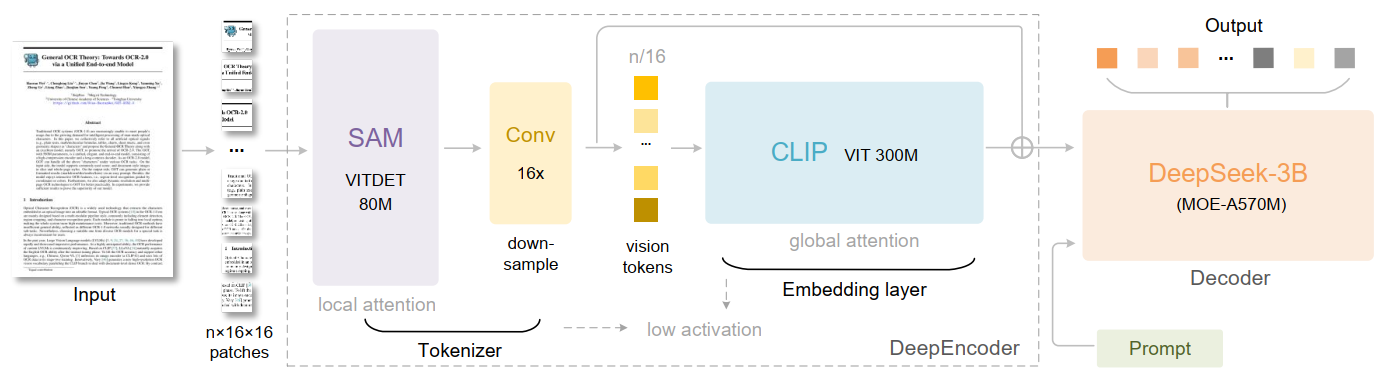

doc/model.png

0 → 100644

124 KB

docker/Dockerfile

0 → 100644

icon.png

0 → 100644

61 KB