update codes

.gitignore

0 → 100644

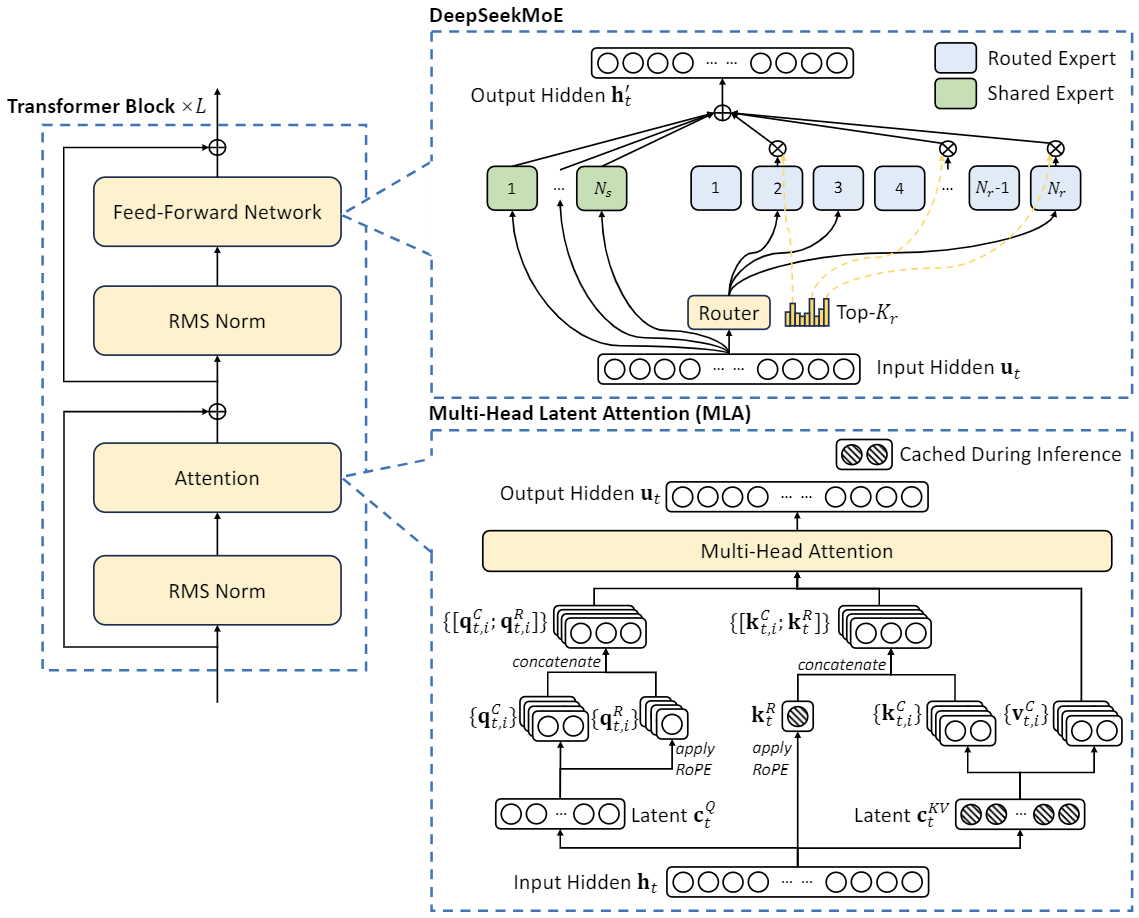

assets/model_framework.png

0 → 100644

165 KB

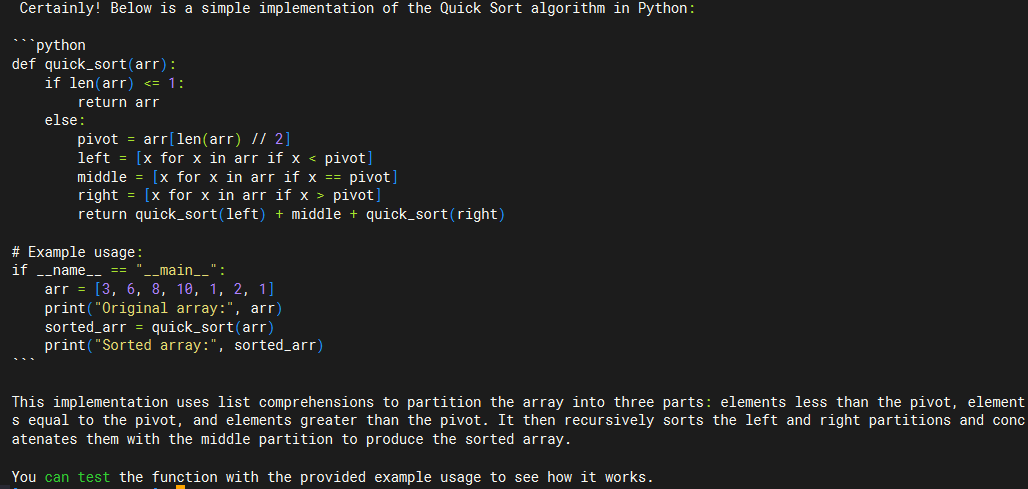

assets/result.png

0 → 100644

29.4 KB

docker/Dockerfile

0 → 100644

icon.png

0 → 100644

62.1 KB

inference.py

0 → 100644

model.properties

0 → 100644

requirements.txt

0 → 100644

#torch>=2.0

#tokenizers>=0.14.0

#transformers==4.35.0

#accelerate

#deepspeed==0.12.2

sympy==1.12

pebble

timeout-decorator

accelerate

attrdict

tqdm

datasets

tensorboardX