update

LICENSE

0 → 100644

README.md

0 → 100644

README_ori.md

0 → 100644

datasets/__init__.py

0 → 100644

datasets/cityscapes.py

0 → 100644

datasets/data/train_aug.txt

0 → 100644

datasets/utils.py

0 → 100644

datasets/voc.py

0 → 100644

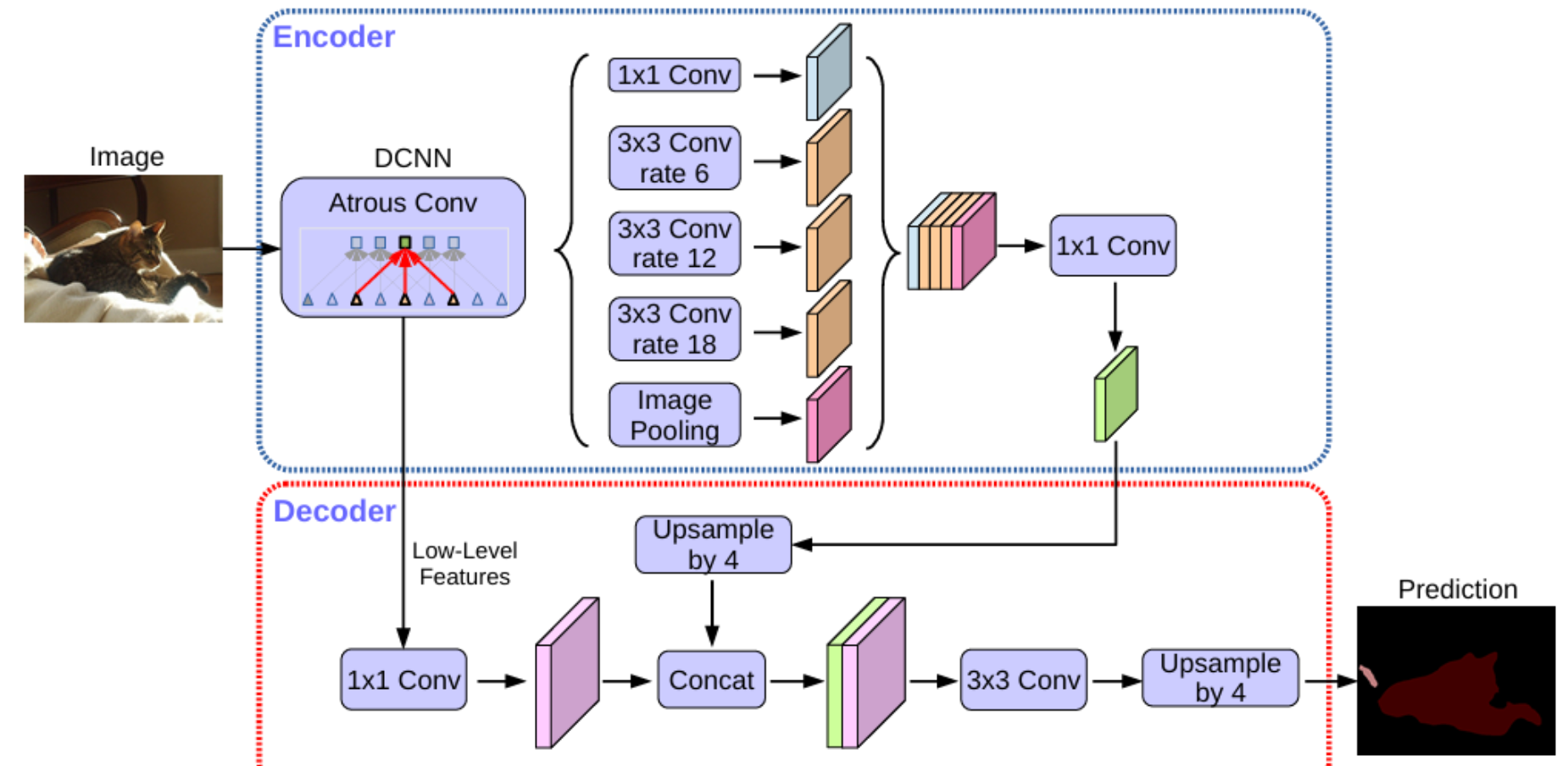

image.png

0 → 100644

418 KB

main.py

0 → 100644

metrics/__init__.py

0 → 100644

metrics/stream_metrics.py

0 → 100644

model.properties

0 → 100644

network/__init__.py

0 → 100644

network/_deeplab.py

0 → 100644

network/backbone/__init__.py

0 → 100644

network/backbone/hrnetv2.py

0 → 100644

network/backbone/resnet.py

0 → 100644

network/backbone/xception.py

0 → 100644