update

parents

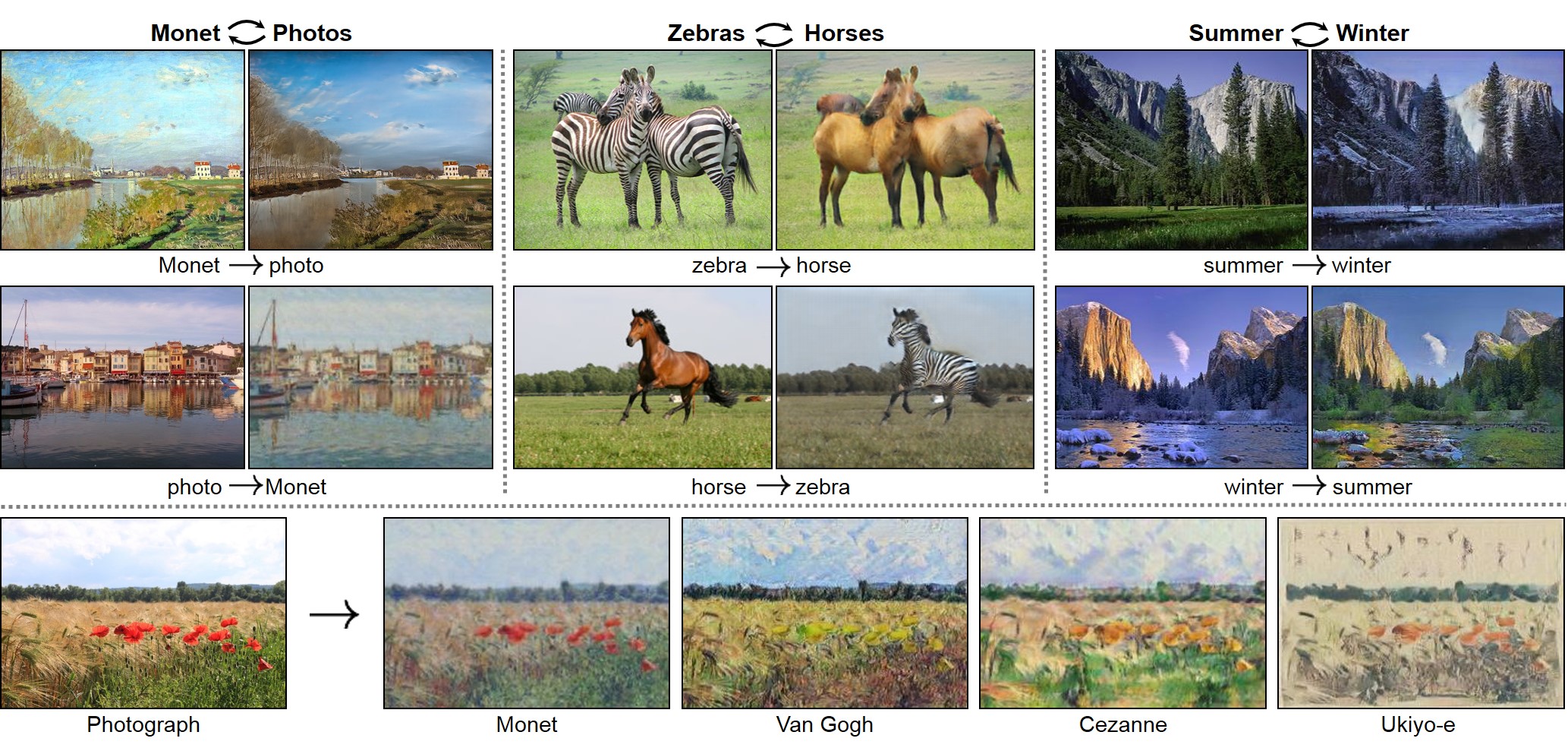

Showing

imgs/season.jpg

0 → 100644

407 KB

imgs/teaser.jpg

0 → 100644

554 KB

model.properties

0 → 100644

models/architectures.lua

0 → 100644

models/base_model.lua

0 → 100644

models/bigan_model.lua

0 → 100644

models/content_gan_model.lua

0 → 100644

models/cycle_gan_model.lua

0 → 100644

models/pix2pix_model.lua

0 → 100644

options.lua

0 → 100644

test.lua

0 → 100644

train.lua

0 → 100644

util/VGG_preprocess.lua

0 → 100644

util/content_loss.lua

0 → 100644