update

parents

Showing

.gitignore

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_ori.md

0 → 100644

data/aligned_data_loader.lua

0 → 100644

data/base_data_loader.lua

0 → 100644

data/data.lua

0 → 100644

data/data_util.lua

0 → 100644

data/dataset.lua

0 → 100644

data/donkey_folder.lua

0 → 100644

examples/train_maps.sh

0 → 100644

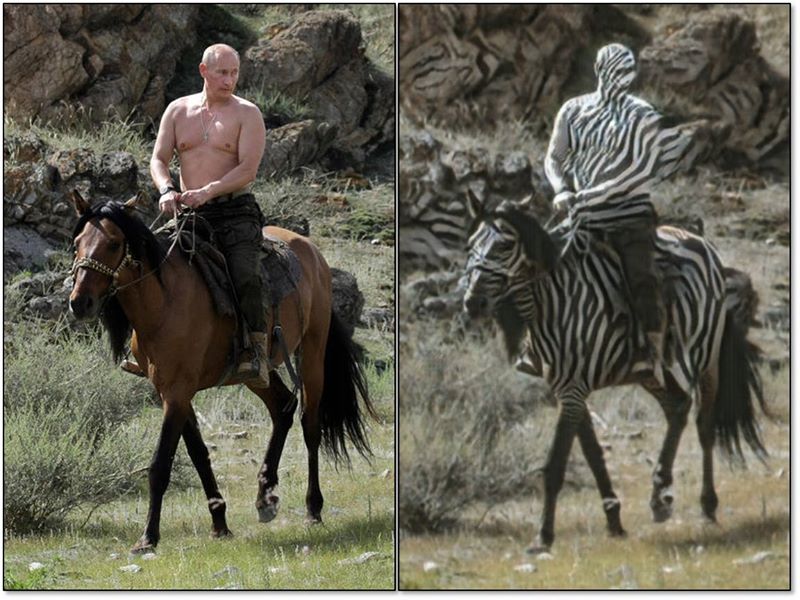

imgs/failure_putin.jpg

0 → 100644

98.4 KB

imgs/horse2zebra.gif

0 → 100644

7.33 MB

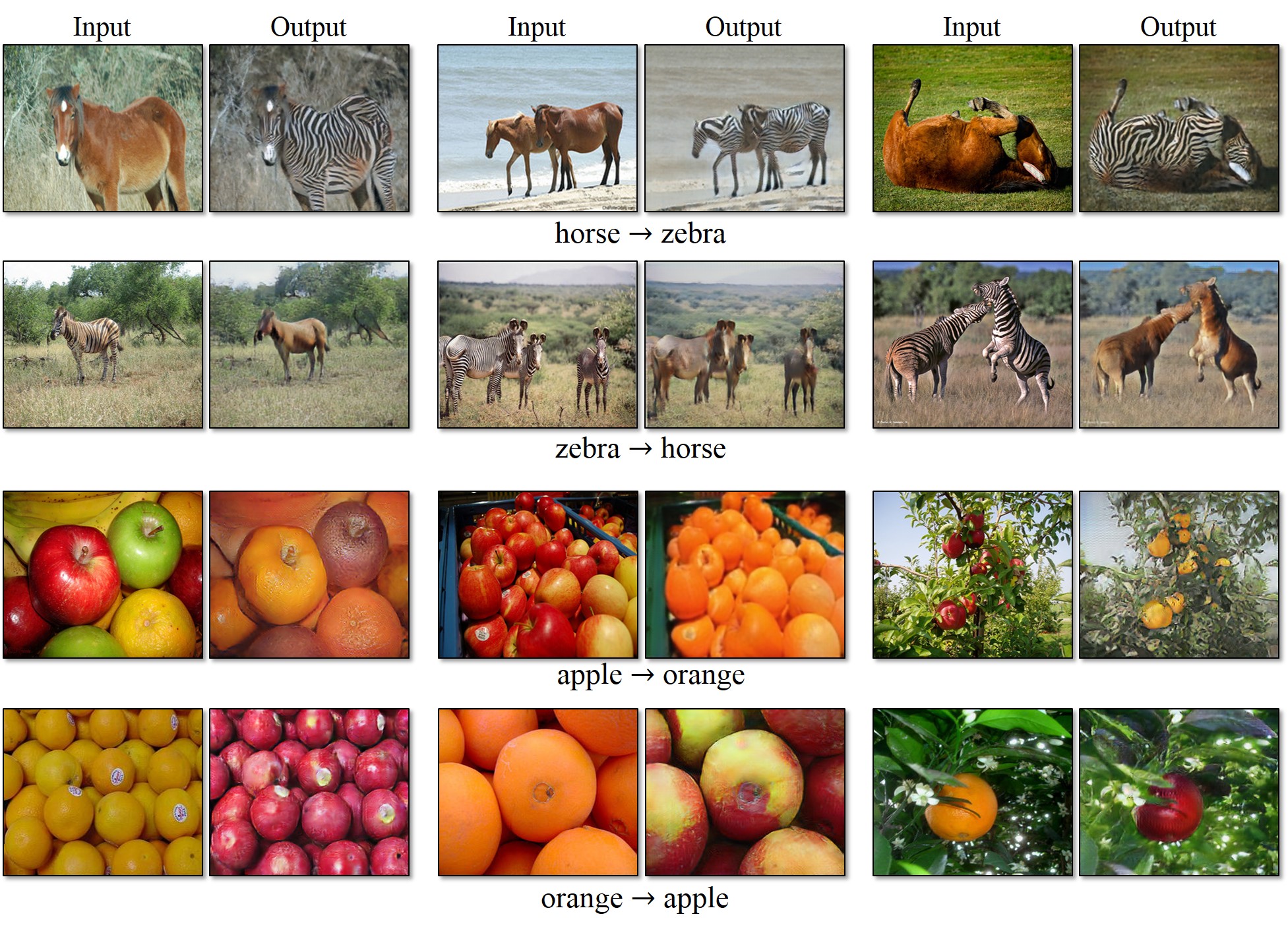

imgs/objects.jpg

0 → 100644

681 KB

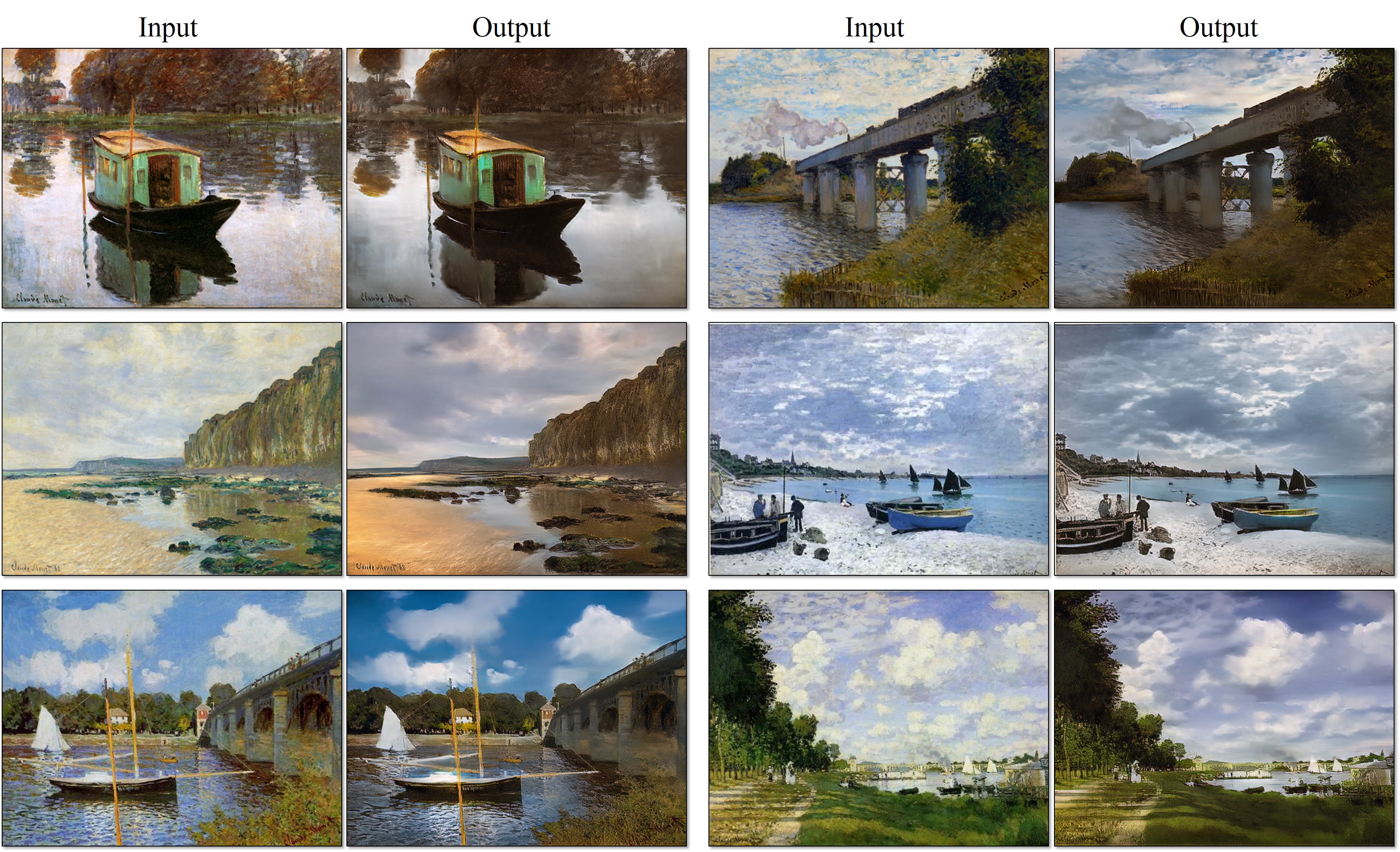

imgs/painting2photo.jpg

0 → 100644

1.41 MB

imgs/paper_thumbnail.jpg

0 → 100644

8.71 KB

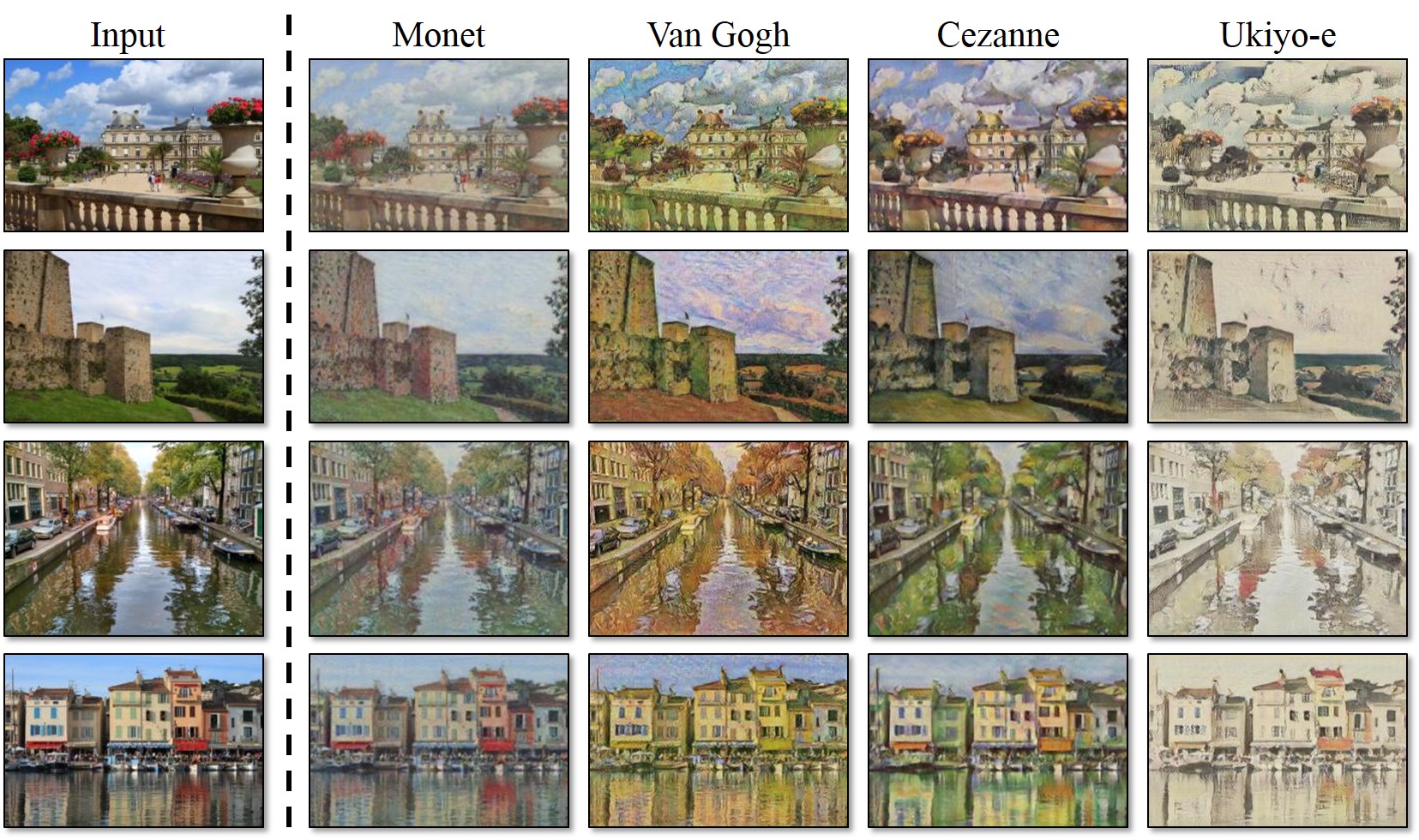

imgs/photo2painting.jpg

0 → 100644

470 KB

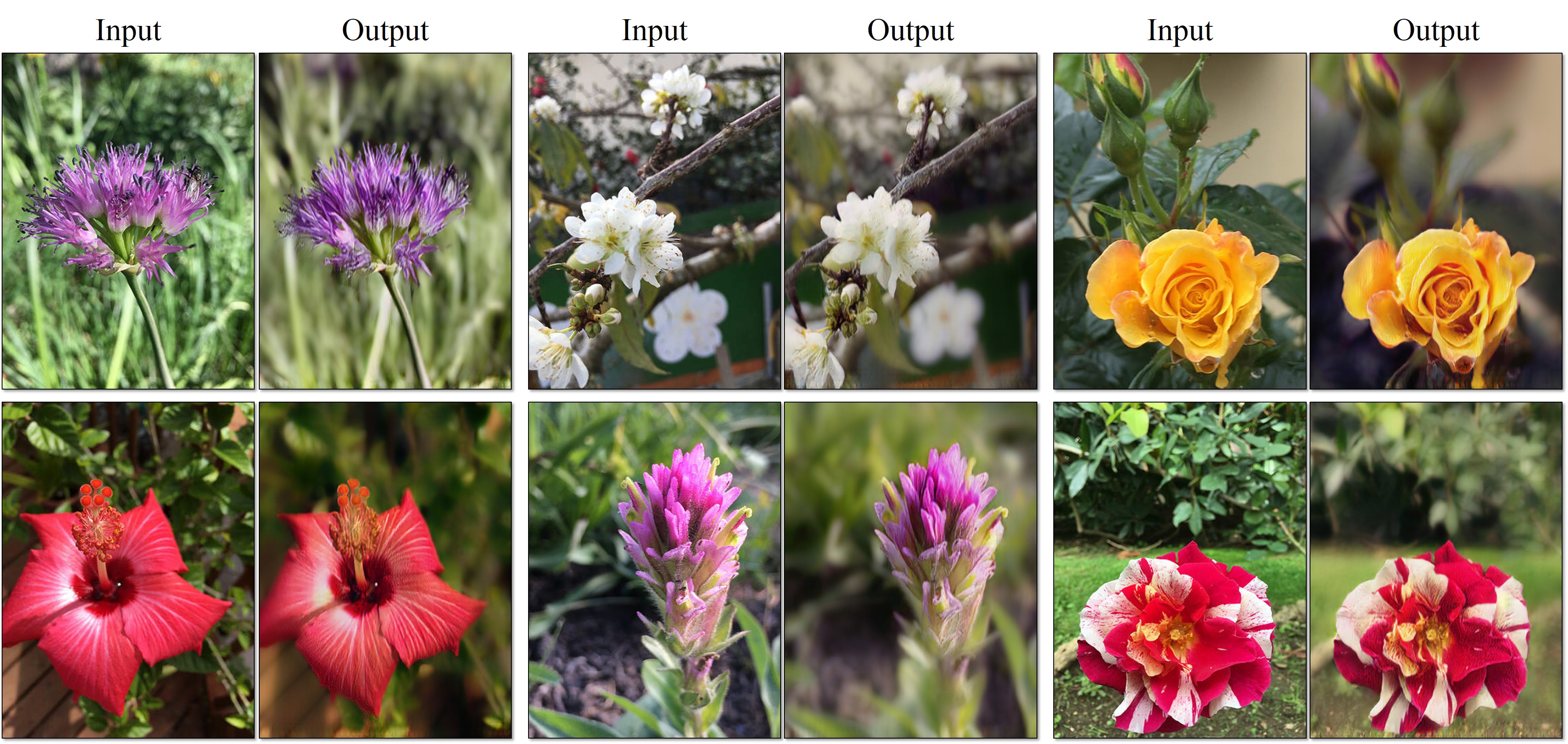

imgs/photo_enhancement.jpg

0 → 100644

1.1 MB