
readme

Showing the files added in this commit (each entry goes from mode 0, meaning no file, to mode 100644):

.gitattributes (0 → 100644)

.gitignore (0 → 100644)

LICENSE (0 → 100644)

README.md (0 → 100644)

README_origin.md (0 → 100644)

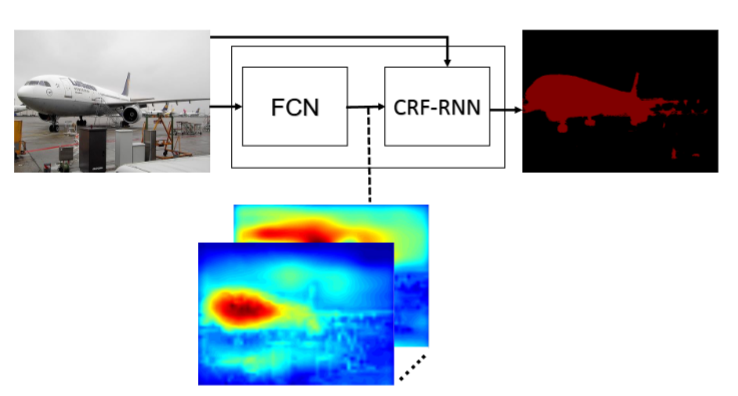

crfasrnn/__init__.py (0 → 100644)

crfasrnn/crfasrnn_model.py (0 → 100644)

crfasrnn/crfrnn.py (0 → 100644)

crfasrnn/fcn8s.py (0 → 100644)

crfasrnn/filters.py (0 → 100644)

crfasrnn/params.py (0 → 100644)

crfasrnn/permuto.cpp (0 → 100644)

crfasrnn/permutohedral.cpp (0 → 100644)

crfasrnn/permutohedral.h (0 → 100644)

crfasrnn/setup.py (0 → 100644)

crfasrnn/util.py (0 → 100644)

image.jpg (0 → 100644, 104 KB)

image.png (0 → 100644, 164 KB)

quick_run.py (0 → 100644)

requirements.txt (0 → 100644)
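Every file above shows the same mode change, from 0 to 100644. Git records file modes as octal numbers: 0 means the path did not exist before the commit, and 100644 is a regular, non-executable file. Other modes are 100755 (executable), 120000 (symbolic link), 040000 (directory/tree), and 160000 (submodule). The minimal sketch below decodes such a change string with Python's standard `stat` module. The helper names `describe_git_mode` and `describe_change` are hypothetical and exist only for illustration.

```python
import stat


def describe_git_mode(mode_str: str) -> str:
    """Decode a git mode string such as '100644' (interpreted as octal)."""
    mode = int(mode_str, 8)
    if mode == 0:
        return "absent (file did not exist)"
    if mode == 0o160000:
        # Gitlink entries (submodules) have no POSIX file-type equivalent.
        return "gitlink (submodule)"
    if stat.S_ISLNK(mode):
        return "symbolic link"
    if stat.S_ISDIR(mode):
        return "directory (tree)"
    if stat.S_ISREG(mode):
        perms = stat.S_IMODE(mode)  # keep only the permission bits, e.g. 0o644
        kind = "executable" if perms & 0o111 else "non-executable"
        return f"regular file, {kind} ({oct(perms)[2:]})"
    return "unknown"


def describe_change(change: str) -> str:
    """Decode an 'old → new' mode change as shown in the listing."""
    old, new = (part.strip() for part in change.split("→"))
    return f"{describe_git_mode(old)} -> {describe_git_mode(new)}"


if __name__ == "__main__":
    print(describe_change("0 → 100644"))
```

Running the sketch on the listed change reports a newly created, non-executable regular file. That matches the commit, which adds every file without setting the executable bit.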