codeformer

.gitignore

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_official.md

0 → 100644

assets/CodeFormer_logo.png

0 → 100644

5.14 KB

669 KB

503 KB

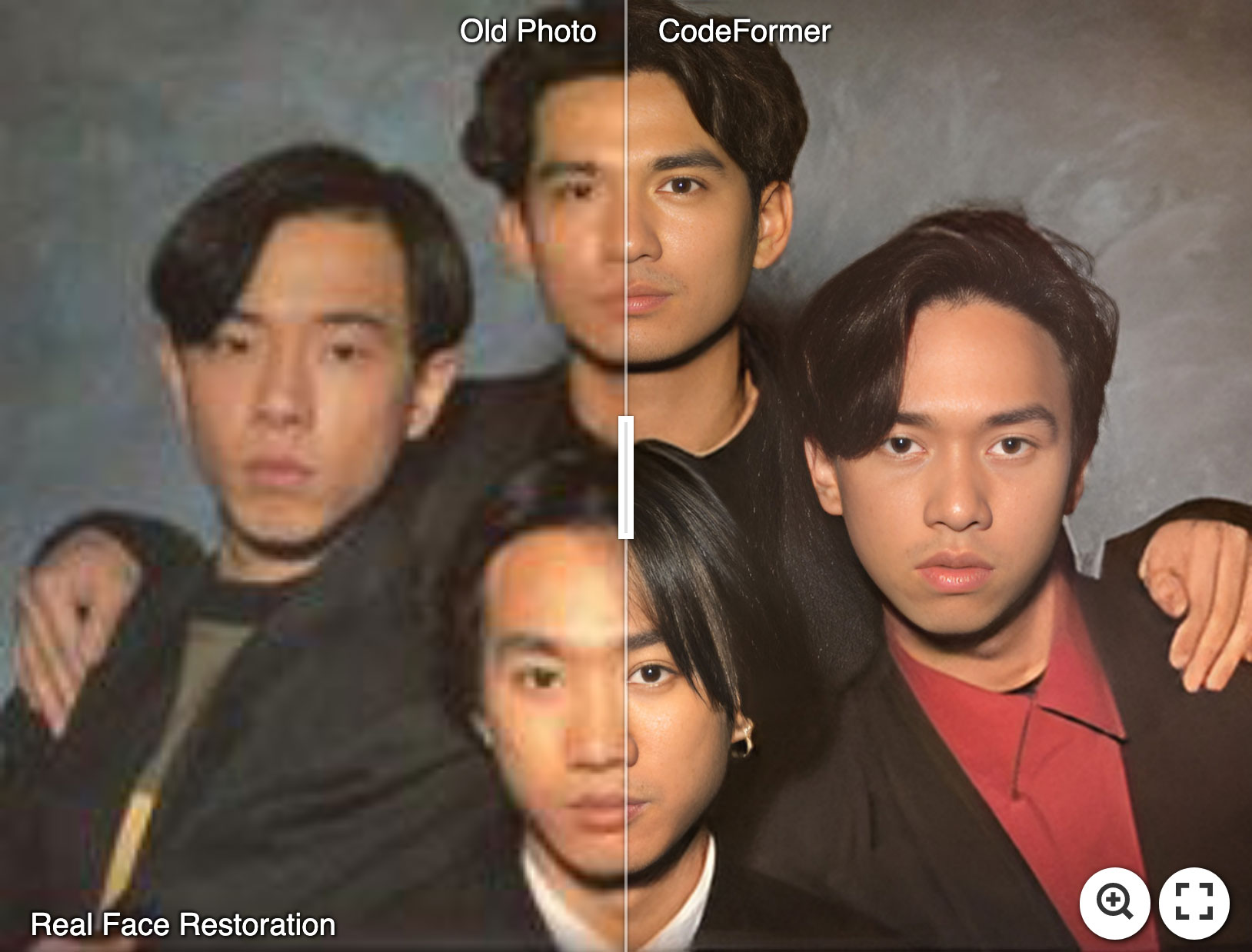

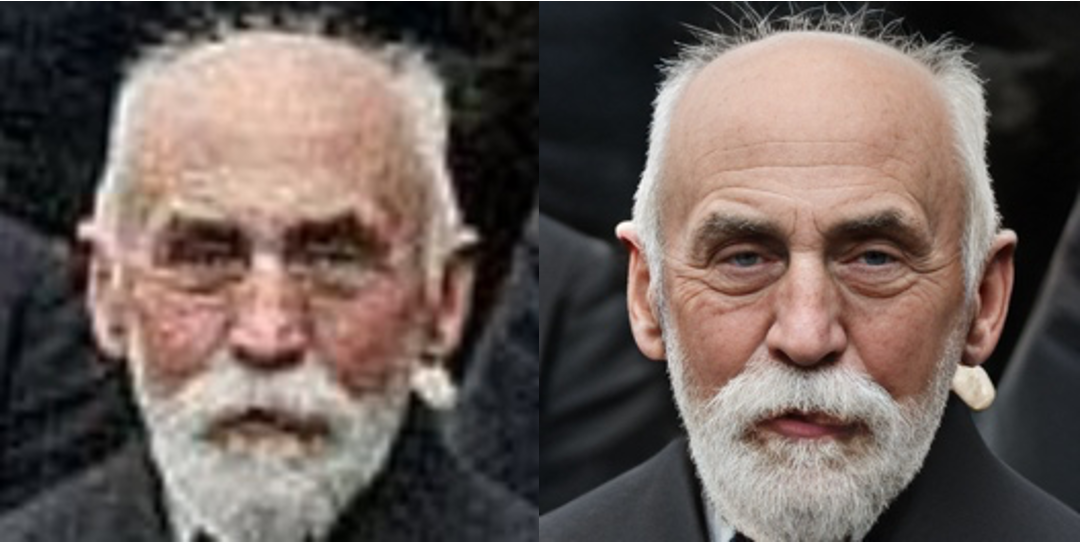

assets/imgsli_1.jpg

0 → 100644

192 KB

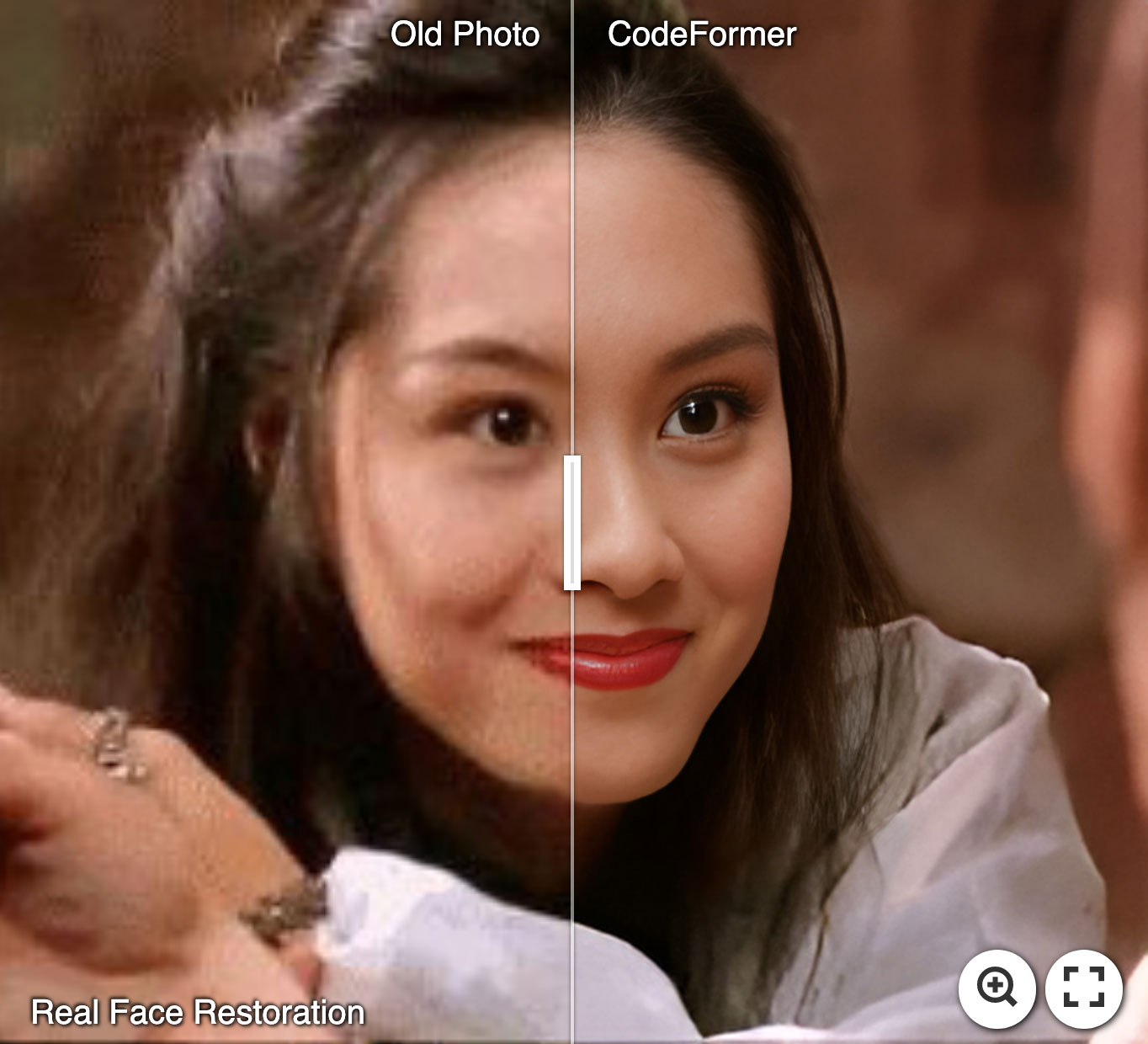

assets/imgsli_2.jpg

0 → 100644

145 KB

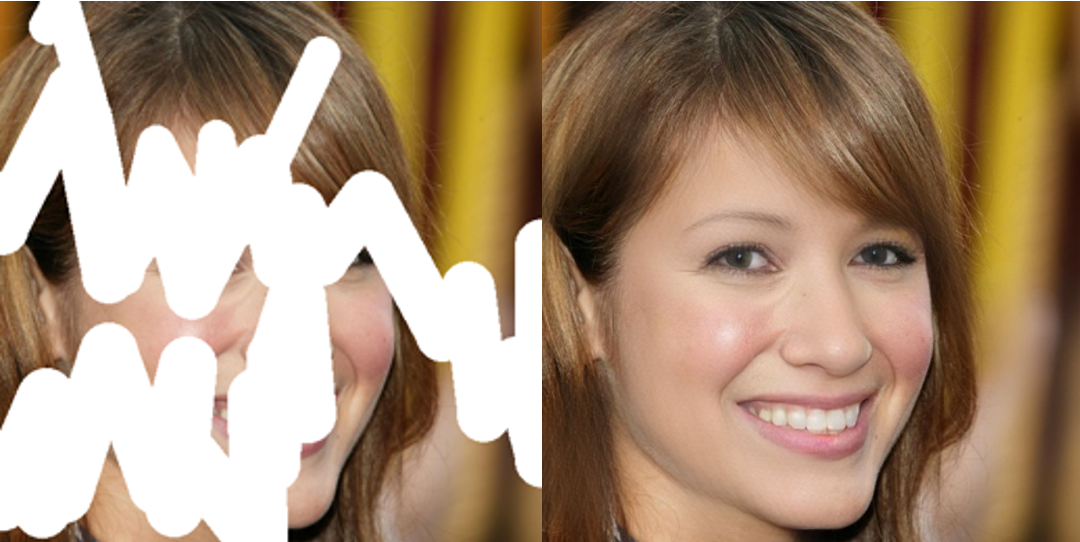

assets/imgsli_3.jpg

0 → 100644

223 KB

671 KB

749 KB

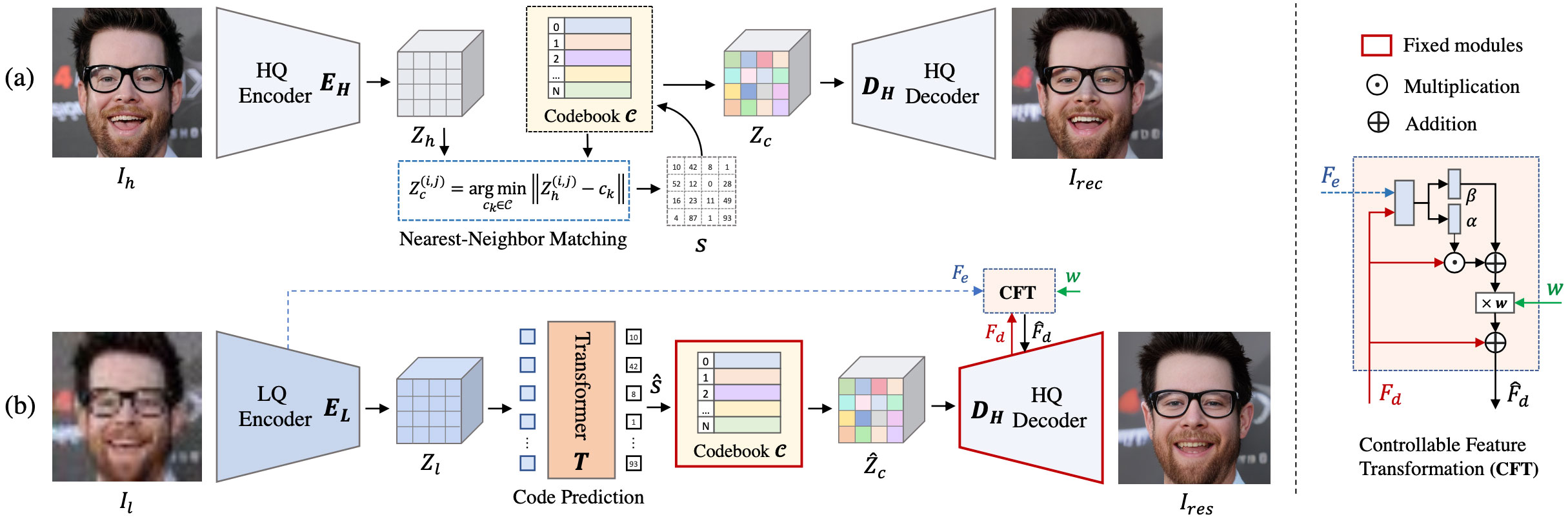

assets/network.jpg

0 → 100644

233 KB

811 KB

805 KB

735 KB

681 KB

basicsr/VERSION

0 → 100644

basicsr/__init__.py

0 → 100644