inference


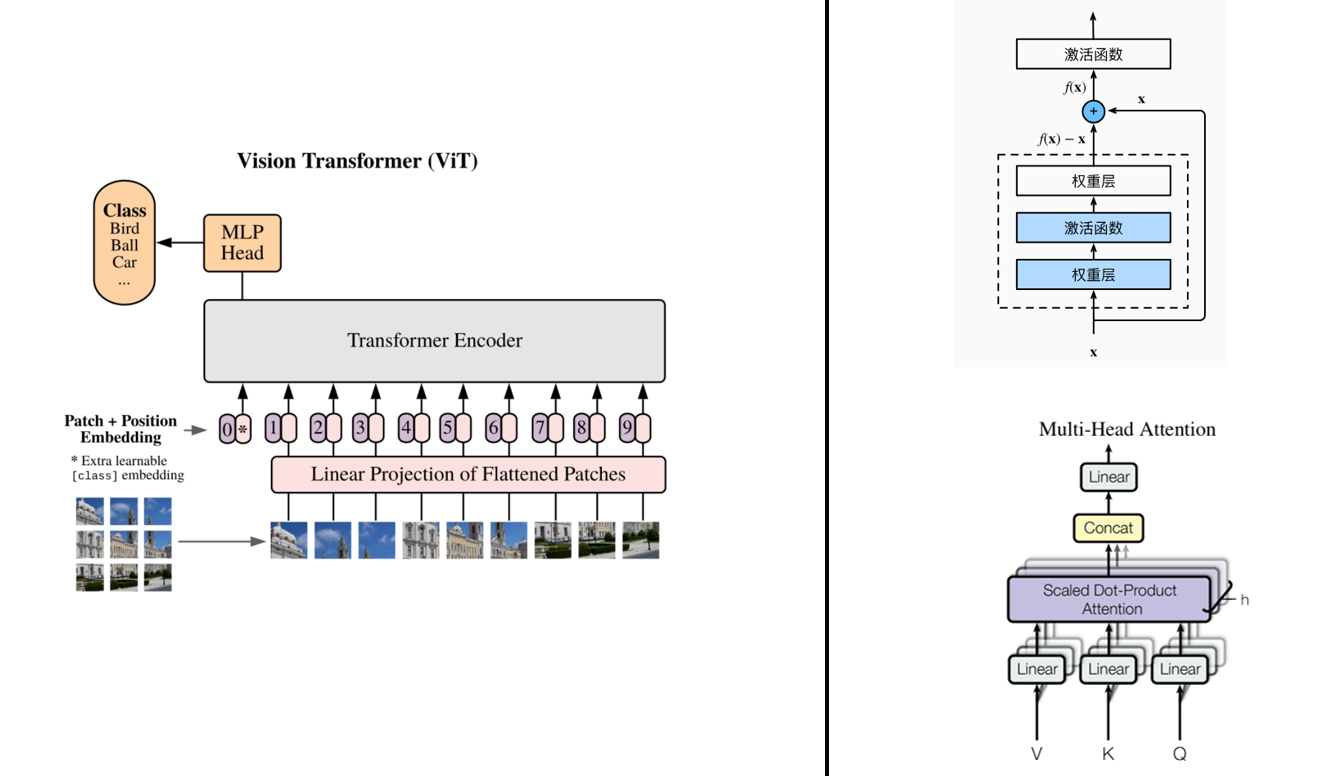

readme_imgs/alg.png

0 → 100644

69.6 KB

readme_imgs/model.png

0 → 100644

200 KB

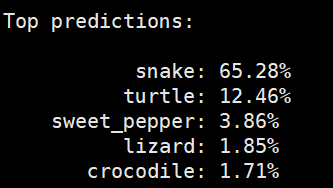

readme_imgs/r.png

0 → 100644

8.74 KB

requirements.txt

0 → 100644

ftfy
packaging
regex
tqdm
scikit-learn
# torch
# torchvision

setup.py

0 → 100644

tests/linear_probe.py

0 → 100644

tests/simple_test.py

0 → 100644

tests/test_consistency.py

0 → 100644
