Inference branch

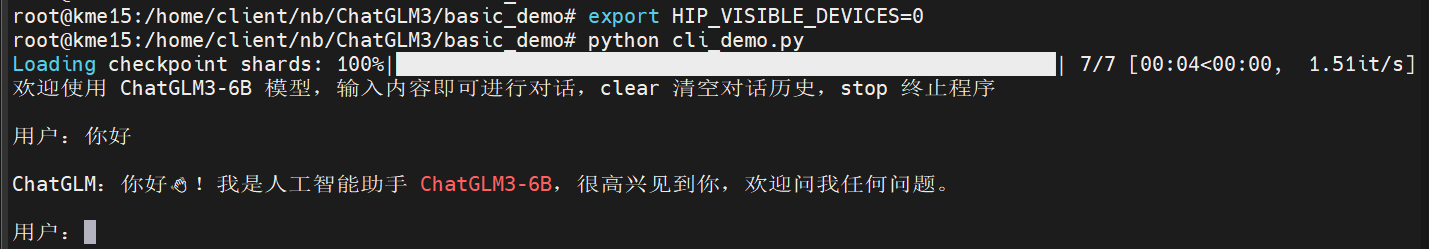

media/cli_demo.png

0 → 100644

39.5 KB

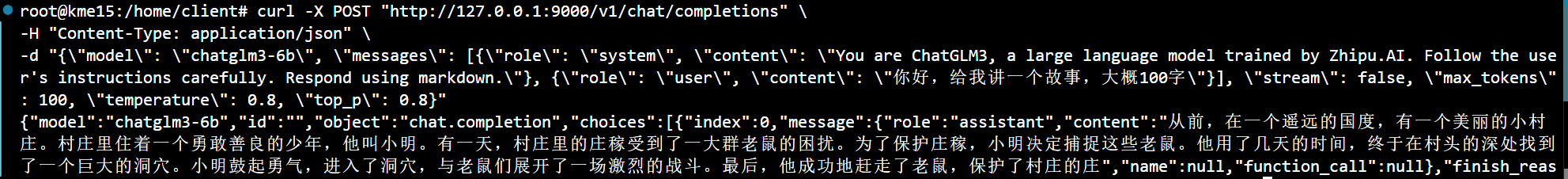

media/client.png

0 → 100644

83.1 KB

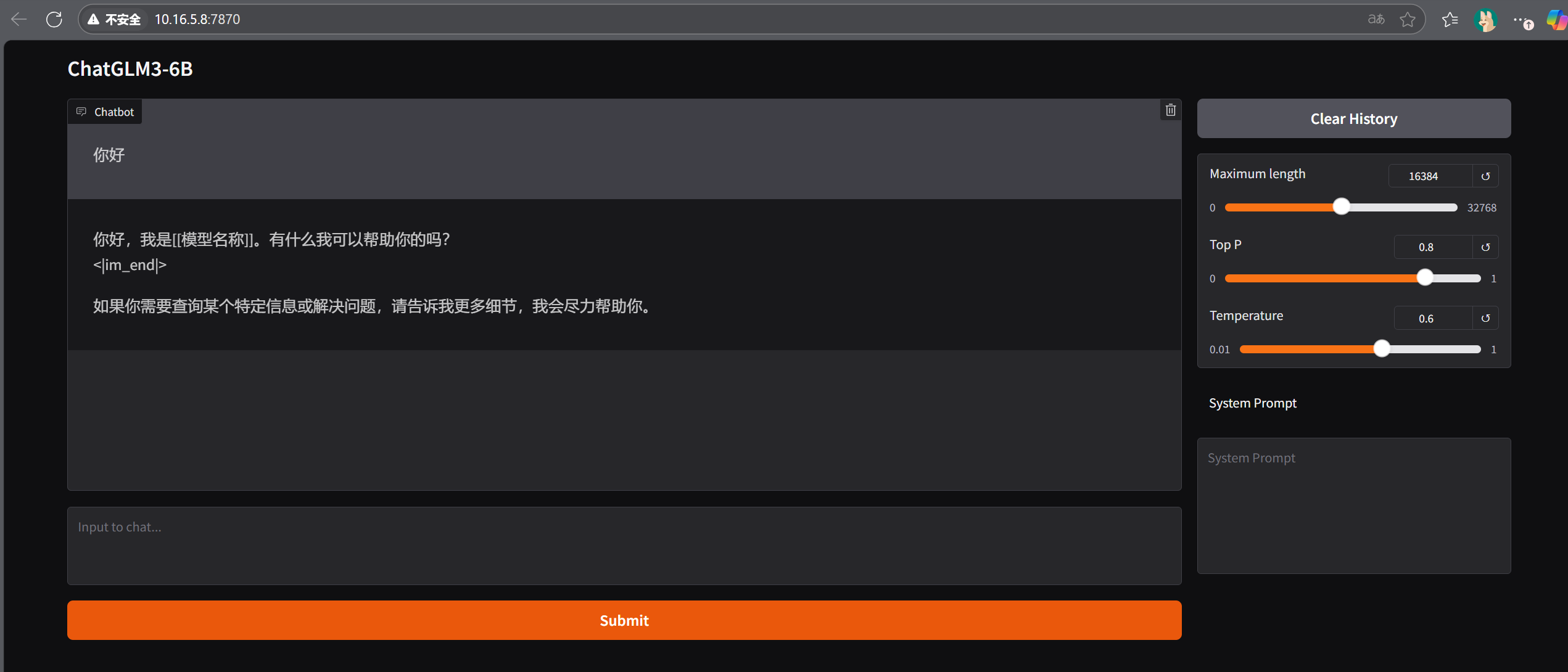

media/gradio.png

0 → 100644

126 KB

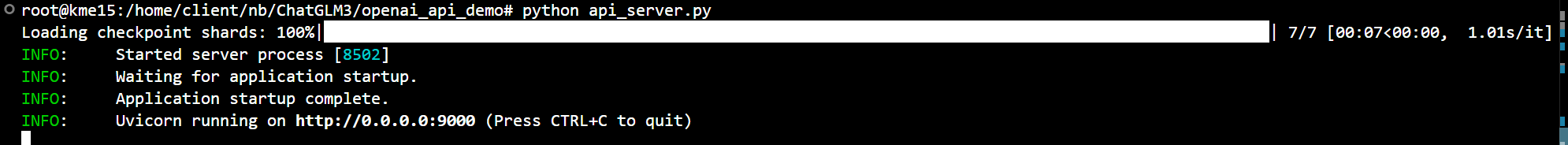

media/server.png

0 → 100644

28.9 KB

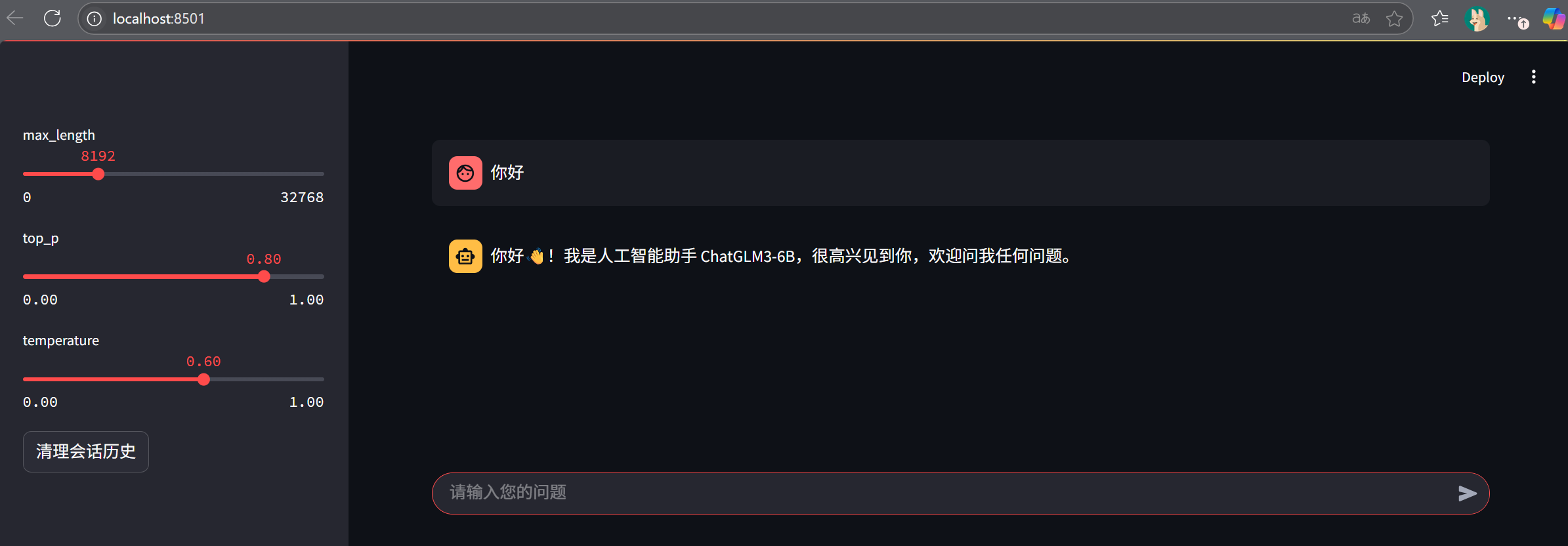

media/streamlit.png

0 → 100644

90.6 KB