Initial commit


3rdParty/InstallRBuild.sh (new file, mode 100644)

File added (5 entries; file names not shown in this listing)

CMakeLists.txt (new file, mode 100644)

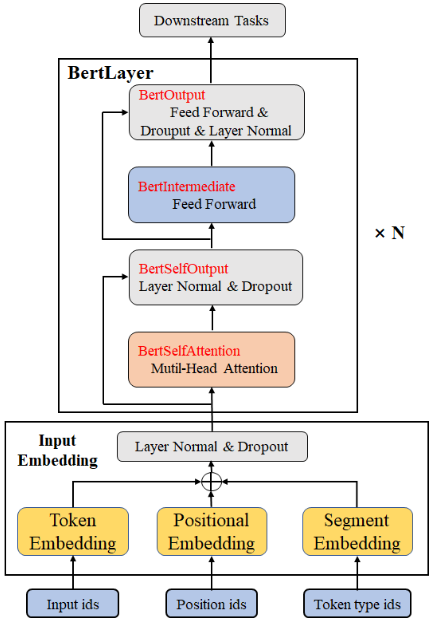

Doc/Images/Bert_01.png (new file, mode 100644, 62.8 KB)

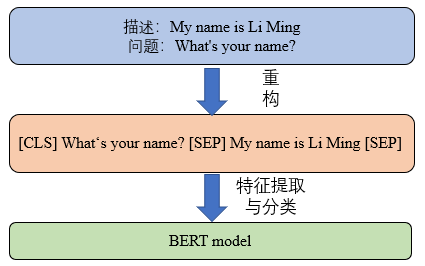

Doc/Images/Bert_02.png (new file, mode 100644, 10.2 KB)

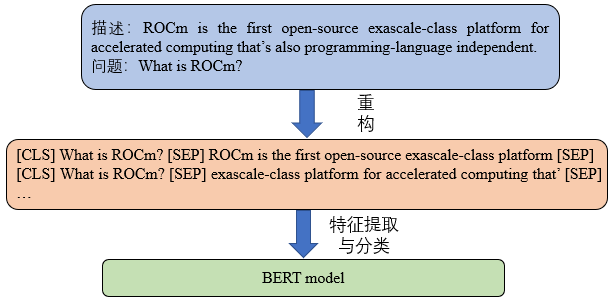

Doc/Images/Bert_03.png (new file, mode 100644, 19 KB)

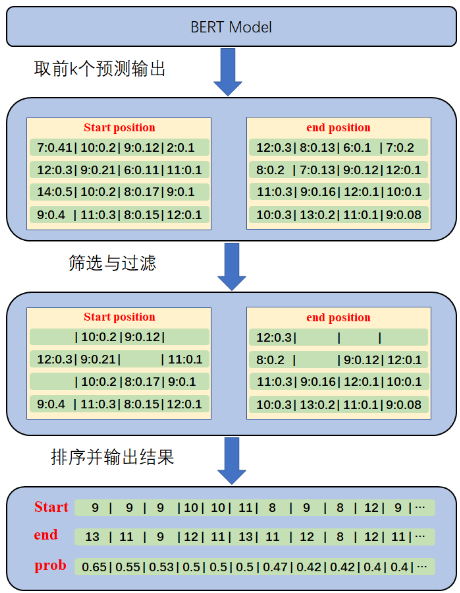

Doc/Images/Bert_04.png (new file, mode 100644, 74.7 KB)

Doc/Tutorial_Cpp.md (new file, mode 100644)

Doc/Tutorial_Python.md (new file, mode 100644)

Python/bert.py (new file, mode 100644)

Python/requirements.txt (new file, mode 100644)

Python/run_onnx_squad.py (new file, mode 100644)

README.md (new file, mode 100644)

Resource/inputs_data.json (new file, mode 100644)

Src/Bert.cpp (new file, mode 100644)

Src/Bert.h (new file, mode 100644)