
ModelZoo / BERT-Large-squad_onnxruntime

Commit f6d1e016, authored May 06, 2025 by chenzk

Update sf.md

Parent: 56eabd55

Showing 1 changed file with 4 additions and 4 deletions: README.md (+4, -4)


README.md @ f6d1e016

...

...

@@ -4,11 +4,11 @@

## Model Structure

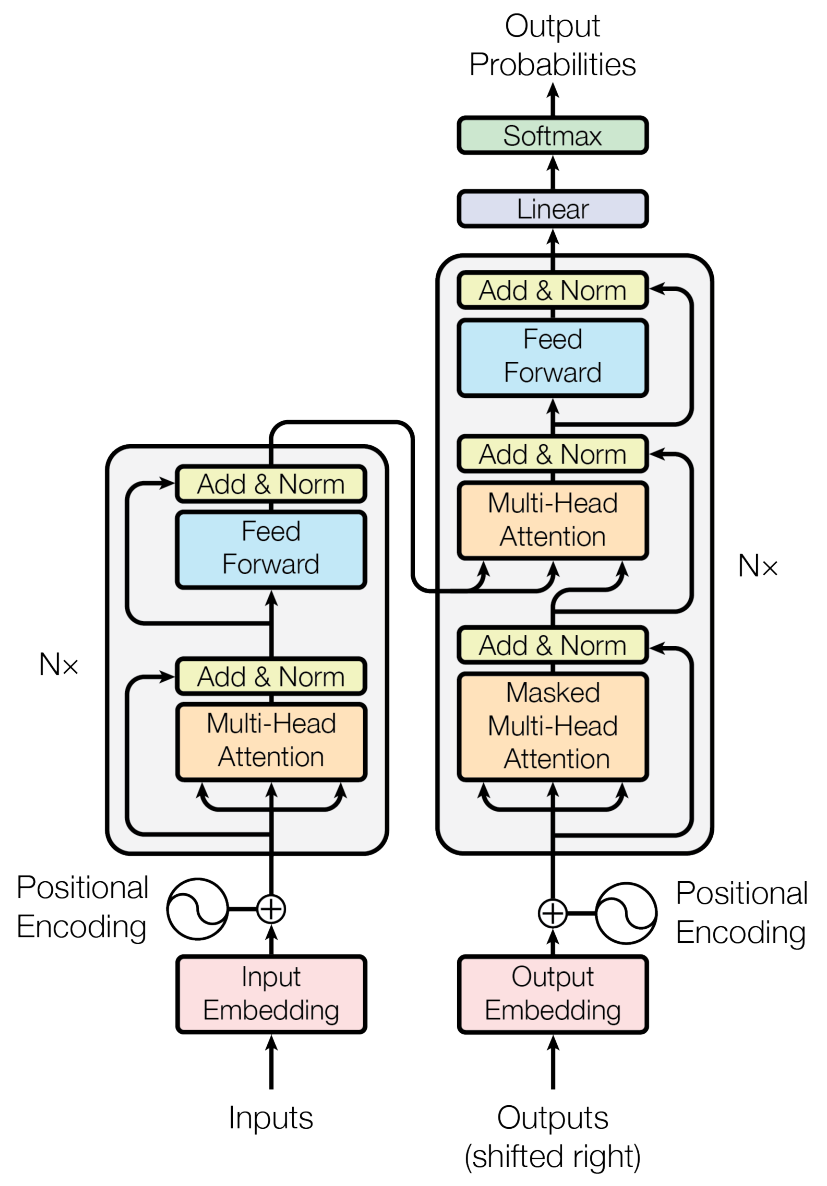

The core of bert_large_squad is the Transformer, whose structure is as follows:
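As a rough illustration of the Transformer's core operation, here is a minimal NumPy sketch of multi-head scaled dot-product self-attention. It is not taken from this repository's ONNX model. The weights are random, and biases, attention masking, LayerNorm and the feed-forward sublayer are omitted. The hidden size (1024) and head count (16) follow the parameters listed in this README. The sequence length of 8 and the function name `multi_head_self_attention` are hypothetical choices made for illustration.

```python
import numpy as np

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Illustrative multi-head scaled dot-product self-attention.

    Simplified on purpose: no biases, no masking, no LayerNorm/FFN.
    """
    seq_len, hidden = x.shape
    head_dim = hidden // num_heads

    def split_heads(t):
        # (seq_len, hidden) -> (num_heads, seq_len, head_dim)
        return t.reshape(seq_len, num_heads, head_dim).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Attention scores per head, scaled by sqrt(head_dim): (num_heads, seq_len, seq_len)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over key positions

    context = weights @ v  # (num_heads, seq_len, head_dim)
    # Concatenate heads back to (seq_len, hidden), then apply the output projection
    context = context.transpose(1, 0, 2).reshape(seq_len, hidden)
    return context @ w_o, weights

# Hypothetical toy input: random activations and weights, BERT-Large sizes
rng = np.random.default_rng(0)
hidden, heads, seq_len = 1024, 16, 8
x = rng.standard_normal((seq_len, hidden))
w_q, w_k, w_v, w_o = (rng.standard_normal((hidden, hidden)) * 0.02 for _ in range(4))

out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, heads)
print(out.shape, attn.shape)
```

Each head attends over the full sequence with its own 64-dimensional slice of the projections, and the heads are concatenated back to the 1024-dimensional hidden size.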

## Algorithm Principle

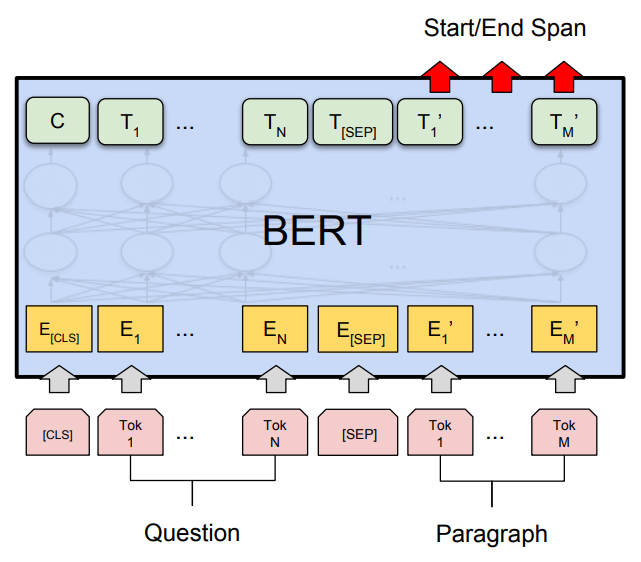

The main parameters of the bert_large_squad model are 24 Transformer layers, a hidden size of 1024, and 16 self-attention heads. The basic principle can be summarized by the figure below:
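These hyperparameters fix the per-head dimension and the size of the encoder stack. The sketch below does that arithmetic under assumptions not stated in this README. It assumes the standard BERT layout: a feed-forward inner size of 4 × hidden, biases on every dense layer, and two LayerNorms per layer. `bert_encoder_params` is a hypothetical helper. Embeddings and the SQuAD span-prediction output layer are not counted.

```python
def bert_encoder_params(num_layers=24, hidden=1024, num_heads=16, intermediate=None):
    """Hypothetical helper: parameter count of a BERT-style encoder stack."""
    # Assumption: FFN inner size defaults to 4 * hidden, as in the original BERT design
    if intermediate is None:
        intermediate = 4 * hidden
    if hidden % num_heads != 0:
        raise ValueError("hidden size must be divisible by the number of heads")
    head_dim = hidden // num_heads

    # Q, K, V and attention-output projections, each with weight + bias
    attention = 4 * (hidden * hidden + hidden)
    # Two dense layers: hidden -> intermediate -> hidden, each with weight + bias
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    # Two LayerNorms per layer, each with gain + bias vectors
    layer_norms = 2 * (2 * hidden)

    per_layer = attention + ffn + layer_norms
    return head_dim, per_layer, num_layers * per_layer

head_dim, per_layer, total = bert_encoder_params()
print(head_dim, per_layer, total)  # → 64 12596224 302309376
```

So each of the 16 heads works in a 64-dimensional subspace, and the 24-layer encoder alone holds roughly 302M parameters under these assumptions.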

## Dataset

No suitable Chinese dataset is currently available.

...

...

@@ -33,7 +33,7 @@ python3 main.py

```

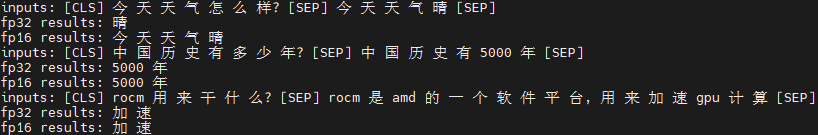

## Results

### Accuracy

Not available yet.

## Application Scenarios

...

...

@@ -42,7 +42,7 @@ python3 main.py

### Key Application Industries

Healthcare, scientific research, finance, education

## Source Code Repository and Issue Feedback

https://developer.hpccube.com/codes/modelzoo/bert_large_squad_onnxruntime

https://developer.sourcefind.cn/codes/modelzoo/bert_large_squad_onnxruntime

## References

https://github.com/google-research/bert
