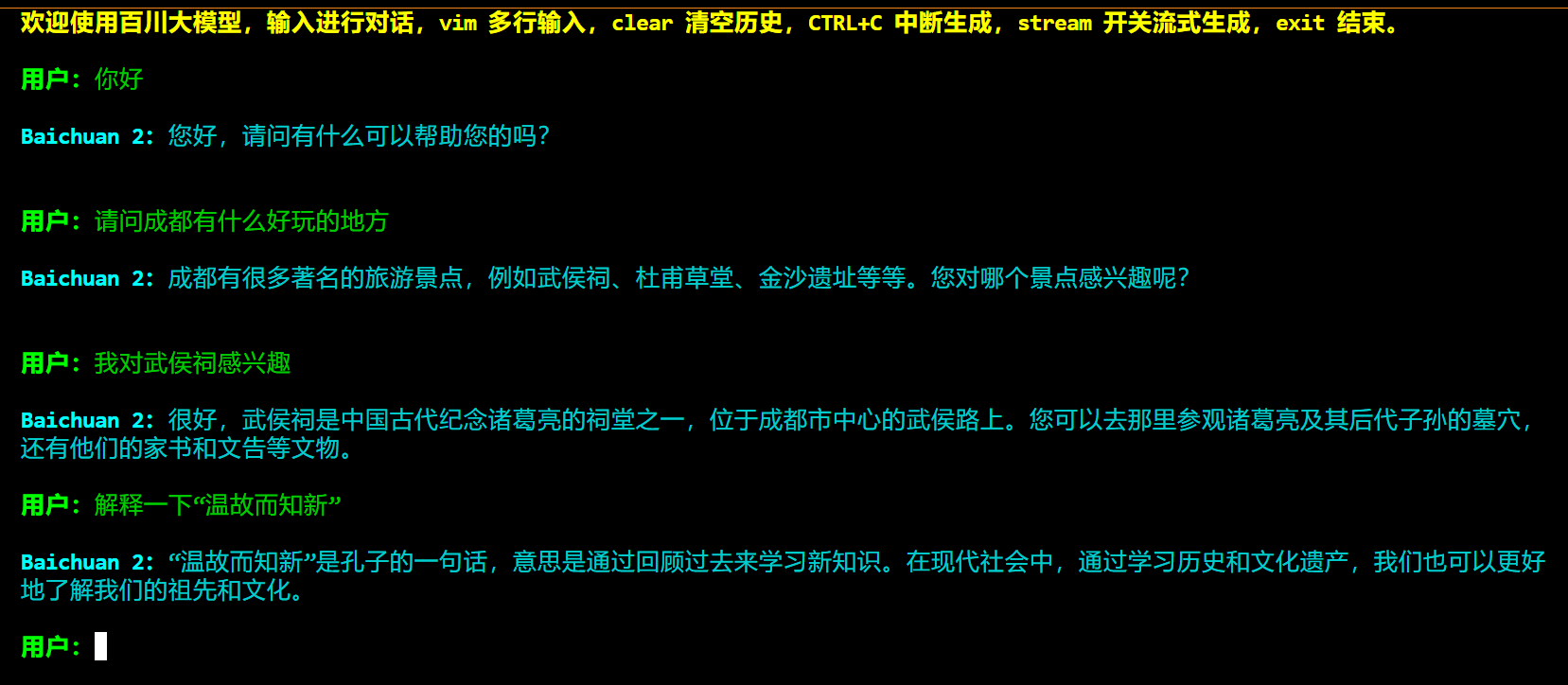

added baichuan2

This diff is collapsed.

media/baichuan2-test.png (new file, mode 0 → 100644, 97.9 KB)