arcface

parents

Showing

docs/prepare_webface42m.md

0 → 100644

docs/speed_benchmark.md

0 → 100644

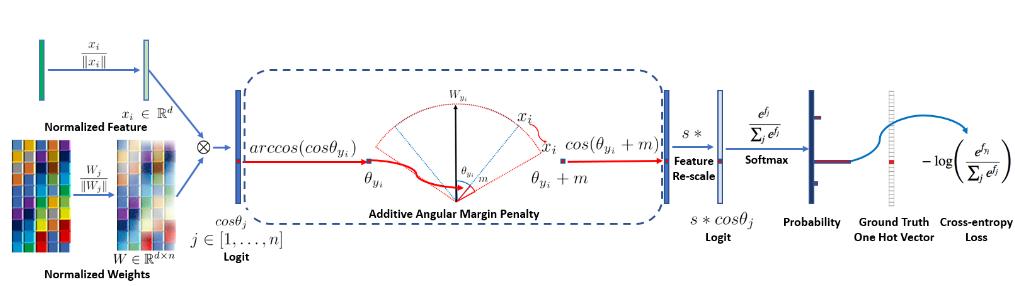

docs/train.jpg

0 → 100644

34 KB

eval/__init__.py

0 → 100644

eval/verification.py

0 → 100644

eval_ijbc.py

0 → 100644

flops.py

0 → 100644

inference.py

0 → 100644

losses.py

0 → 100644

lr_scheduler.py

0 → 100644

onnx_helper.py

0 → 100644

onnx_ijbc.py

0 → 100644

partial_fc_v2.py

0 → 100644

This diff is collapsed.

requirement.txt

0 → 100644

run.sh

0 → 100644

scripts/shuffle_rec.py

0 → 100644

torch2onnx.py

0 → 100644

train_v2.py

0 → 100644

This diff is collapsed.

utils/__init__.py

0 → 100644

utils/plot.py

0 → 100644