arcface

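configs/wf4m_mbf.py (new file, mode 0 → 100644)

configs/wf4m_r100.py (new file, mode 0 → 100644)

configs/wf4m_r50.py (new file, mode 0 → 100644)

dataset.py (new file, mode 0 → 100644)

dist.sh (new file, mode 0 → 100644)

docker/Dockerfile (new file, mode 0 → 100644)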

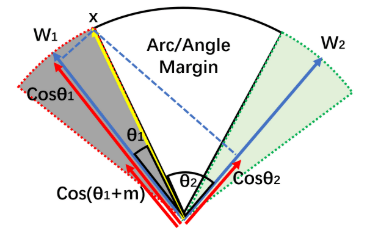

docs/arcface.png (new file, mode 0 → 100644, 16.2 KB)

docs/eval.md (new file, mode 0 → 100644)

docs/install.md (new file, mode 0 → 100644)

docs/install_dali.md (new file, mode 0 → 100644)

docs/modelzoo.md (new file, mode 0 → 100644)