v1.0

Showing

asset/他本是我莲花池里养大的金鱼.wav

0 → 100644

File added

asset/发表一个悲伤的演讲.wav

0 → 100644

File added

asset/发表一个振奋人心的演讲.wav

0 → 100644

File added

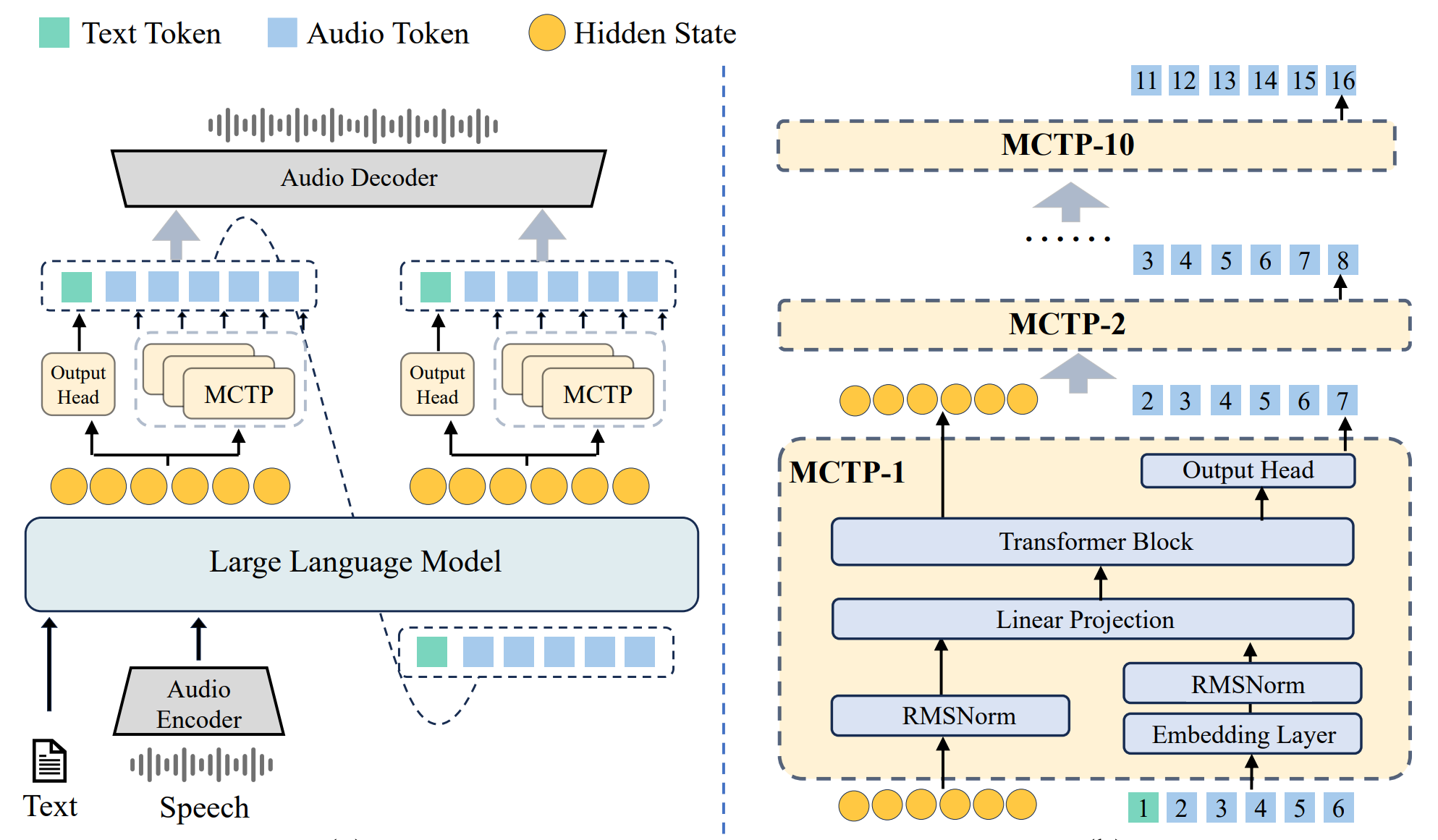

doc/VITA_MCTP.png

0 → 100644

227 KB

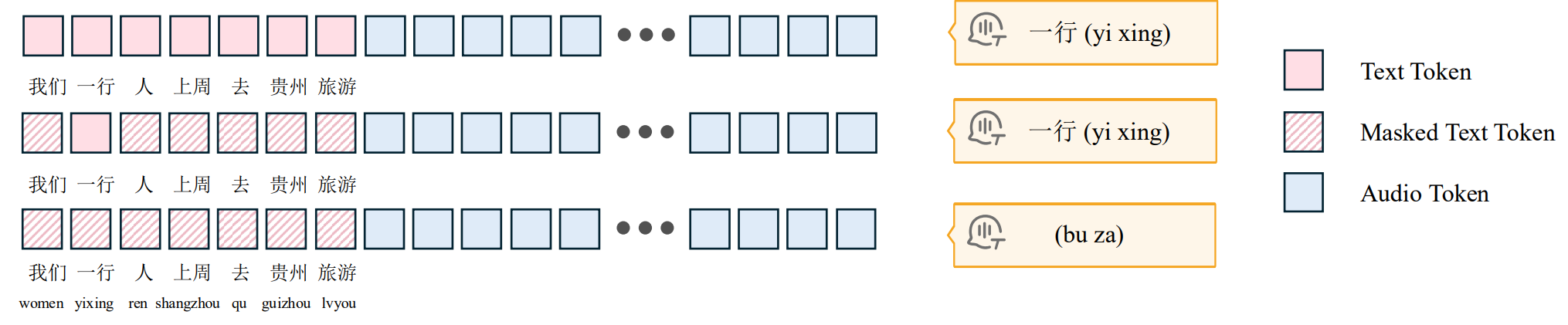

doc/relative.png

0 → 100644

85.2 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_start.sh

0 → 100644

File added

evaluation/compute-cer.py

0 → 100644

This diff is collapsed.

evaluation/compute-wer.py

0 → 100644

This diff is collapsed.

evaluation/evaluate_asr.py

0 → 100644

evaluation/evaluate_sqa.py

0 → 100644

icon.png

0 → 100644

64.4 KB