Docs: update config instructions for vLLM compatibility

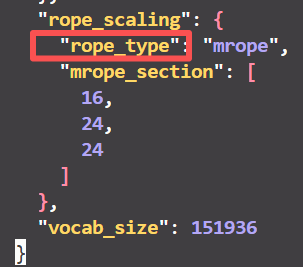

images/after_fix.png

0 → 100644

11.4 KB

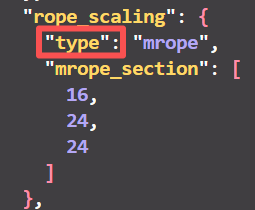

images/before_fix.png

0 → 100644

7.85 KB

images/result1.png

0 → 100644

122 KB
