v1.0

Qwen/QwQ-32B/README.md

0 → 100644

README.md

0 → 100644

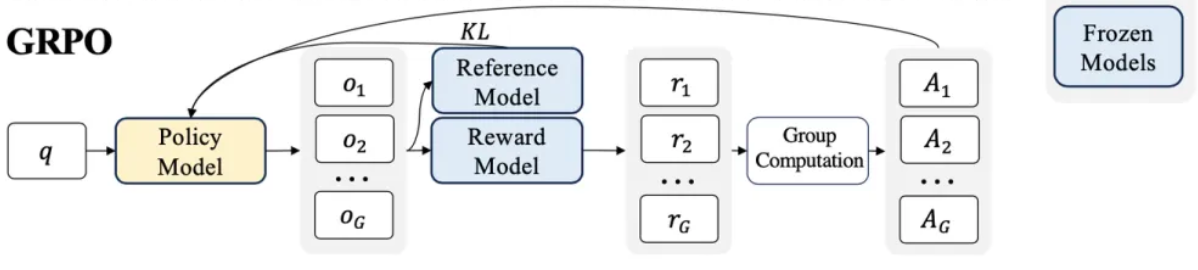

doc/GRPO.png

0 → 100644

108 KB

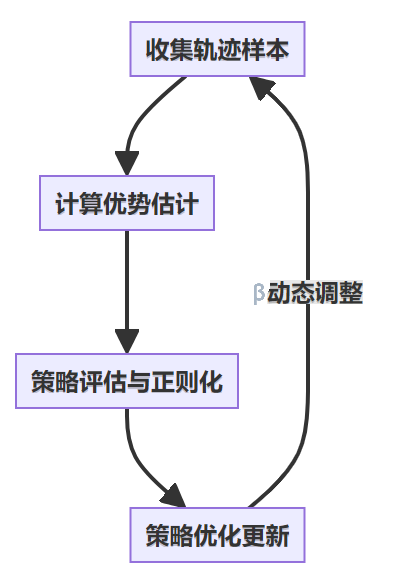

doc/GRPO_flow.png

0 → 100644

36.1 KB

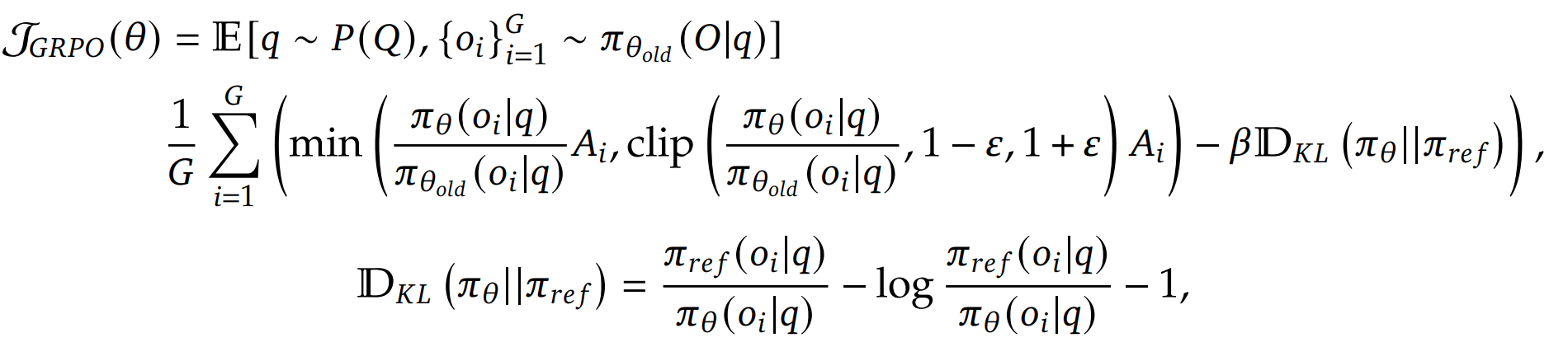

doc/algorithm.png

0 → 100644

97.7 KB

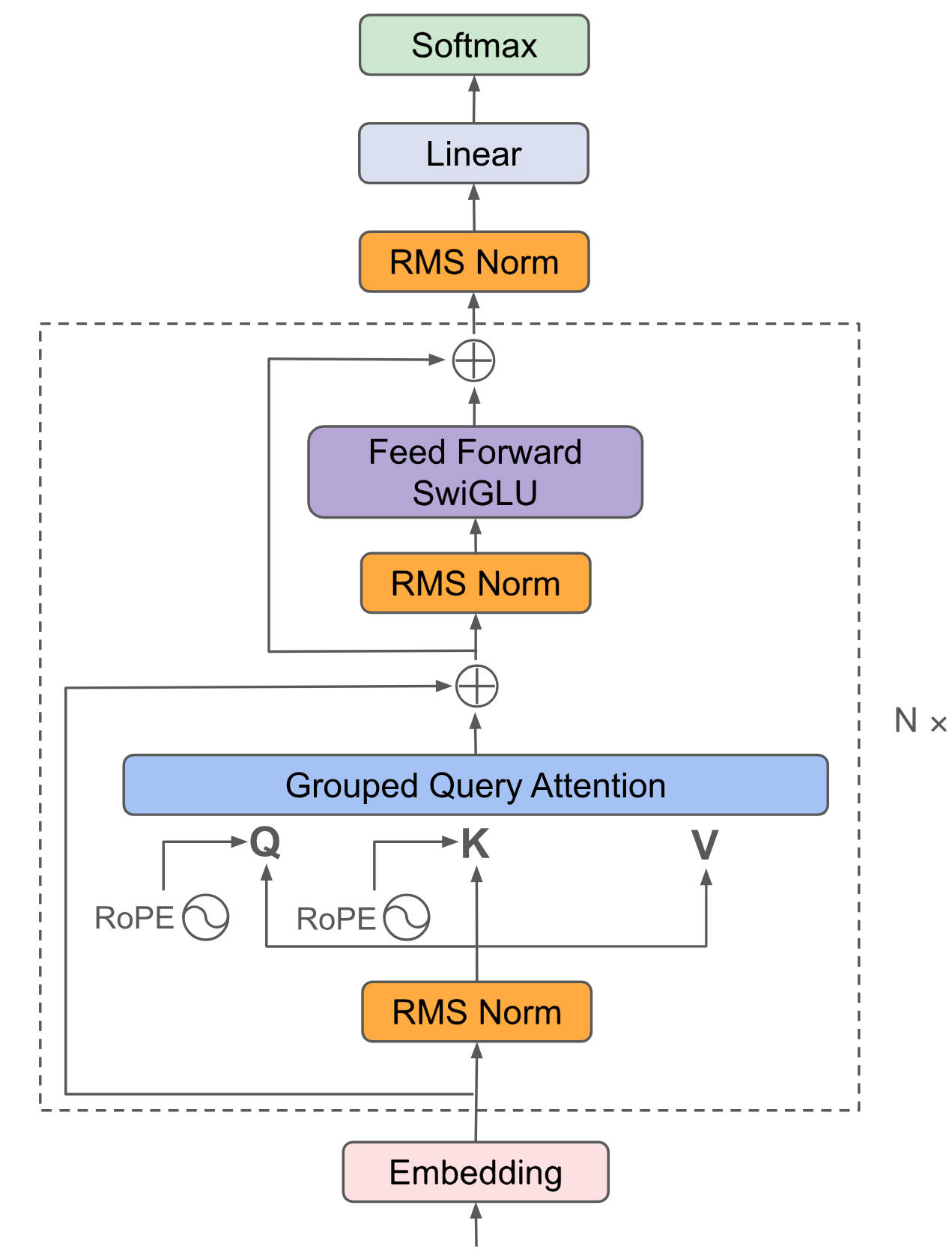

doc/qwen.png

0 → 100644

356 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

icon.png

0 → 100644

53.8 KB

infer.py

0 → 100644

infer_vllm.py

0 → 100644

model.properties

0 → 100644

requirements.txt

0 → 100644

modelscope

\ No newline at end of file

File added