v1.0

LICENSE

0 → 100644

README.md

0 → 100644

README_origin.md

0 → 100644

app.py

0 → 100644

app_chat.py

0 → 100644

app_chat.sh

0 → 100644

assets/brand.png

0 → 100644

329 KB

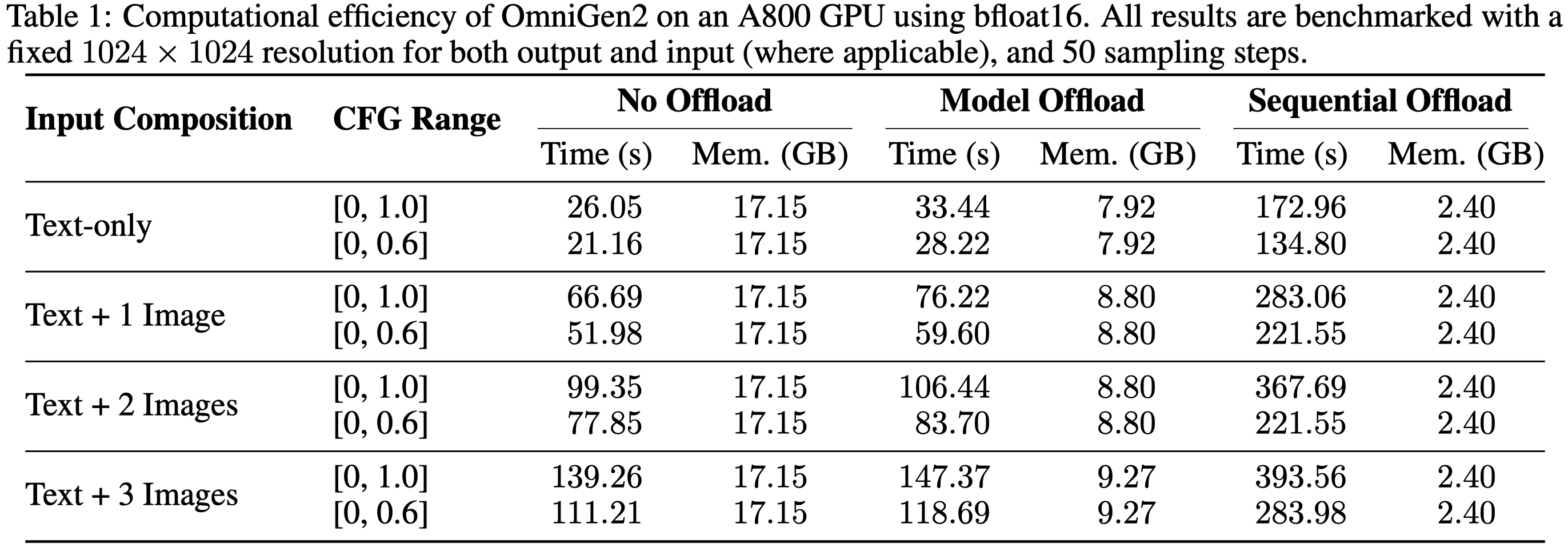

assets/efficiency.png

0 → 100644

293 KB

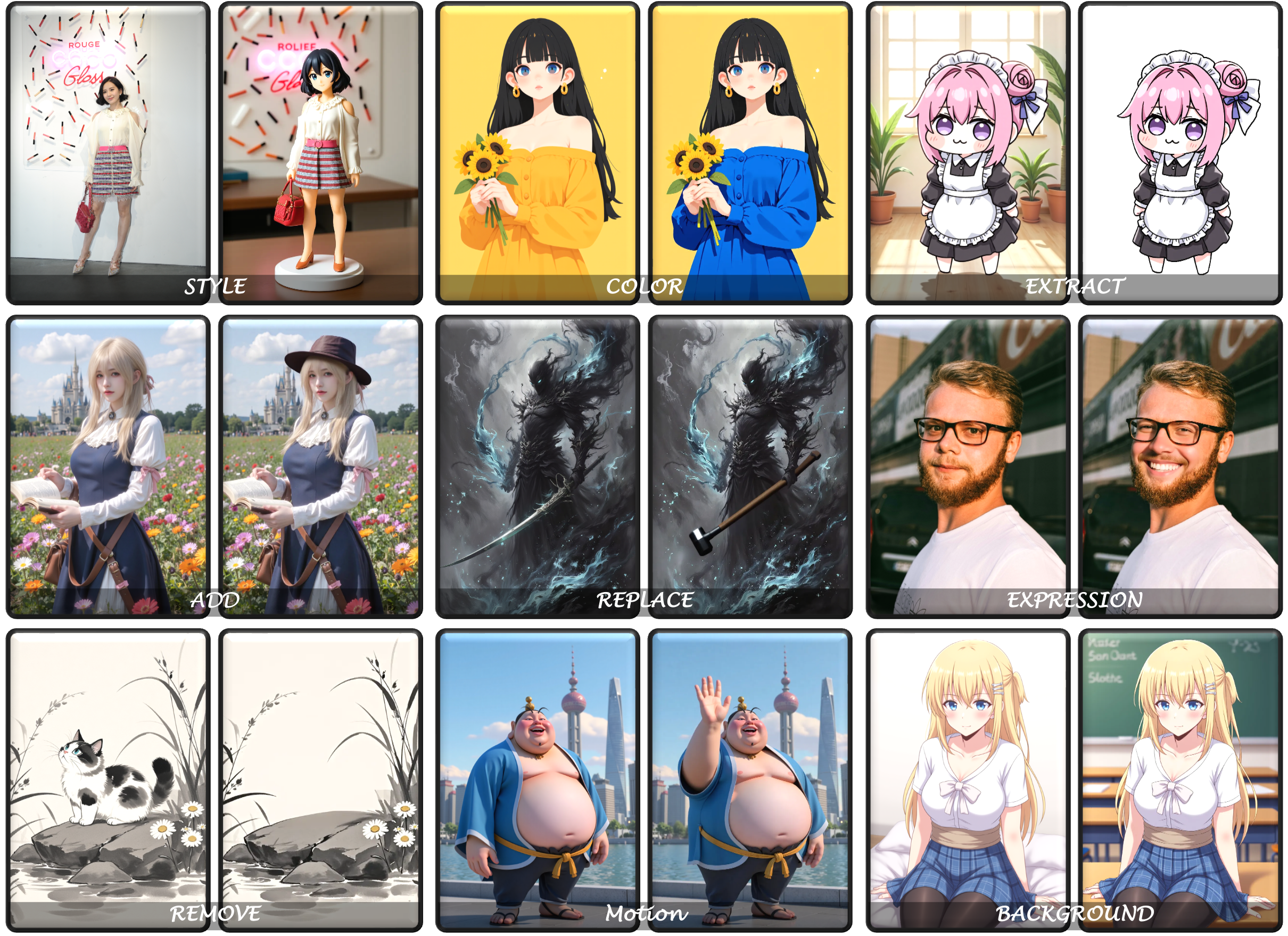

assets/examples_edit.png

0 → 100644

5.42 MB

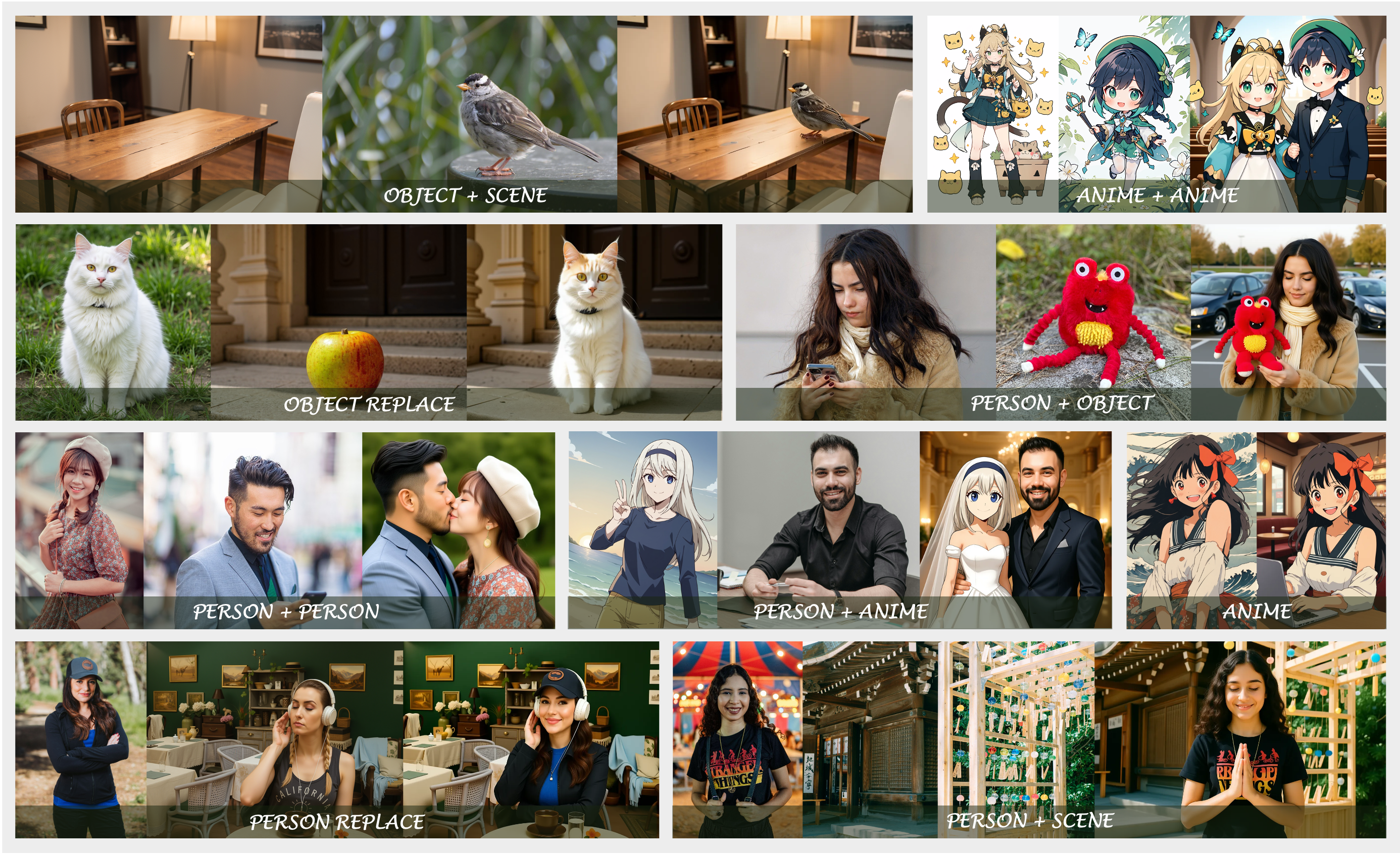

assets/examples_subject.png

0 → 100644

7.41 MB

3.56 MB

5.7 MB

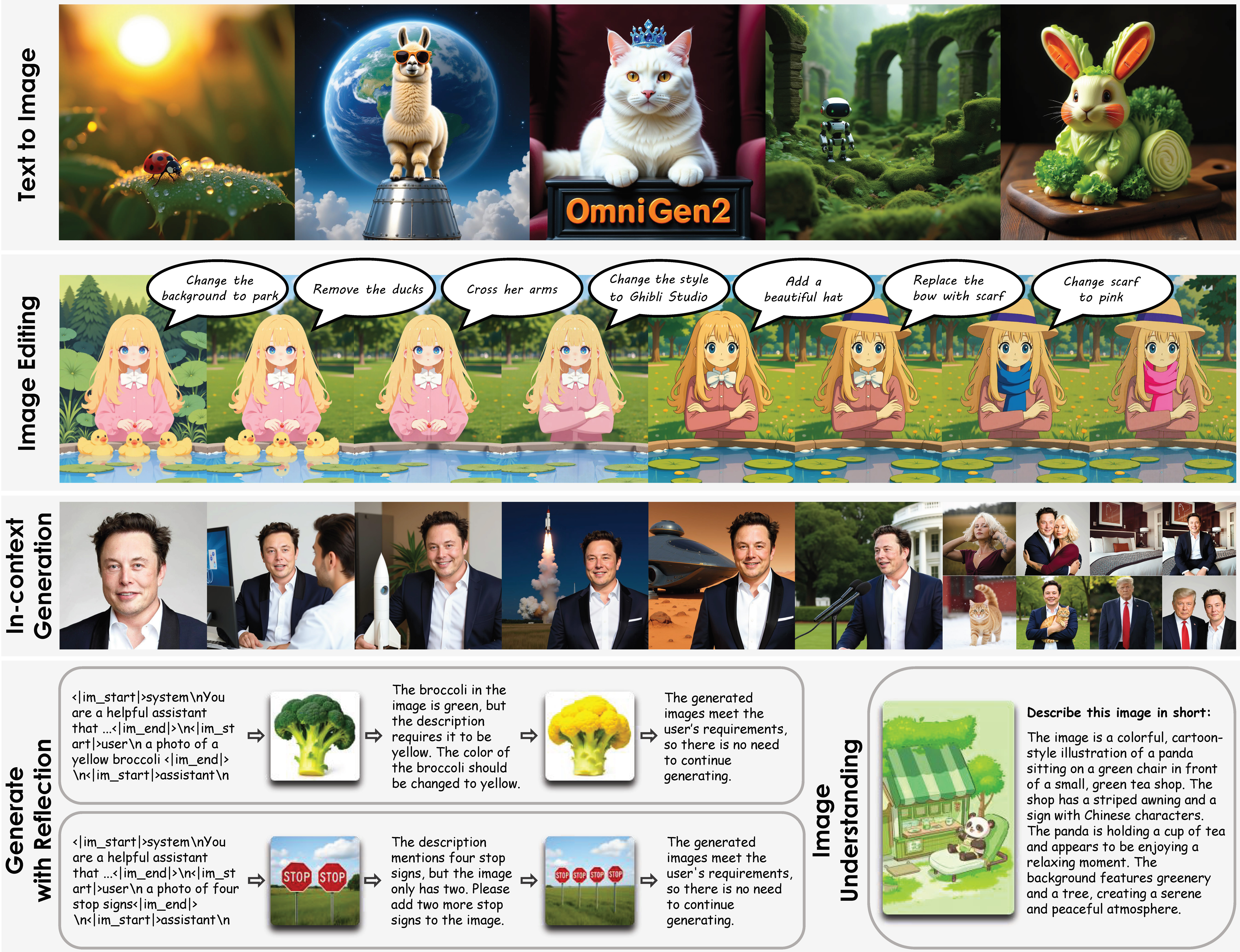

assets/teaser.jpg

0 → 100644

6.87 MB

assets/teaser.png

0 → 100644

4.24 MB

convert_ckpt_to_hf_format.py

0 → 100644