v1.0

1.51 MB

assets/qrcode/discord.png

0 → 100644

20 KB

assets/qrcode/wechat.png

0 → 100644

4.63 KB

assets/qrcode/x.png

0 → 100644

24.1 KB

27.3 KB

File added

blender_addon.py

0 → 100644

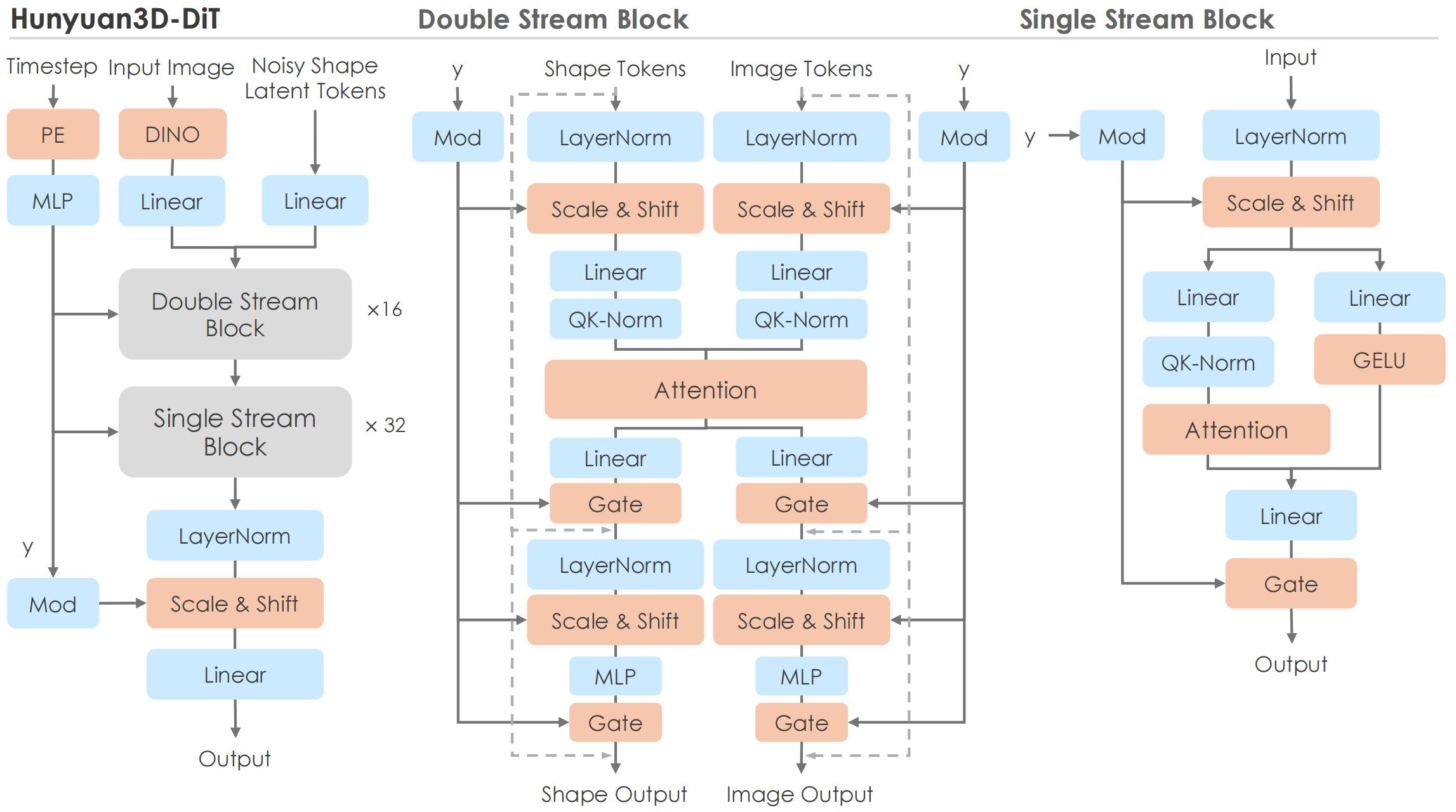

doc/Hunyuan3DDiT.png

0 → 100644

205 KB

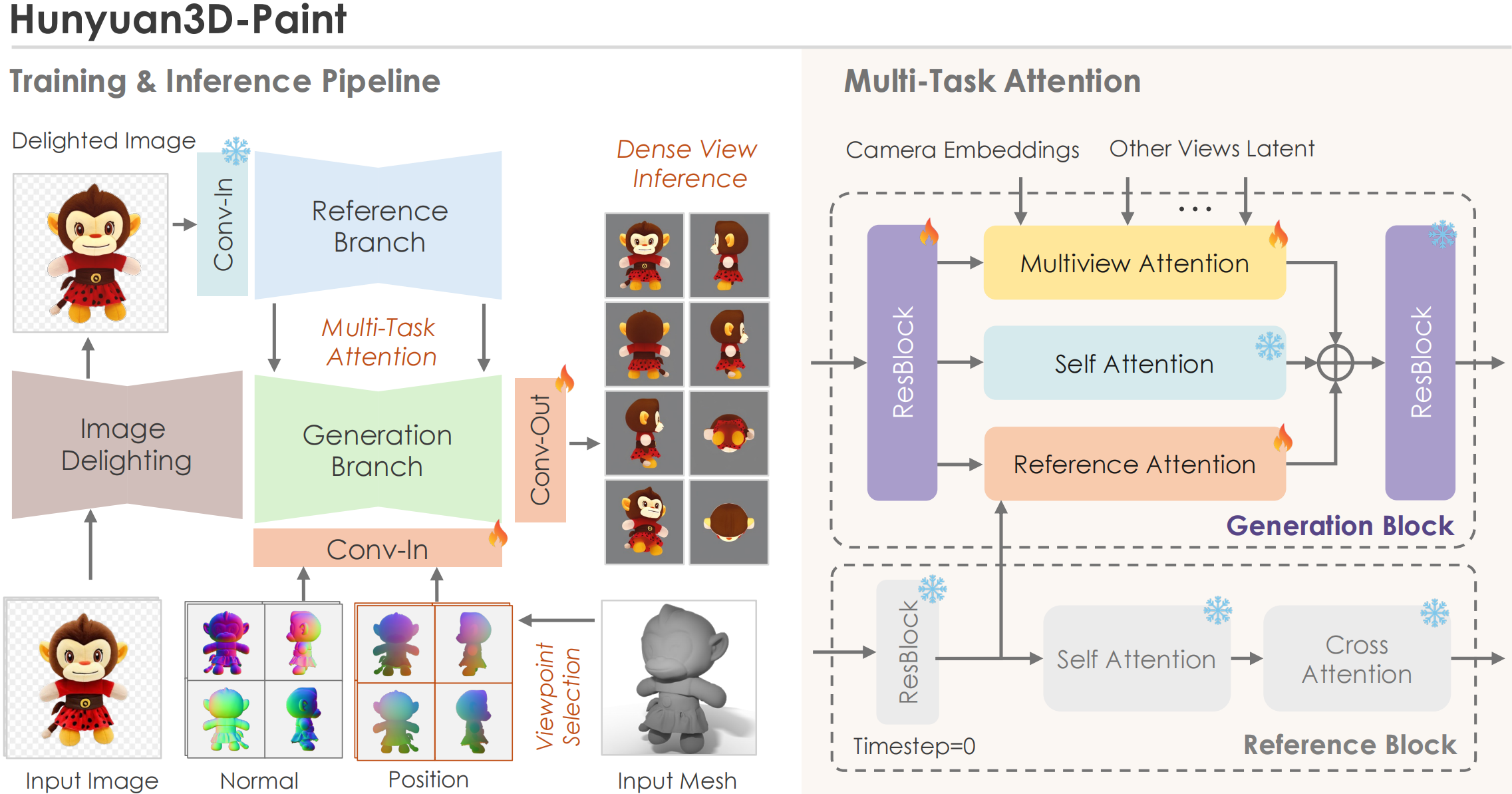

doc/Hunyuan3DPaint.png

0 → 100644

540 KB

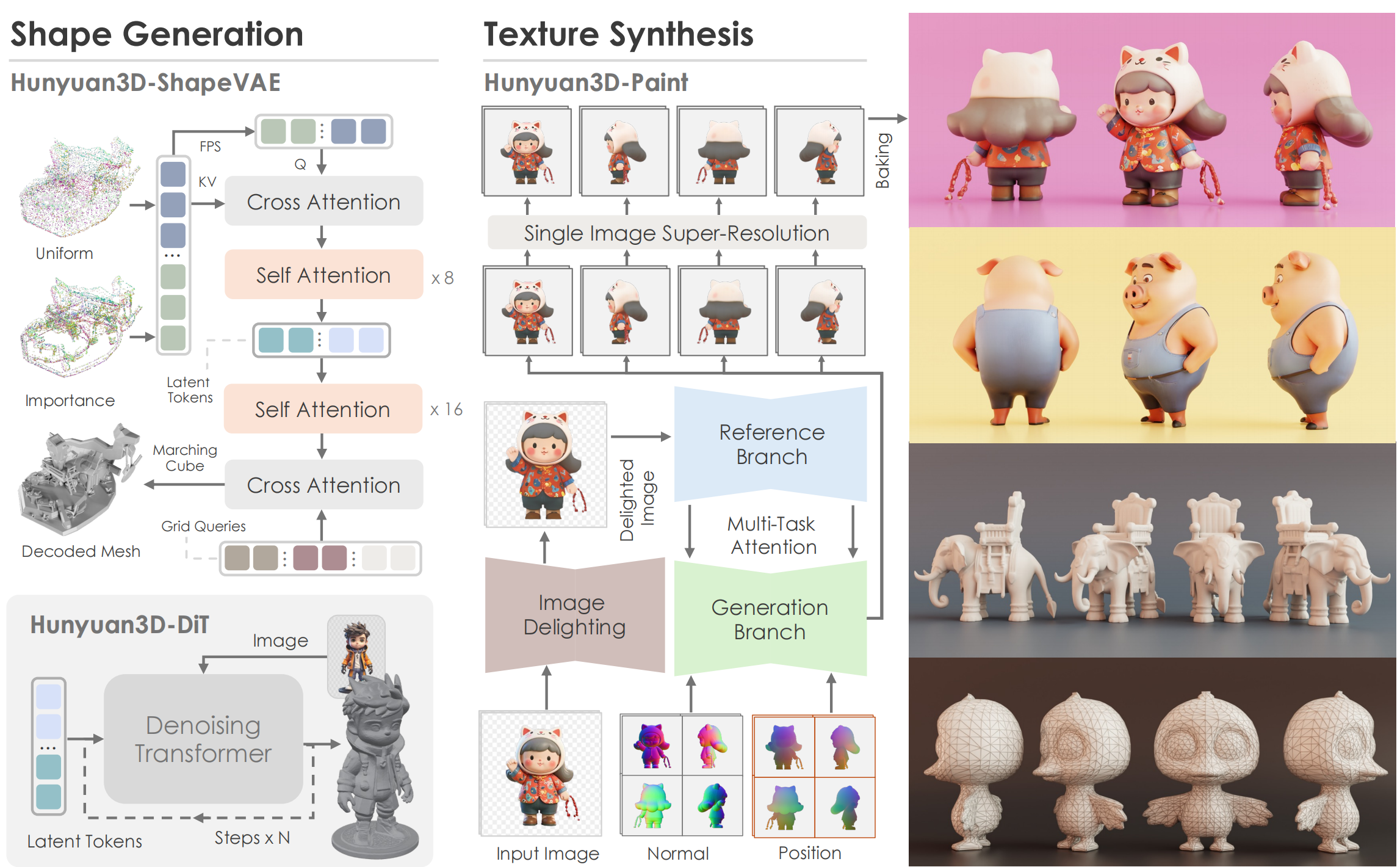

doc/algorithm.png

0 → 100644

1.49 MB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_start.sh

0 → 100644

gradio_app.py

0 → 100644

hy3dgen/__init__.py

0 → 100644

File added