v1.0

Showing

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

File added

README.md

0 → 100644

README_origin.md

0 → 100644

car.jpg

0 → 100644

38.2 KB

doc/Florence-2.png

0 → 100644

810 KB

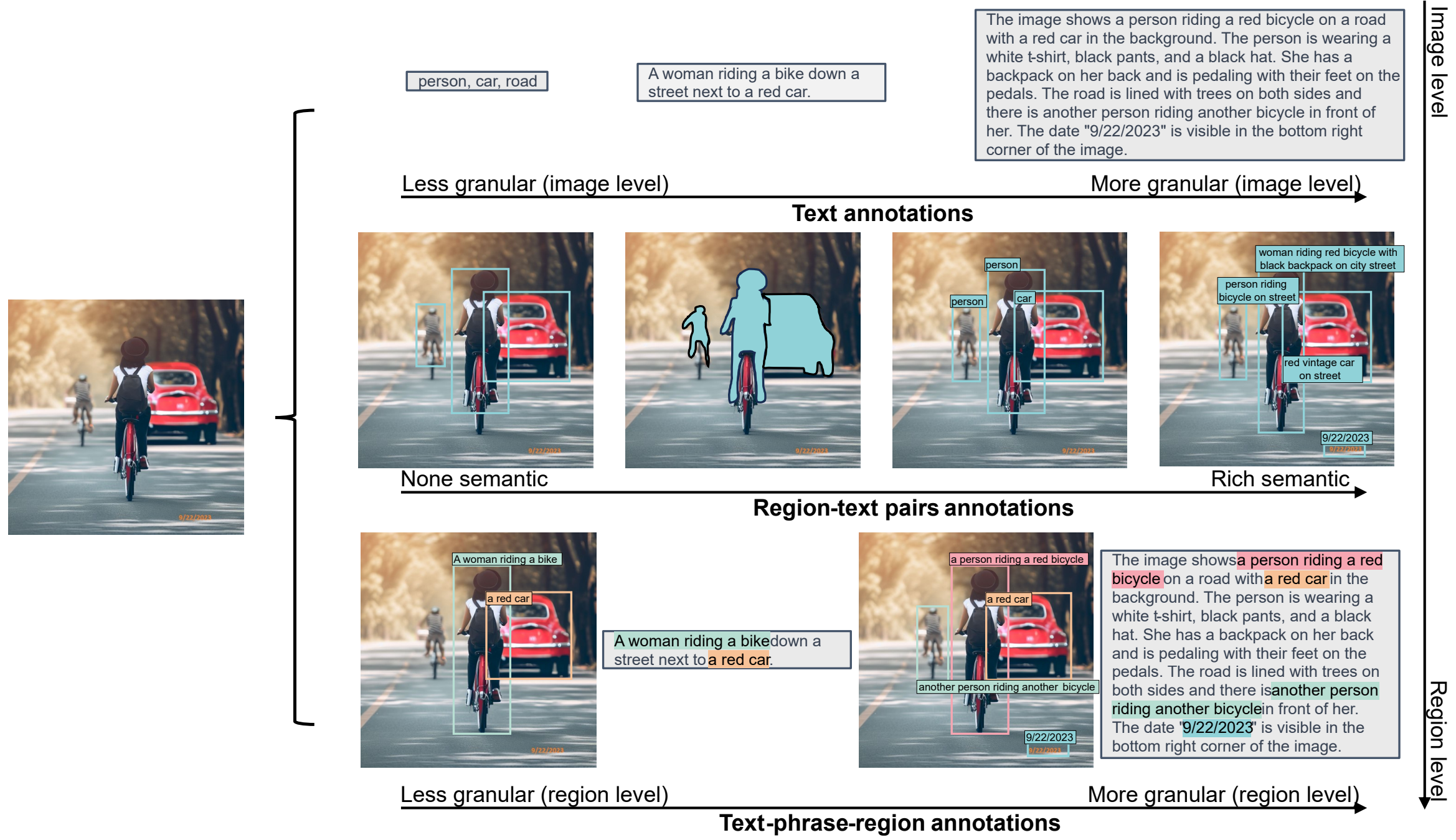

doc/label.png

0 → 100644

1.29 MB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

icon.png

0 → 100644

50.3 KB

img/annotation.jpeg

0 → 100644

58.2 KB

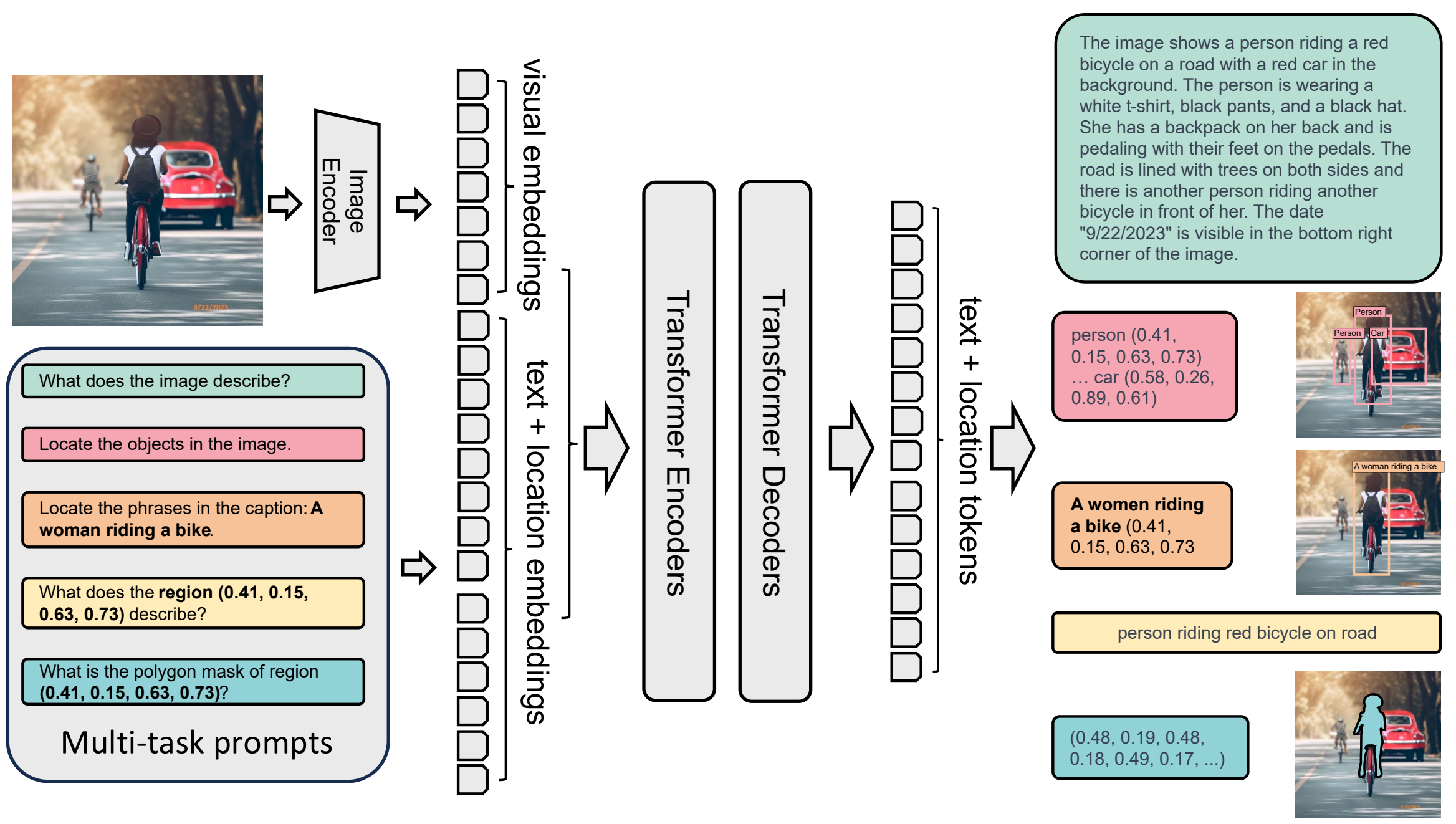

img/architecture.png

0 → 100644

428 KB

img/card.png

0 → 100644

51.8 KB

img/dataset.jpeg

0 → 100644

27.3 KB

img/representation.jpeg

0 → 100644

35.1 KB

infer.py

0 → 100644