Merge pull request #226 from jon-tow/evaluator-description-option

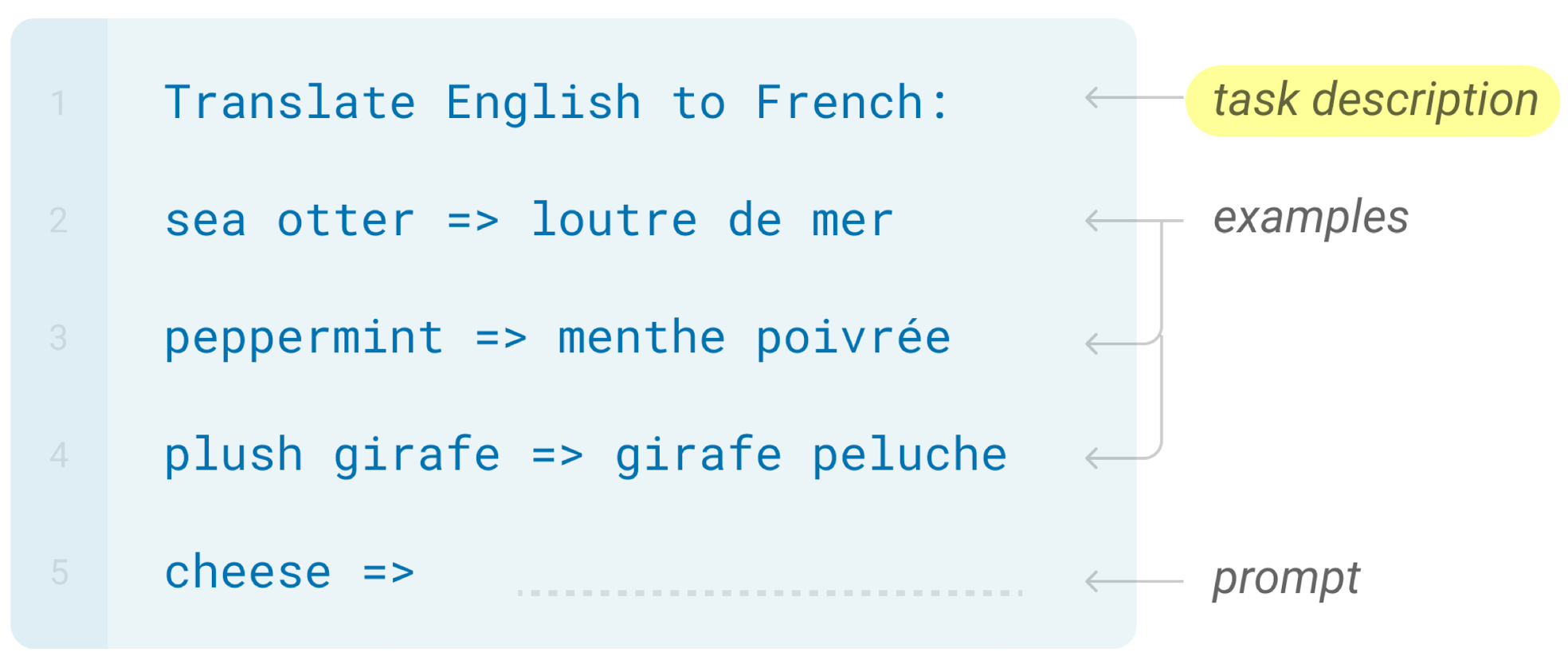

Replace the `fewshot_description` API with a `description_dict`-based interface
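One way to read this change: instead of a single `fewshot_description` string, a per-task description is looked up by task name. The sketch below assumes `description_dict` maps task names to description strings that are prepended to the few-shot context. The `build_context` helper, the `"copa"` entry, and the `"\n\n"` separator are hypothetical illustrations, not the harness API.

```python
from typing import Dict, List, Optional

# Hypothetical mapping: task name -> natural-language task description.
# The task name and description text are illustrative assumptions.
description_dict: Dict[str, str] = {
    "copa": "Given a premise, choose the more plausible cause or effect.",
}


def build_context(
    task_name: str,
    fewshot_examples: List[str],
    query: str,
    description_dict: Optional[Dict[str, str]] = None,
) -> str:
    """Assemble a prompt: optional task description, few-shot examples, then the query.

    Hypothetical helper for illustration only; the "\n\n" separator is an assumption.
    """
    description = ""
    # Only tasks that appear in the dict get a description prepended.
    if description_dict and task_name in description_dict:
        description = description_dict[task_name] + "\n\n"
    # Each few-shot example is followed by a blank line before the next item.
    examples = "".join(ex + "\n\n" for ex in fewshot_examples)
    return description + examples + query
```

With this design, tasks missing from the dict (or calls with no dict at all) fall back to a prompt with no description, so the description becomes an opt-in, per-task setting rather than a global one.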

`docs/description_guide.md` (new file, mode 100644)