First add in 0524

.gitignore

0 → 100644

CODE_OF_CONDUCT.md

0 → 100644

CONTRIBUTING.md

0 → 100644

README.md

0 → 100644

UPDATES.md

0 → 100644

dev_requirements.txt

0 → 100644

vllm

pytest-mock

auditnlg

\ No newline at end of file

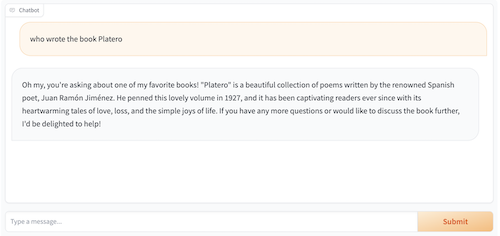

docs/FAQ.md

0 → 100644

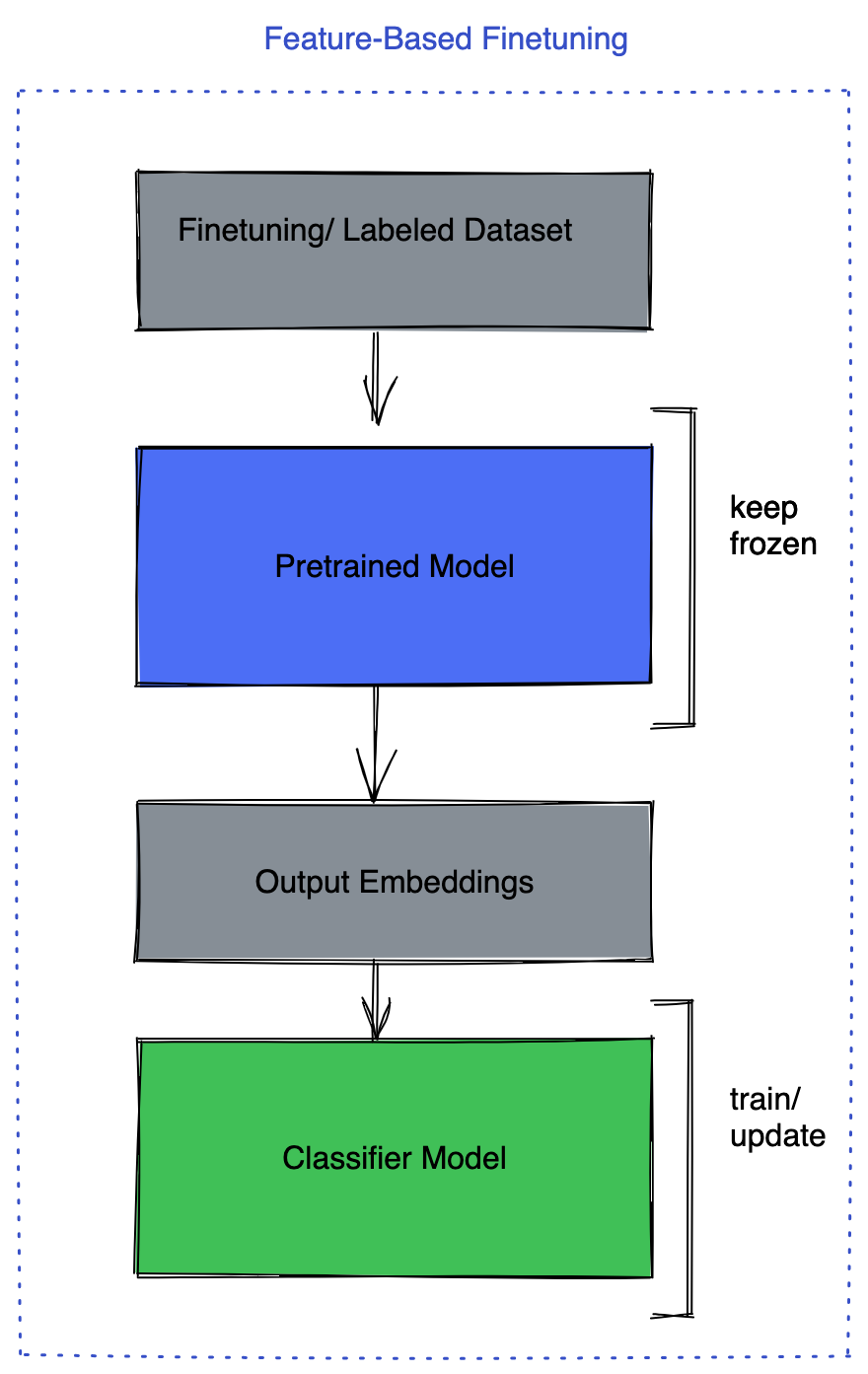

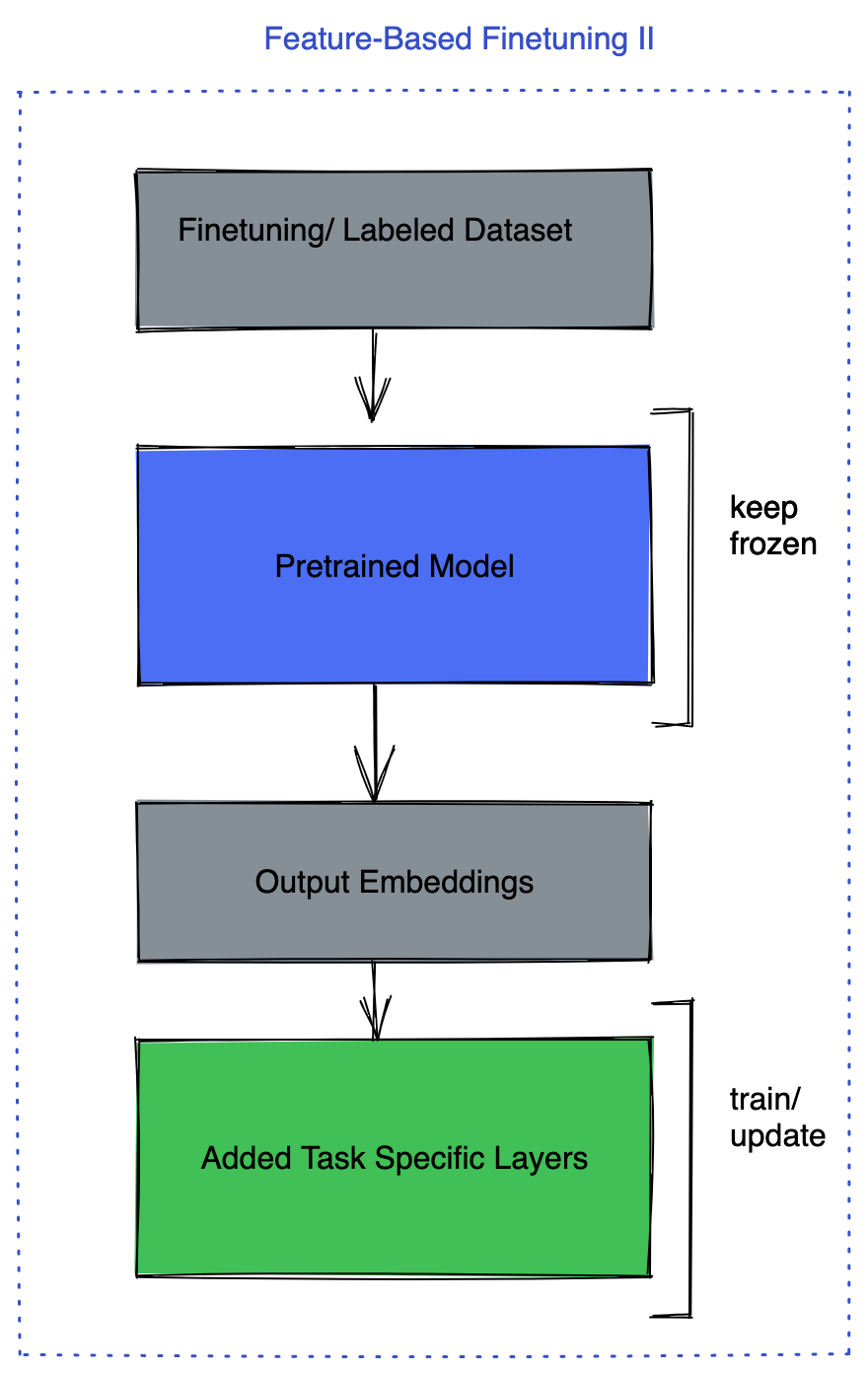

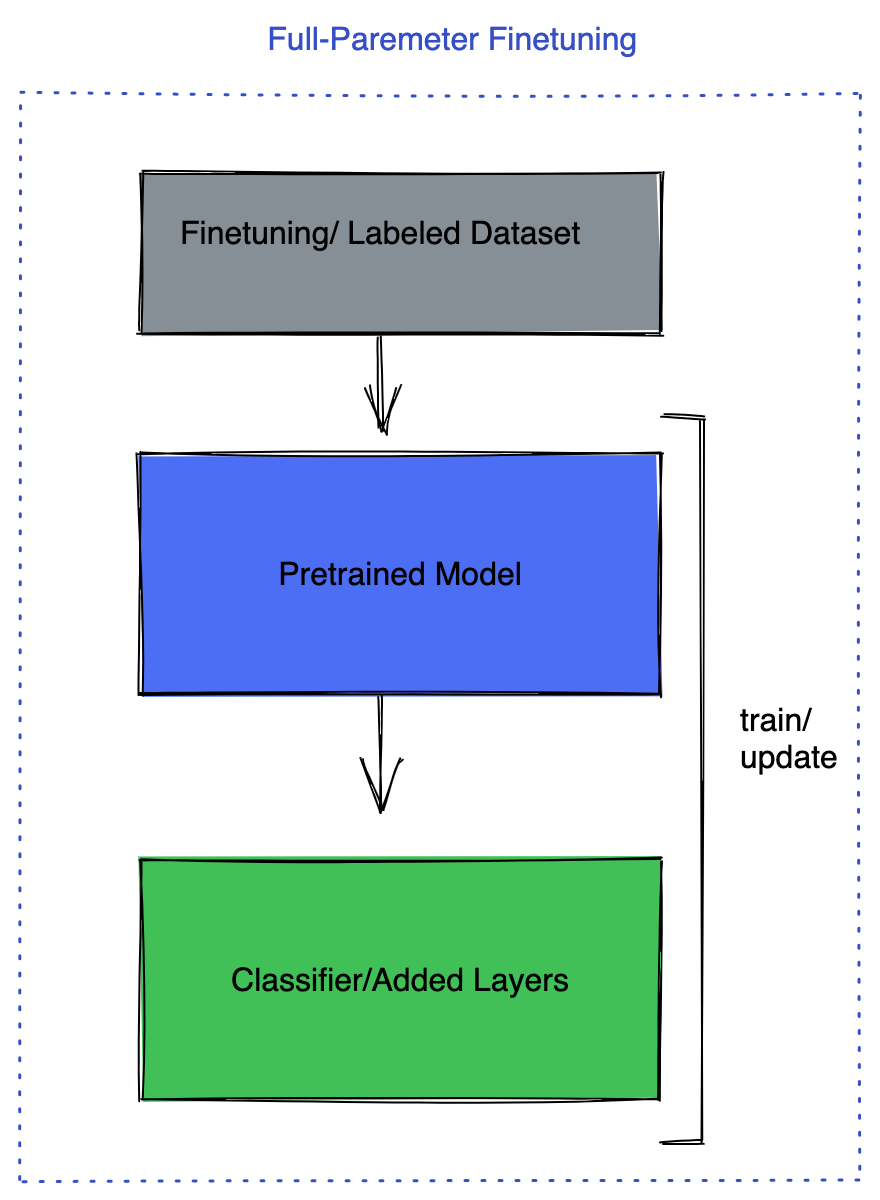

docs/LLM_finetuning.md

0 → 100644

docs/multi_gpu.md

0 → 100644