Commit aa9af7fd12da3fe58d17fb758851eb8022af290f

feat: initial commit

Files added in this commit:

.gitignore: new file, mode 0 → 100644

Dockerfile: new file, mode 0 → 100644

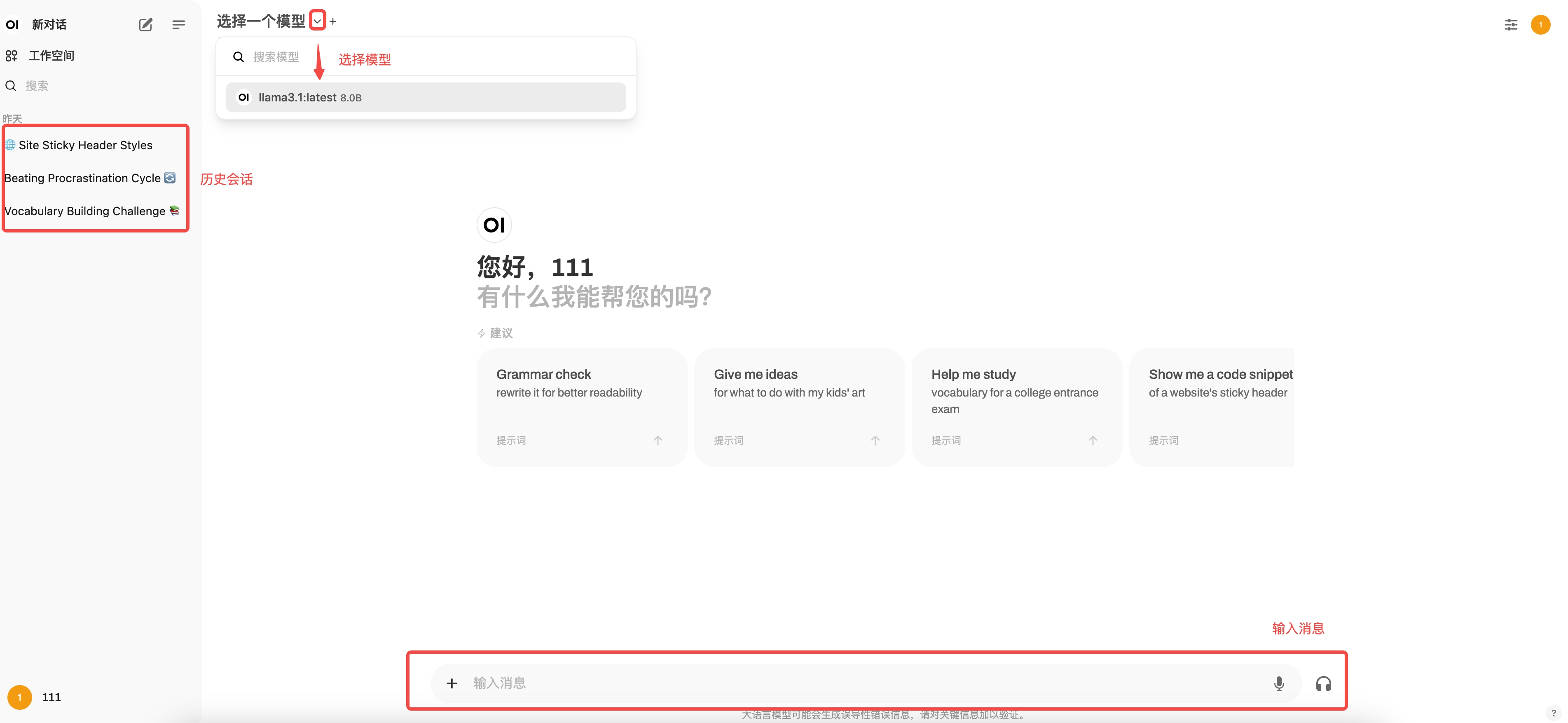

assets/示例.jpeg ("example" image): new file, mode 0 → 100644, 351 KB

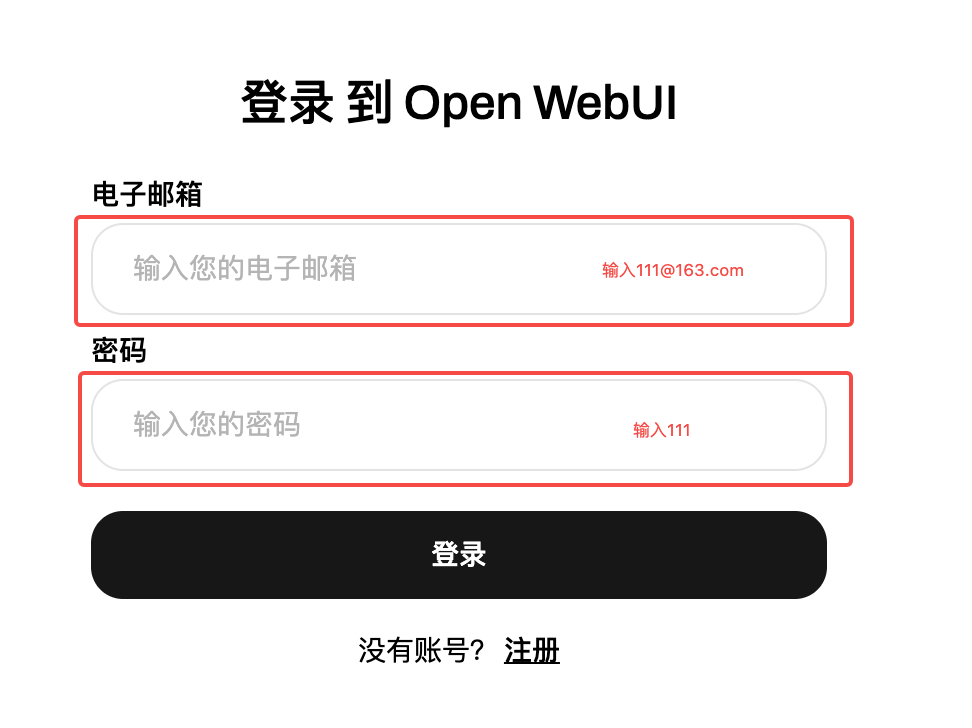

assets/账号.png ("account" image): new file, mode 0 → 100644, 55.1 KB

install.sh: new file, mode 0 → 100644

run.py: new file, mode 0 → 100644

start.sh: new file, mode 0 → 100644

启动器.ipynb ("launcher" notebook): new file, mode 0 → 100644