Initial commit

parents

Showing

docs/img/spot_texw.png

0 → 100644

13.3 KB

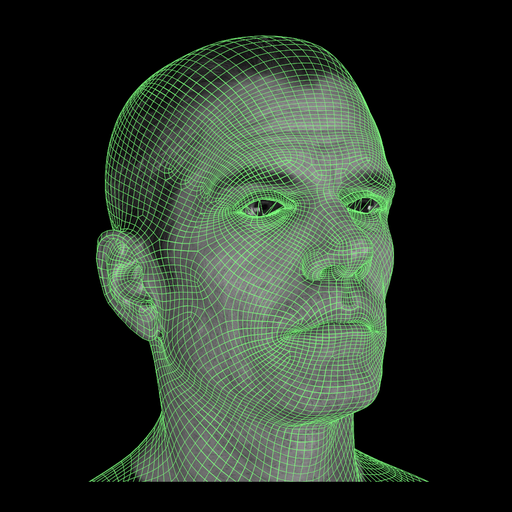

docs/img/spot_tri.png

0 → 100644

24.7 KB

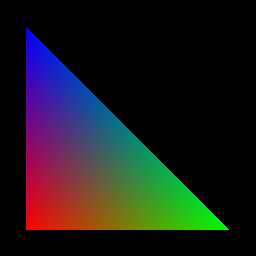

docs/img/spot_uv.png

0 → 100644

37.9 KB

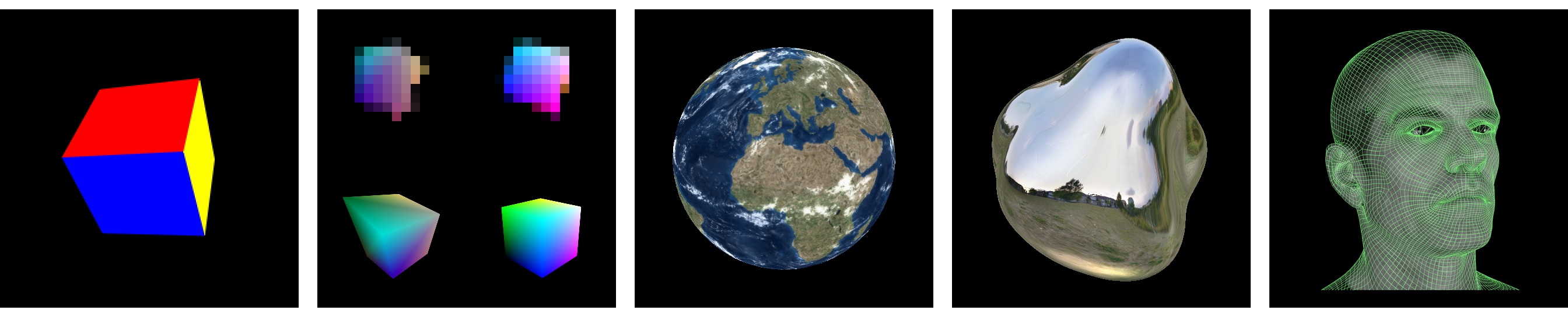

docs/img/teaser.png

0 → 100644

730 KB

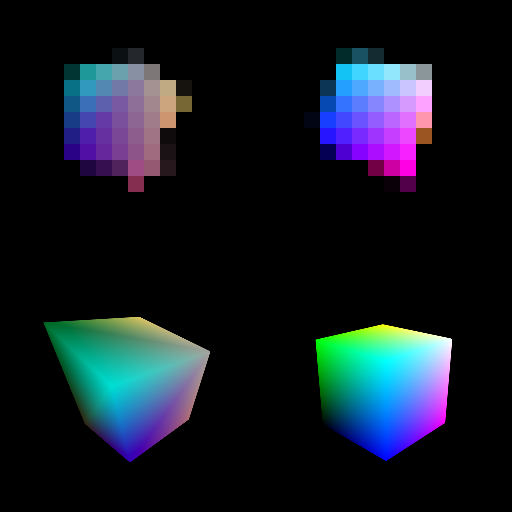

docs/img/teaser1.png

0 → 100644

19 KB

docs/img/teaser2.png

0 → 100644

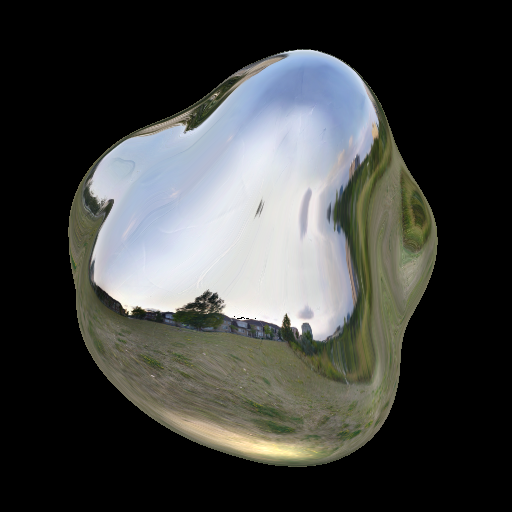

230 KB

docs/img/teaser3.png

0 → 100644

176 KB

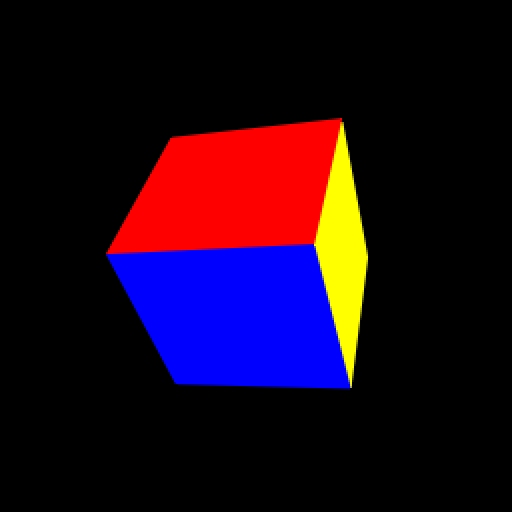

docs/img/teaser4.png

0 → 100644

3.9 KB

docs/img/teaser5.png

0 → 100644

263 KB

docs/img/tri.png

0 → 100644

2.37 KB

docs/index.html

0 → 100644

This diff is collapsed.

nvdiffrast/__init__.py

0 → 100644

nvdiffrast/common/common.cpp

0 → 100644

nvdiffrast/common/common.h

0 → 100644

This diff is collapsed.

nvdiffrast/common/glutil.inl

0 → 100644