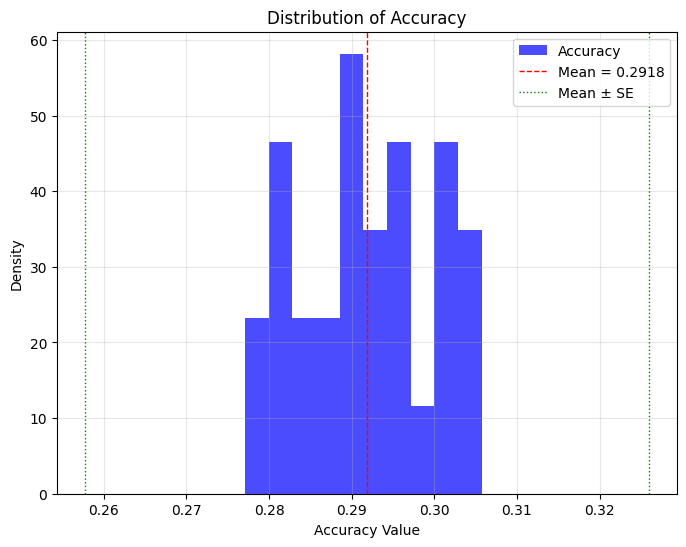

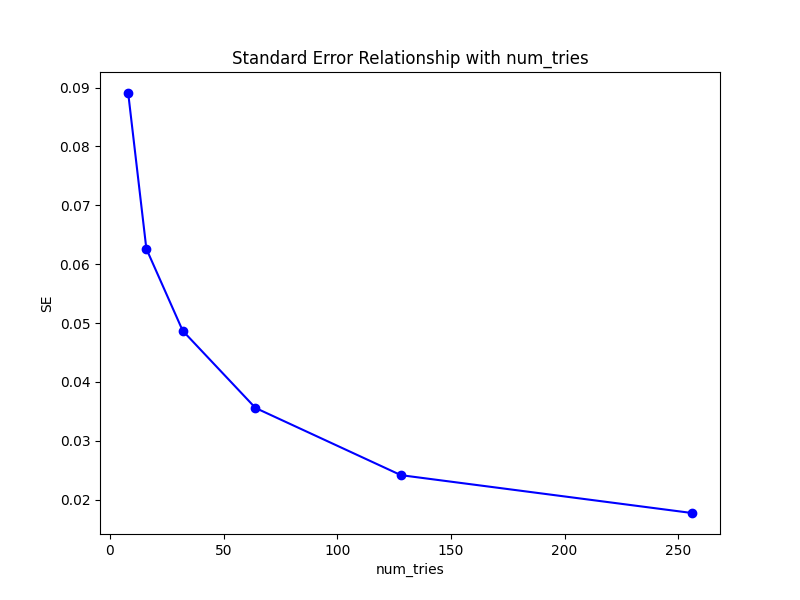

Benchmark: Statistical Analysis of the Output Stability of the Deepseek Model (#4202)

Co-authored-by: Chayenne <zhaochen20@outlook.com>

