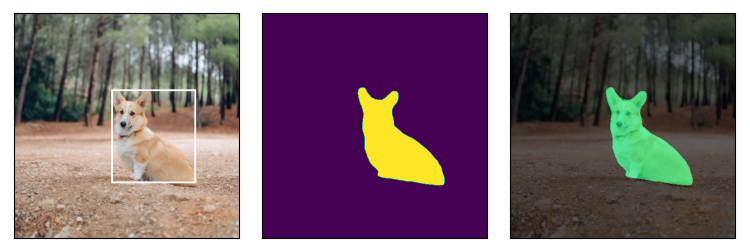

Add gallery example for drawing keypoints (#4892)

* Start writing gallery example
* Remove the child image fix implementation add code
* add docs
* Apply suggestions from code review

Co-authored-by: Vasilis Vryniotis <datumbox@users.noreply.github.com>

* address review update thumbnail

Co-authored-by: Philip Meier <github.pmeier@posteo.de>
Co-authored-by: Vasilis Vryniotis <datumbox@users.noreply.github.com>

gallery/assets/person1.jpg (new file, mode 0 → 100644)

68.5 KB

187 KB

293 KB