feat: upgrade to sdk v1 latest version

* 70b2701 on master

Signed-off-by: huteng.ht <huteng.ht@bytedance.com>

huteng.ht <huteng.ht@bytedance.com>

Changed files:

README.zh.md

0 → 100644

docs/api.md

deleted

100644 → 0

docs/dynamic_load.md

deleted

100644 → 0

docs/encrypt_model.md

0 → 100644

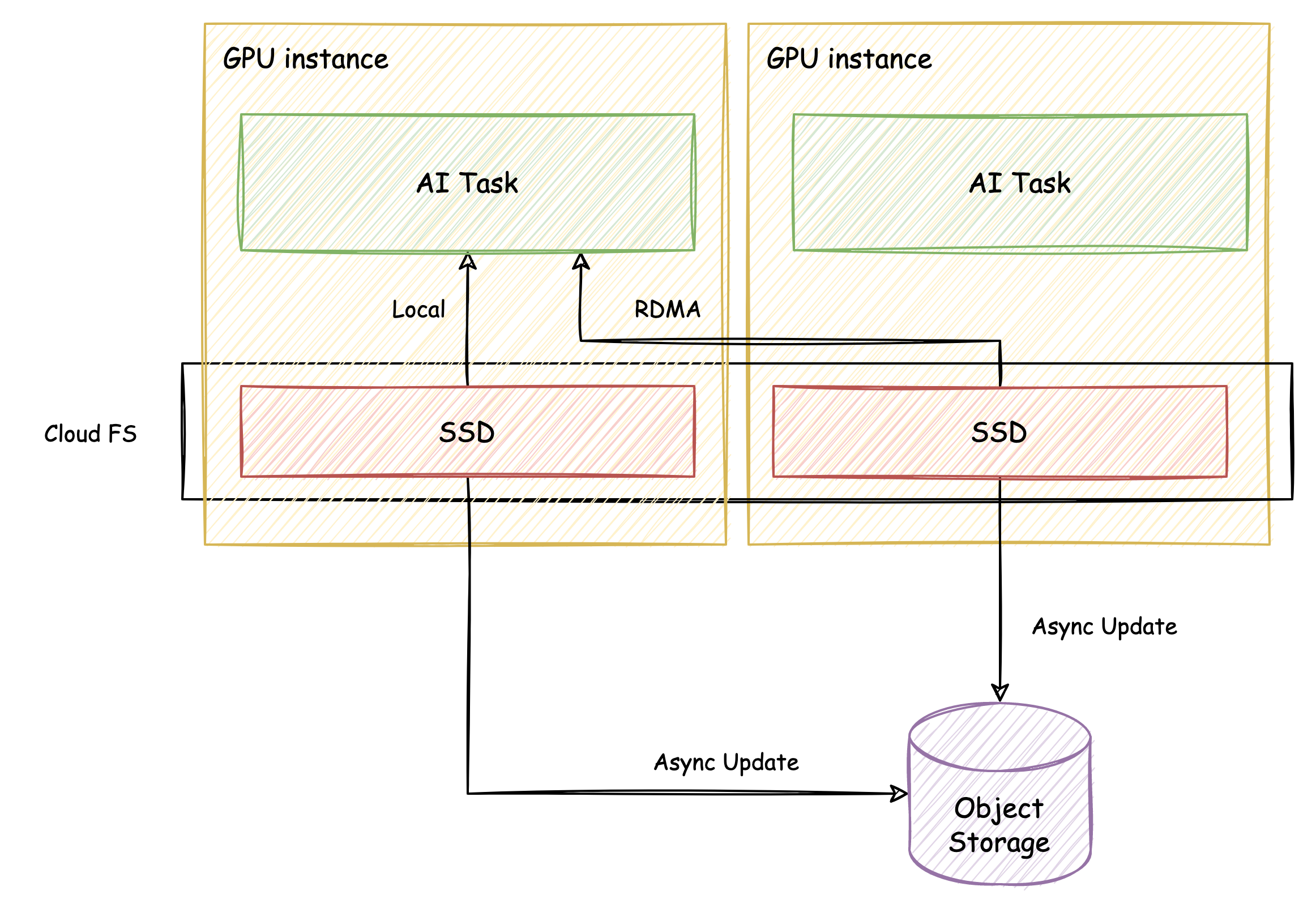

docs/imgs/SFCS.png

0 → 100644

1.41 MB

docs/pin_mem.md

0 → 100644

docs/release.md

deleted

100644 → 0

docs/sfcs_support.md

deleted

100644 → 0
