[V0.4.1 Release] Merge v0.4.1 branch back to master (#509)

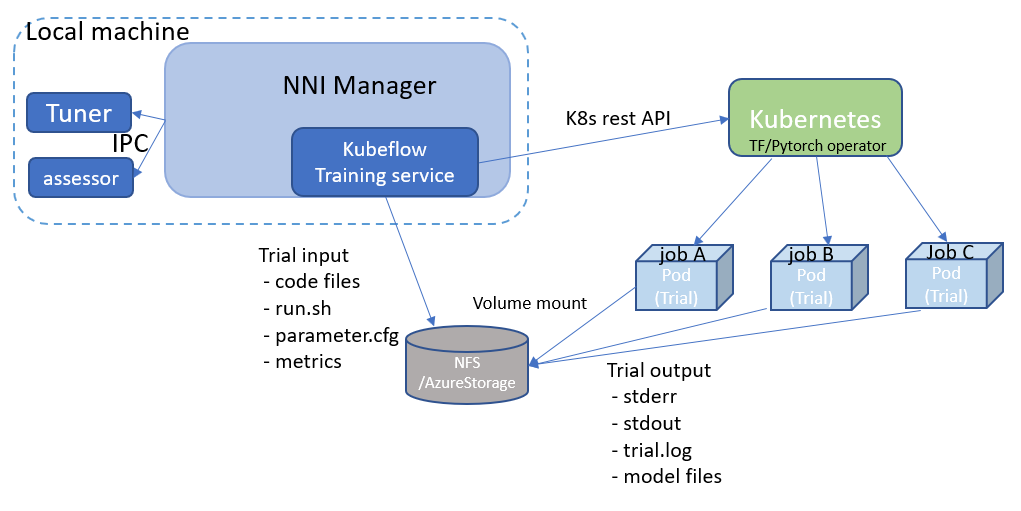

* Update nnictl.py: fix `nnictl --version` not working when NNI is installed via pip
* Update kubeflow training service document (#494)
  * Remove kubectl-related documentation; add messages for kubeconfig
  * Add design section for kubeflow training service
  * Move the image files for the PAI training service doc into the img folder
* Update KubeflowMode.md (#498): small terminology changes
* [V0.4.1 bug fix] Cannot run kubeflow training service due to trial_keeper change (#503)
  * Update kubeflow training service document
  * Fix a bug where the kubeflow trial job cannot run
* Upgrade version number (#499)
* [V0.4.1 bug fix] Support reading K8S config from the KUBECONFIG environment variable (#507)
  * Add KUBECONFIG environment variable support
  * In main.ts, rethrow the caught error so that nnictl can show it in stderr
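The KUBECONFIG change (#507) follows the usual Kubernetes convention: use the file named by the `KUBECONFIG` environment variable if it is set, otherwise fall back to `~/.kube/config`. The sketch below illustrates that lookup only. It is not NNI's actual code, and `resolveKubeConfigPath` is a hypothetical name. `KUBECONFIG` may list several files separated by the platform path delimiter; kubectl merges them, but this sketch simply takes the first non-empty entry.

```typescript
import * as os from "os";
import * as path from "path";

// Hypothetical helper, not NNI's API: choose which kubeconfig file to read.
// 1. If KUBECONFIG is set, use its first non-empty entry (kubectl would merge
//    all entries; this sketch deliberately does not).
// 2. Otherwise fall back to the conventional ~/.kube/config.
function resolveKubeConfigPath(
    env: Record<string, string | undefined> = process.env,
): string {
    const fromEnv = env.KUBECONFIG;
    if (fromEnv !== undefined) {
        const first = fromEnv
            .split(path.delimiter)
            .map((p) => p.trim())
            .find((p) => p.length > 0);
        if (first !== undefined) {
            return first;
        }
    }
    return path.join(os.homedir(), ".kube", "config");
}

// Usage: print the path that would be read in the current environment.
console.log(resolveKubeConfigPath());
```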
