Merge pull request #23 from microsoft/master

pull code


docs/en_US/GPTuner.md

0 → 100644

docs/img/release_icon.png

0 → 100644

1.97 KB

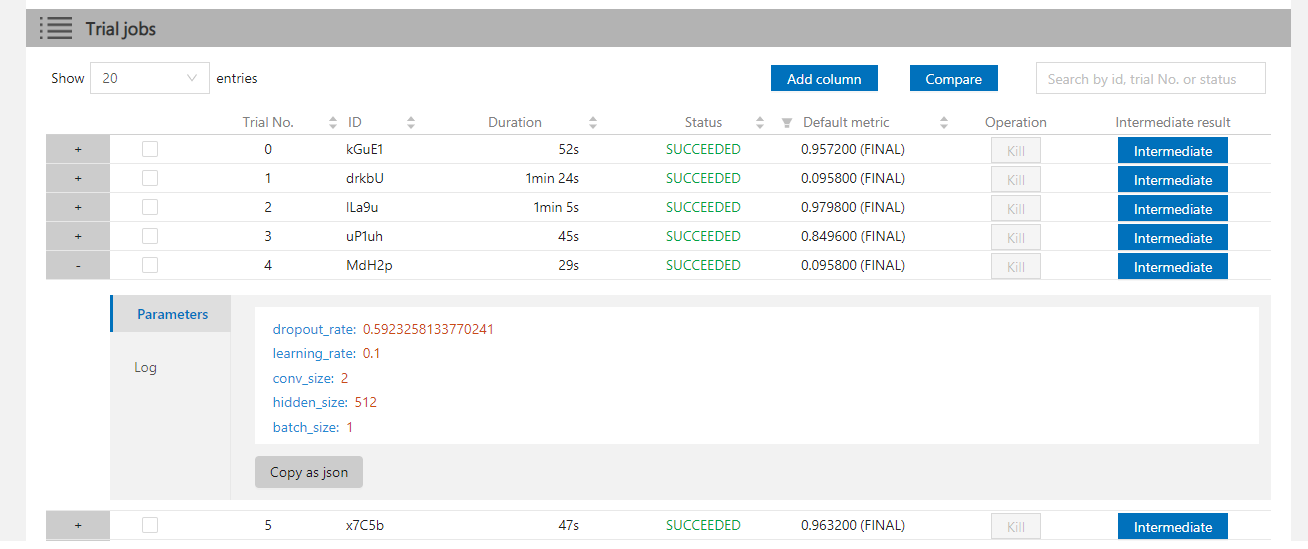

| W: | H:

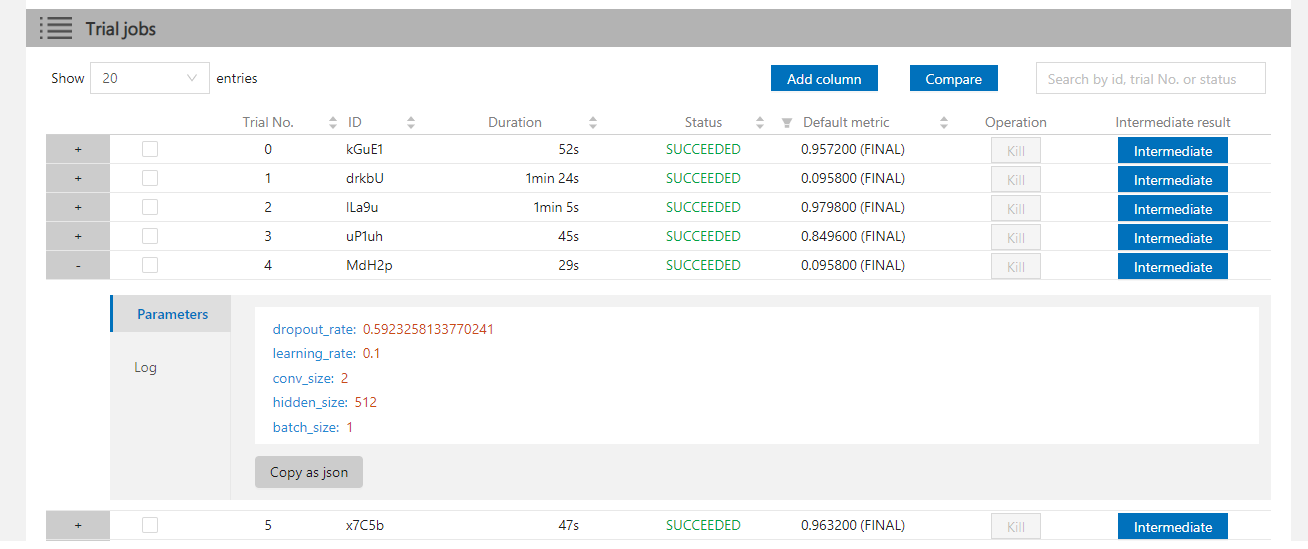

| W: | H:

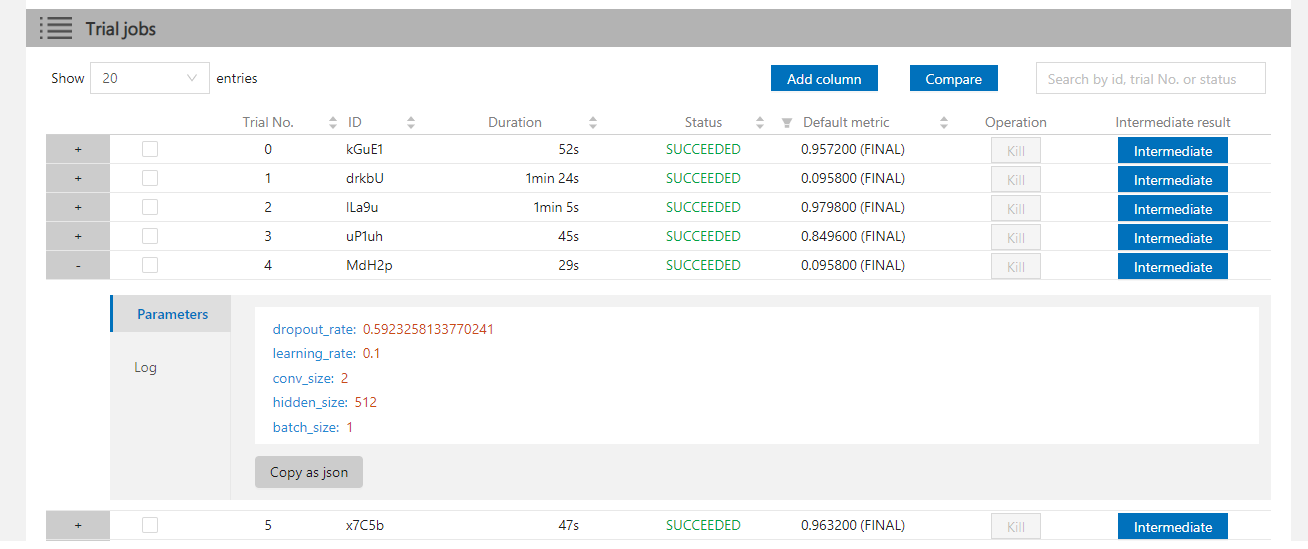

| W: | H:

| W: | H:

| W: | H:

| W: | H:

docs/static/css/custom.css

0 → 100644