Merge pull request #155 from Microsoft/master

merge master

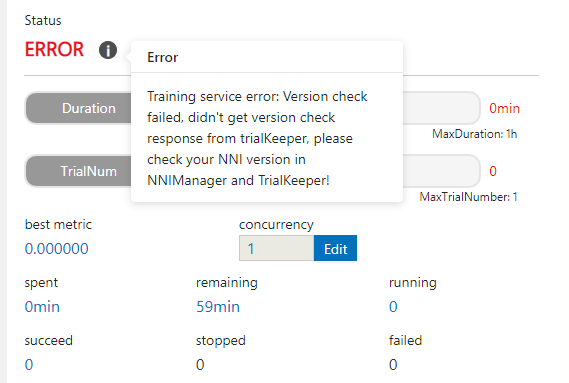

docs/img/version_check.png

0 → 100644

19.2 KB

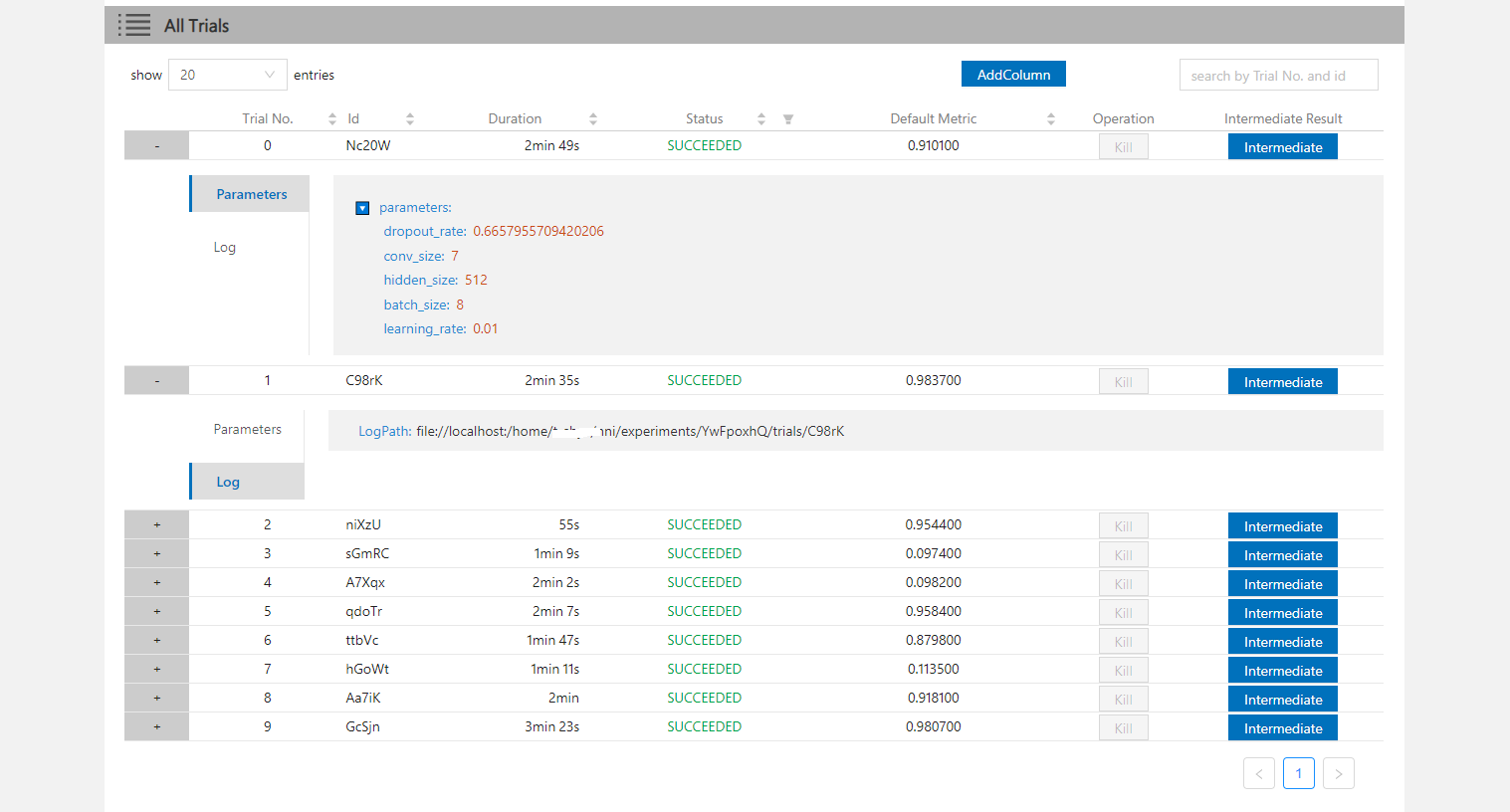

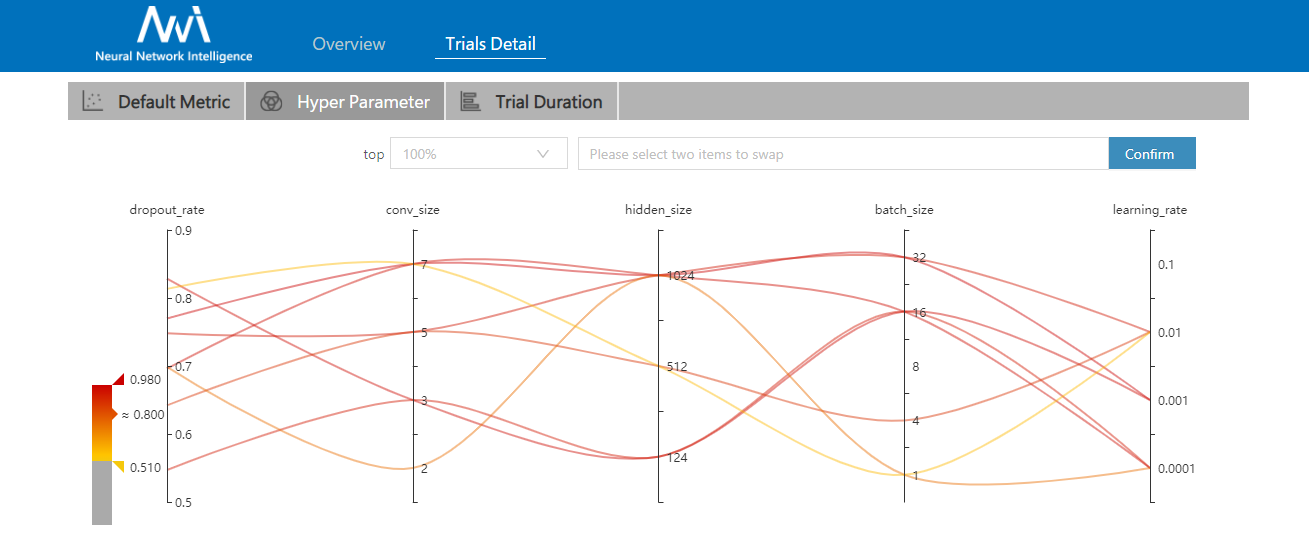

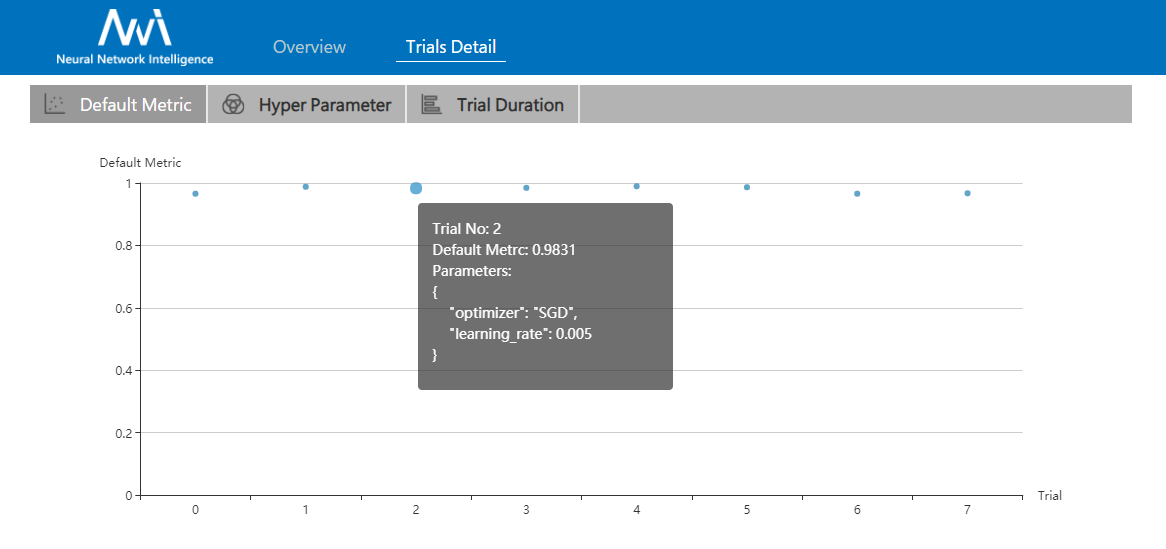

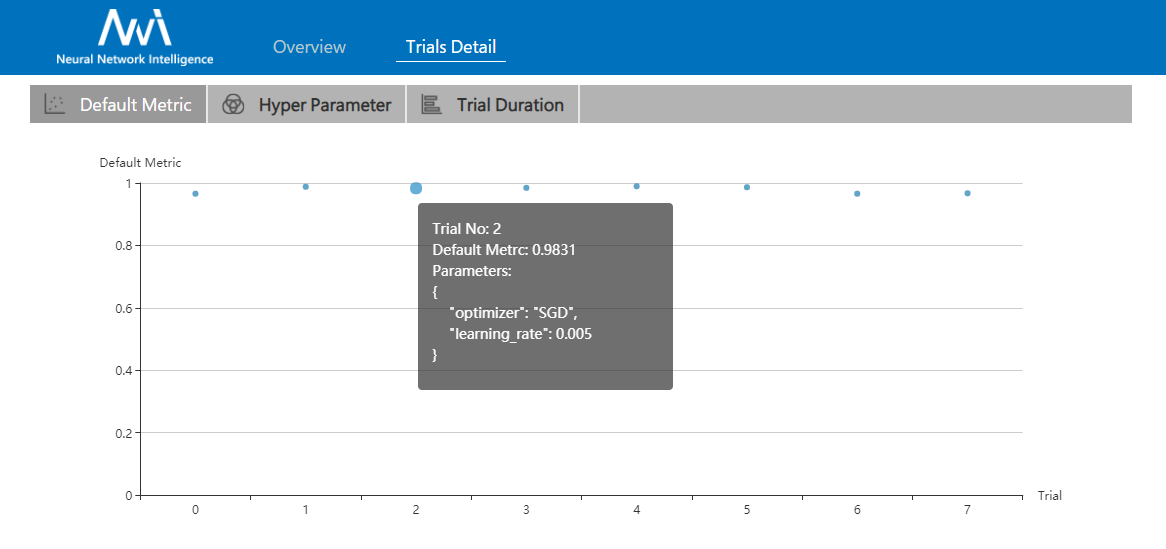

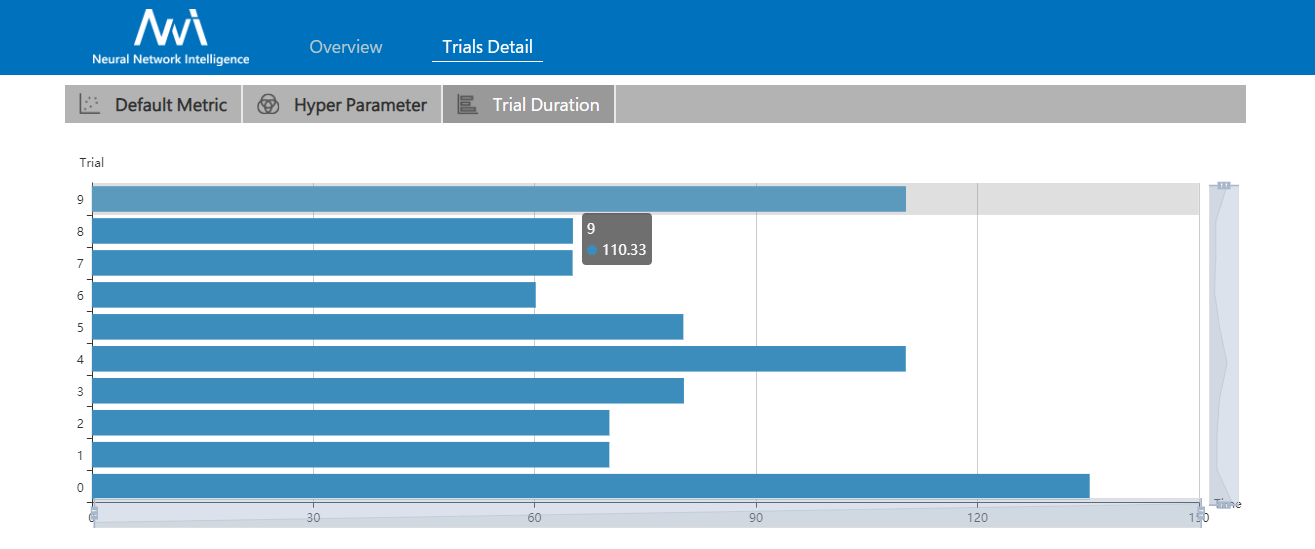

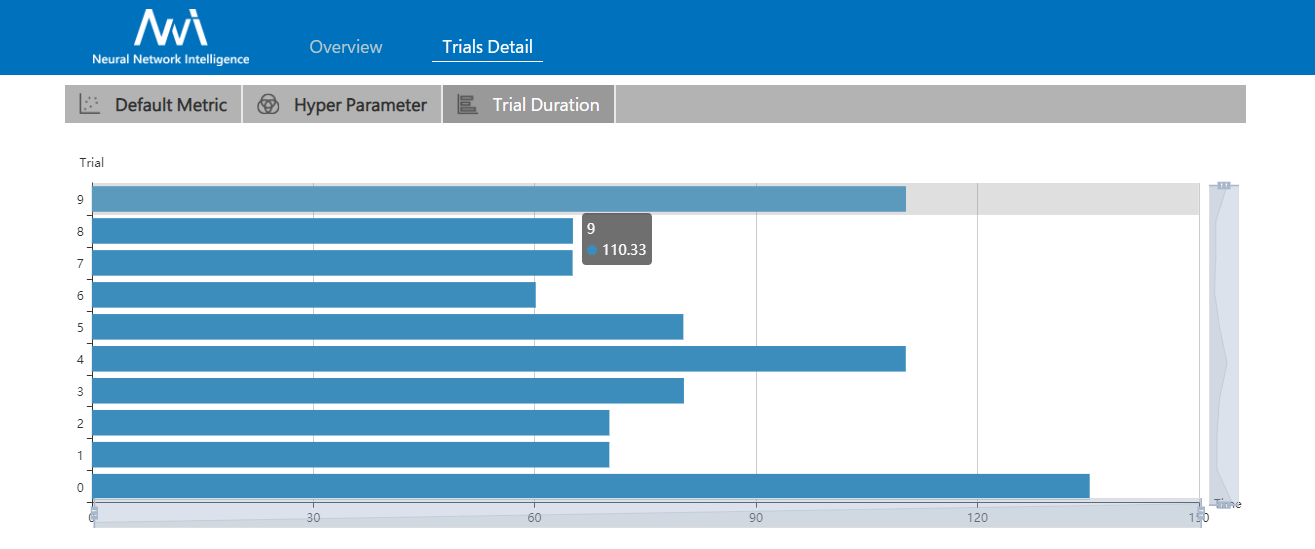

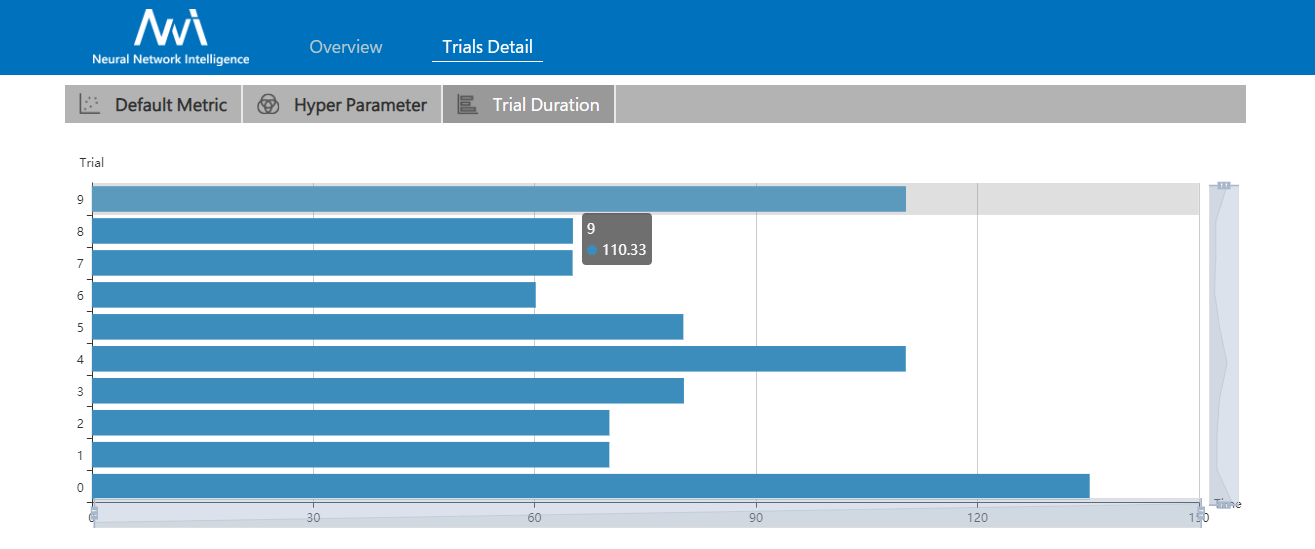

28.8 KB

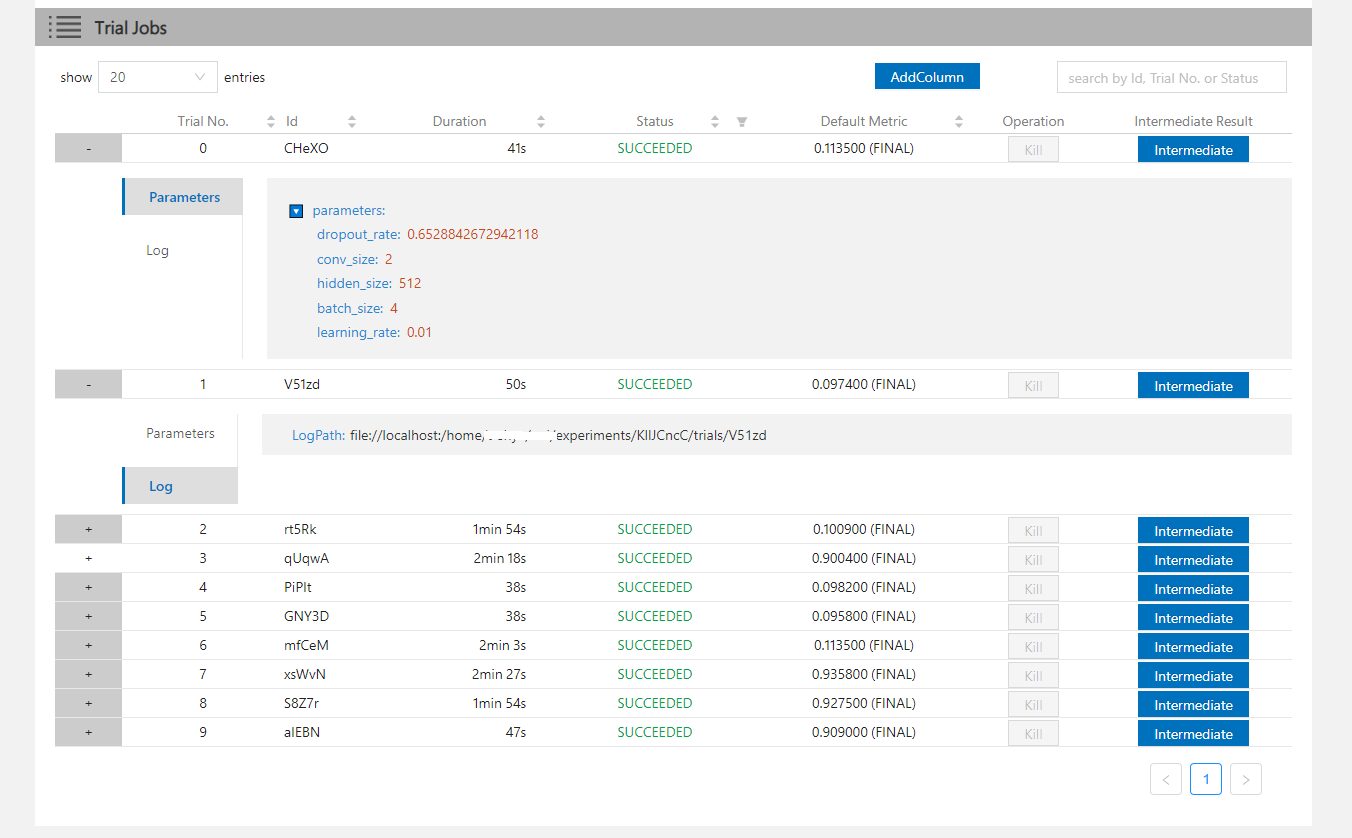

26.8 KB

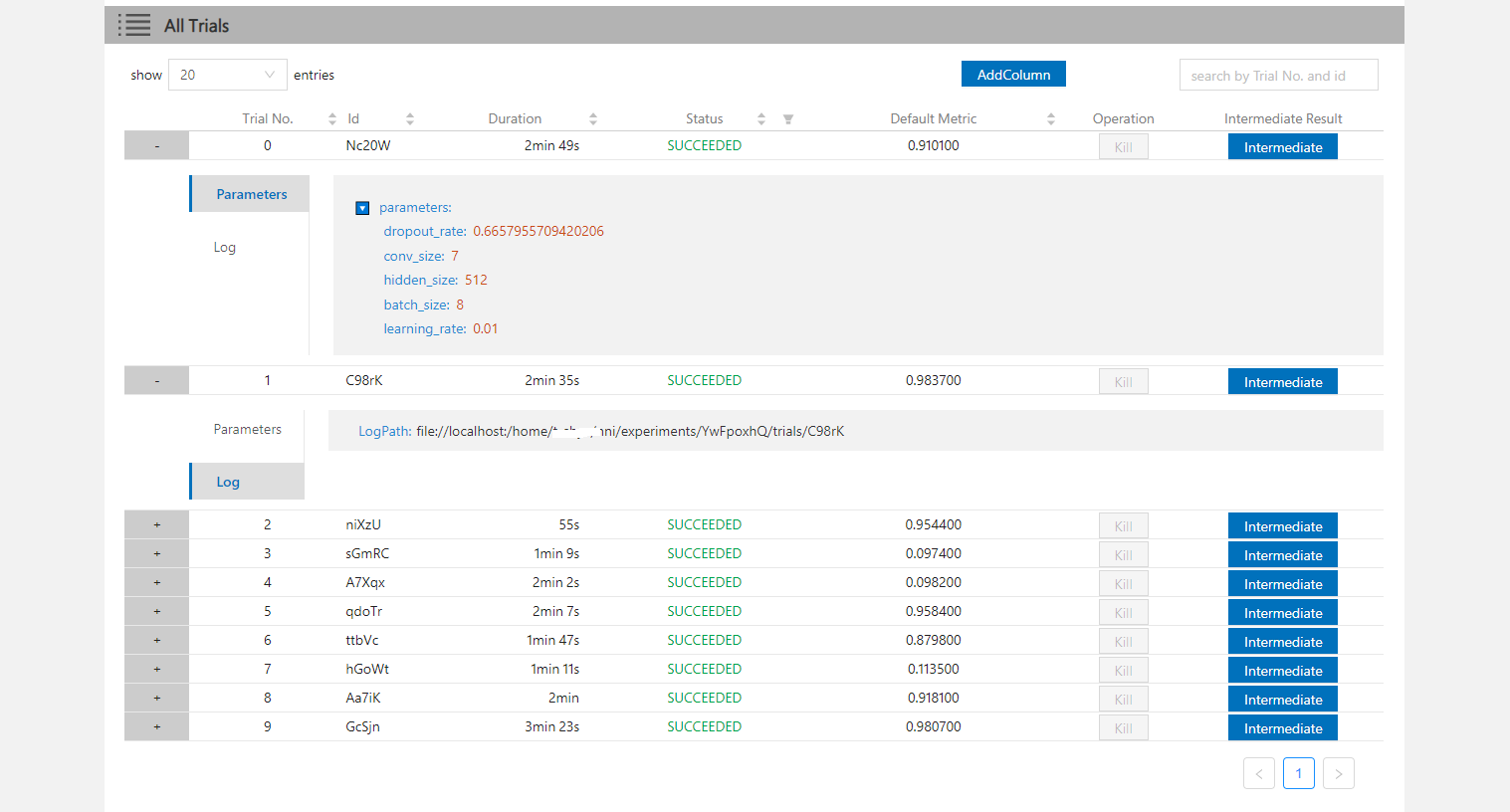

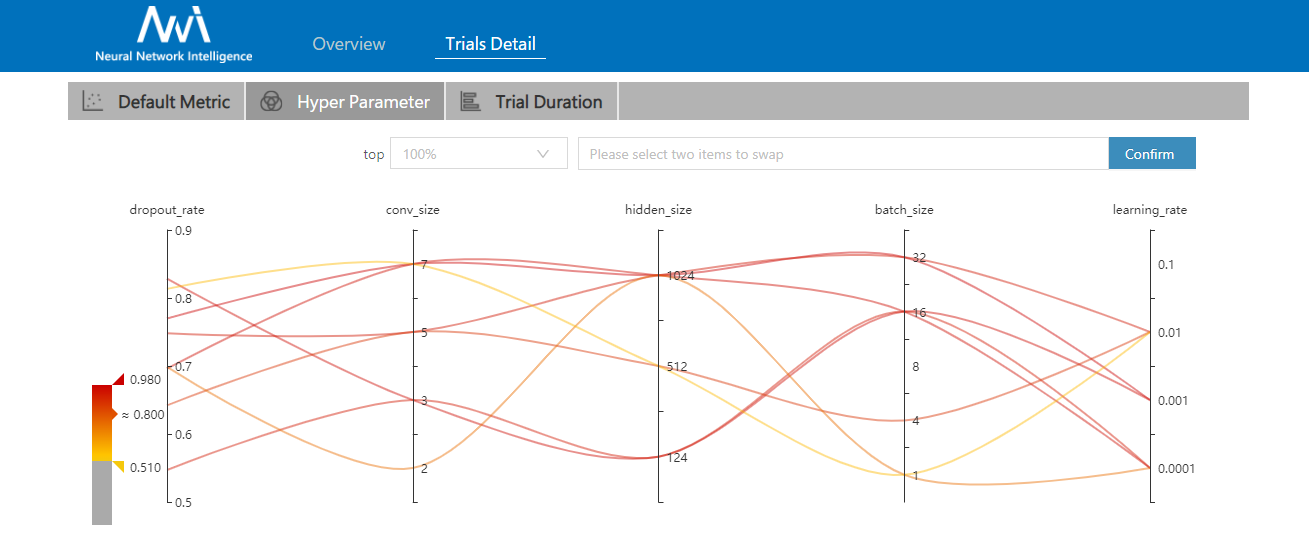

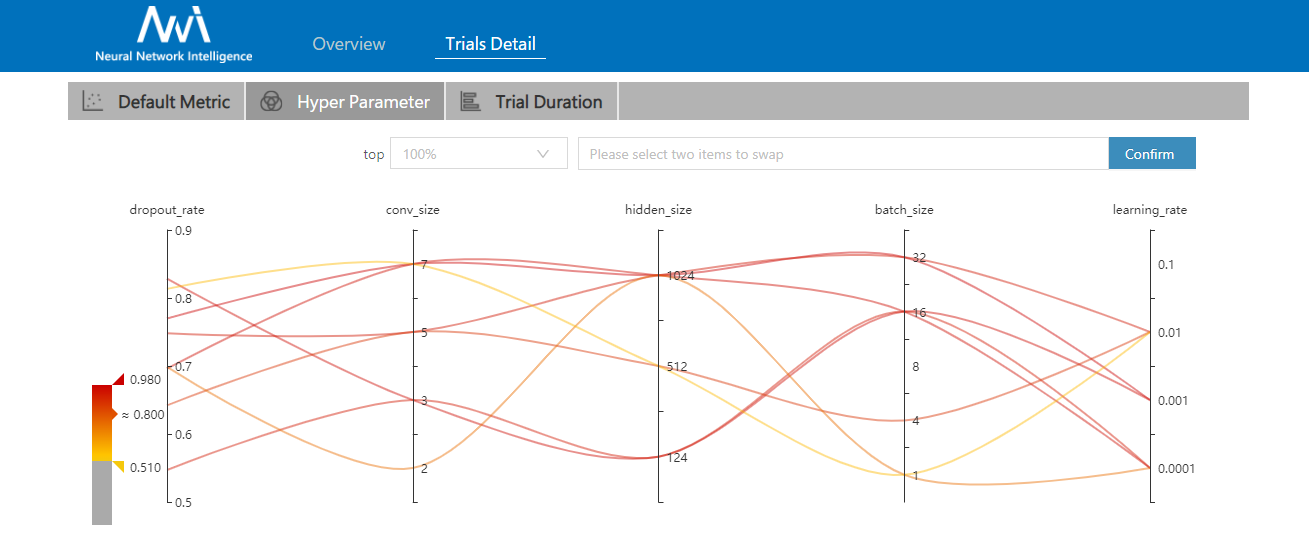

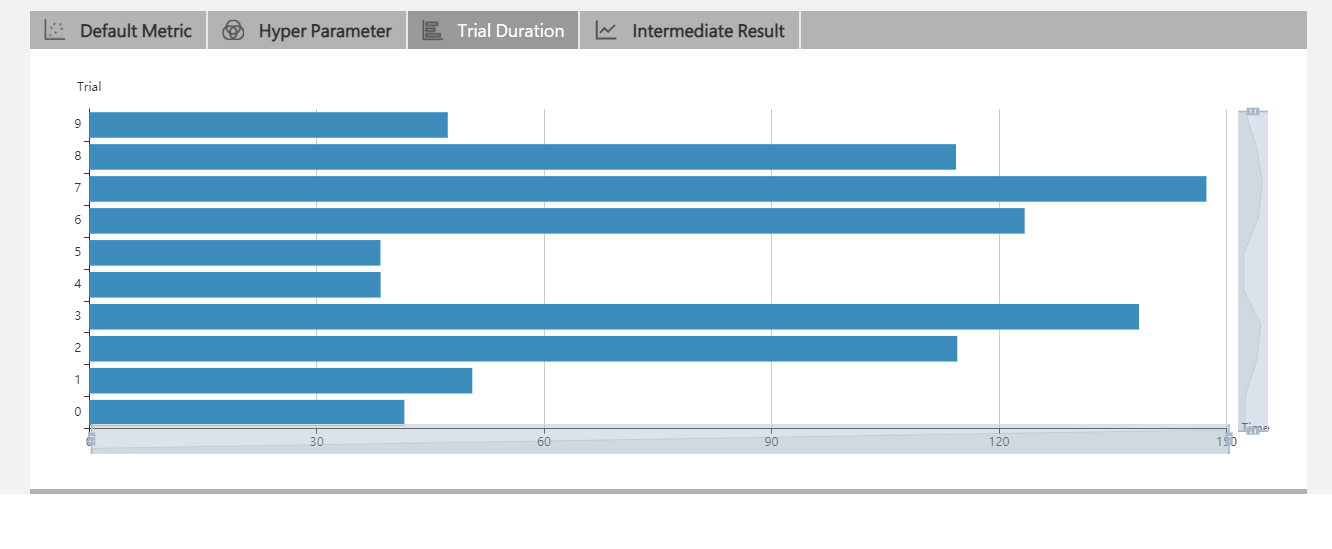

85.1 KB

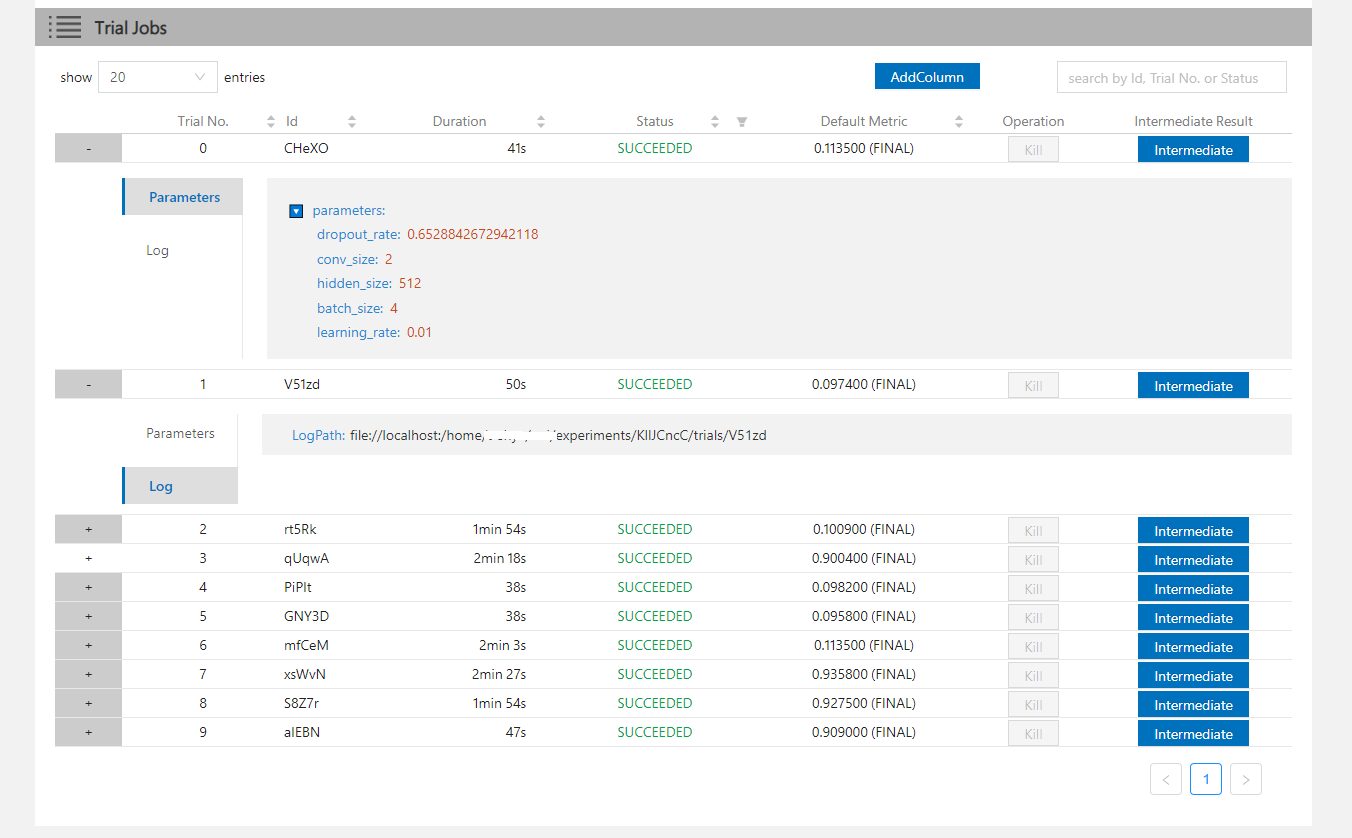

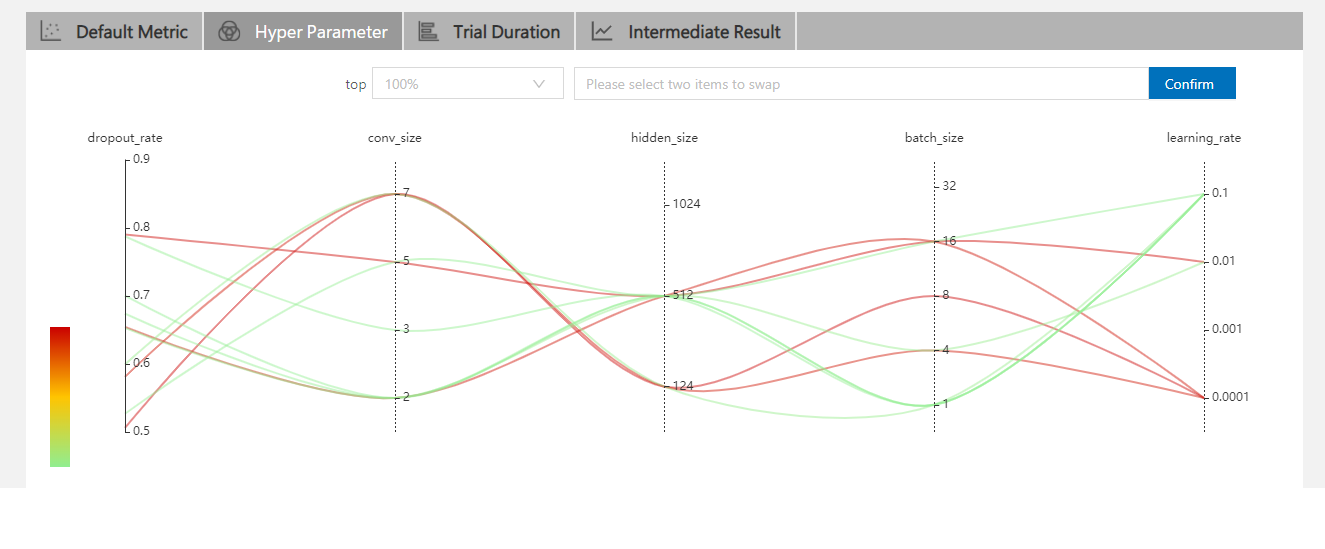

84.7 KB

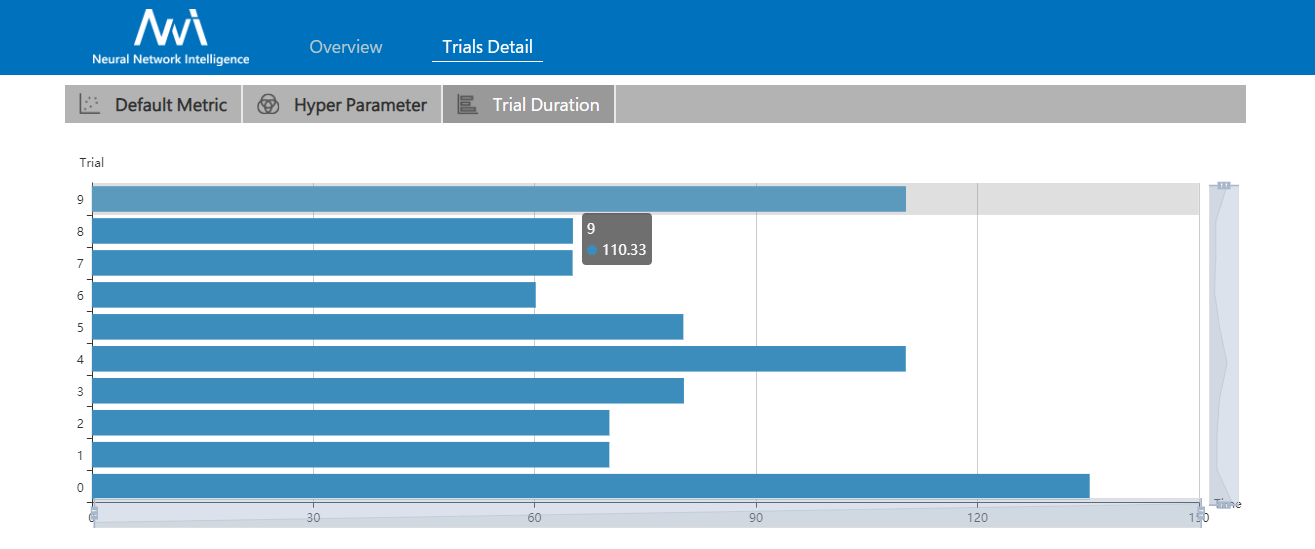

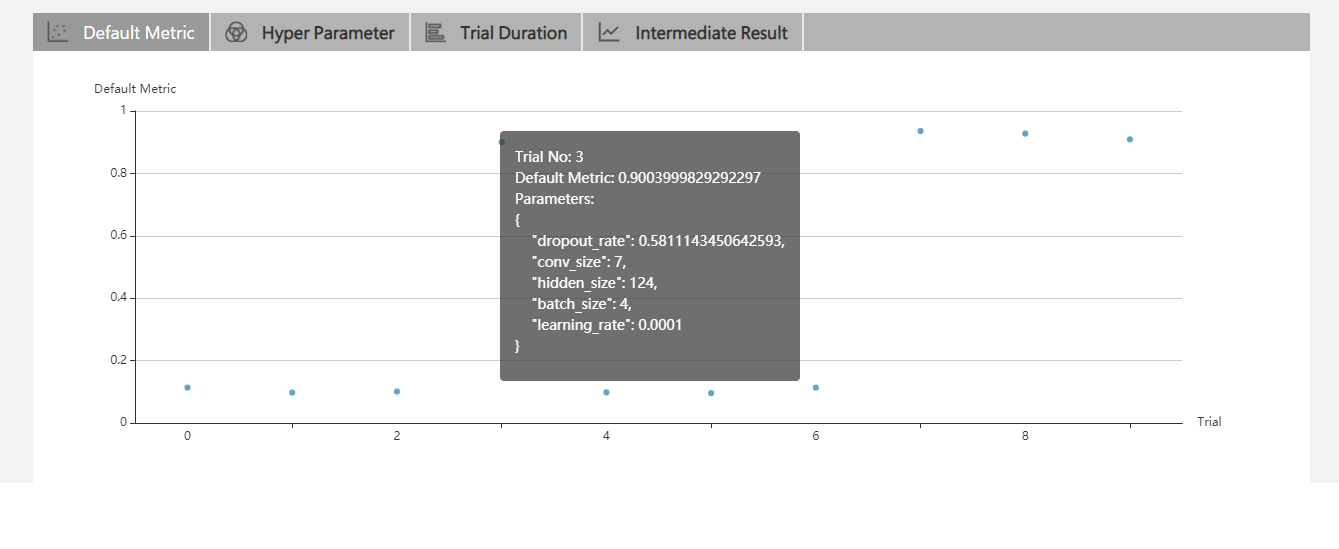

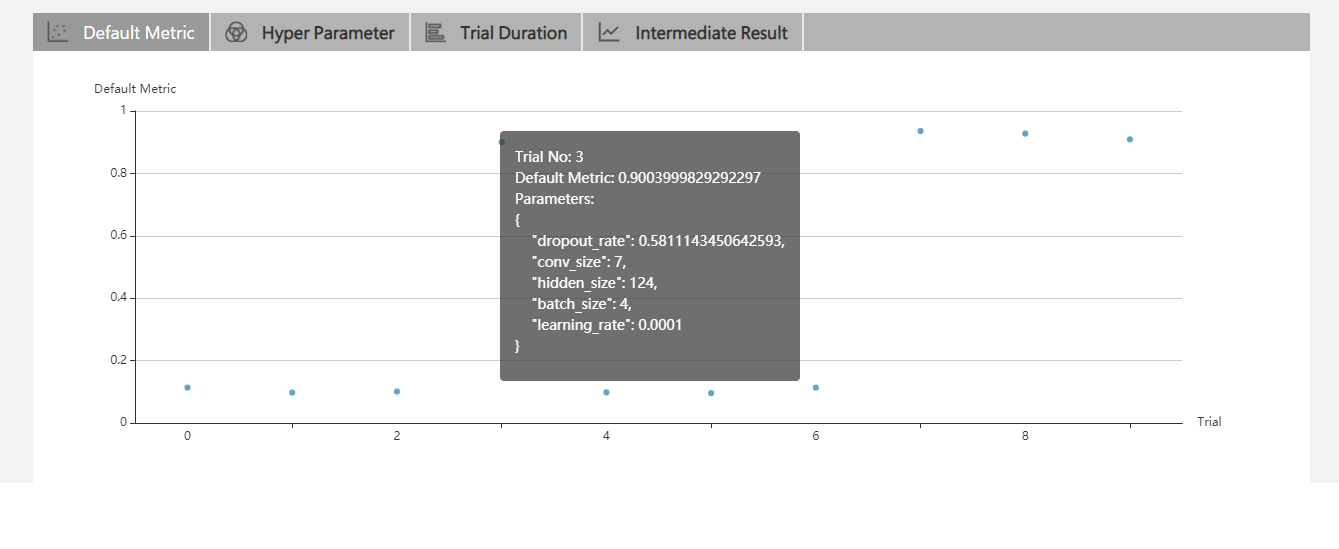

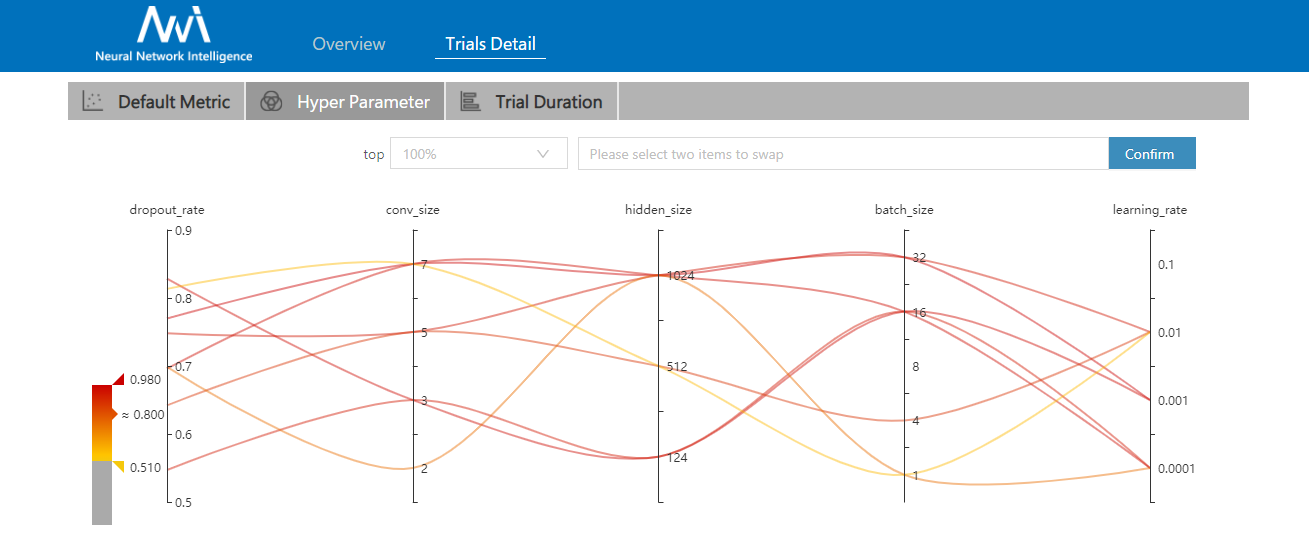

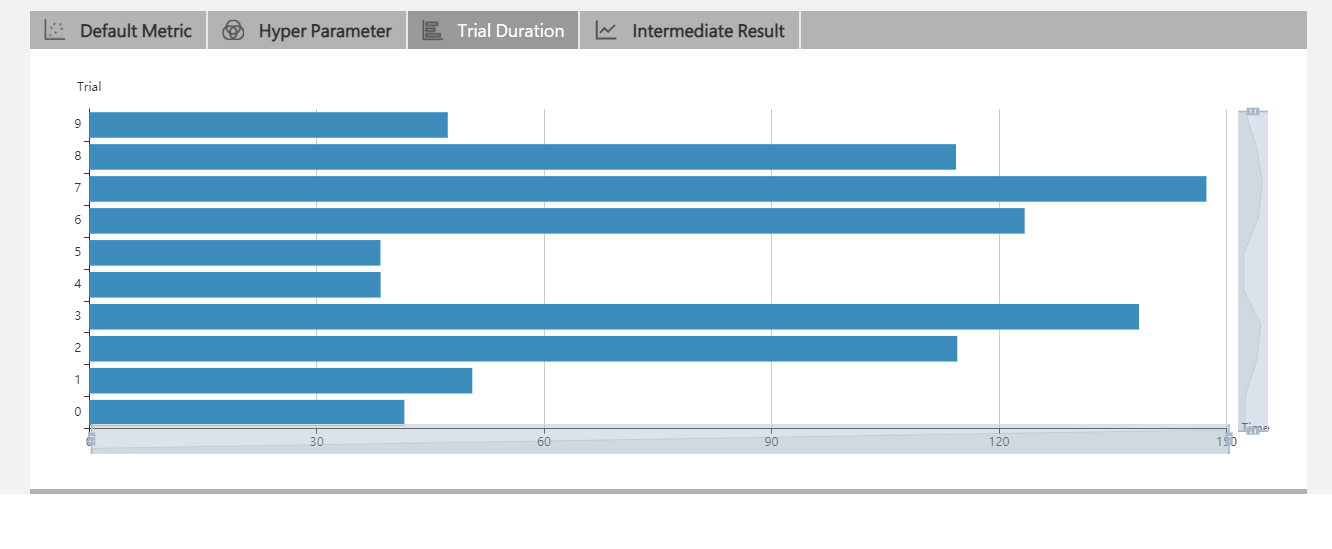

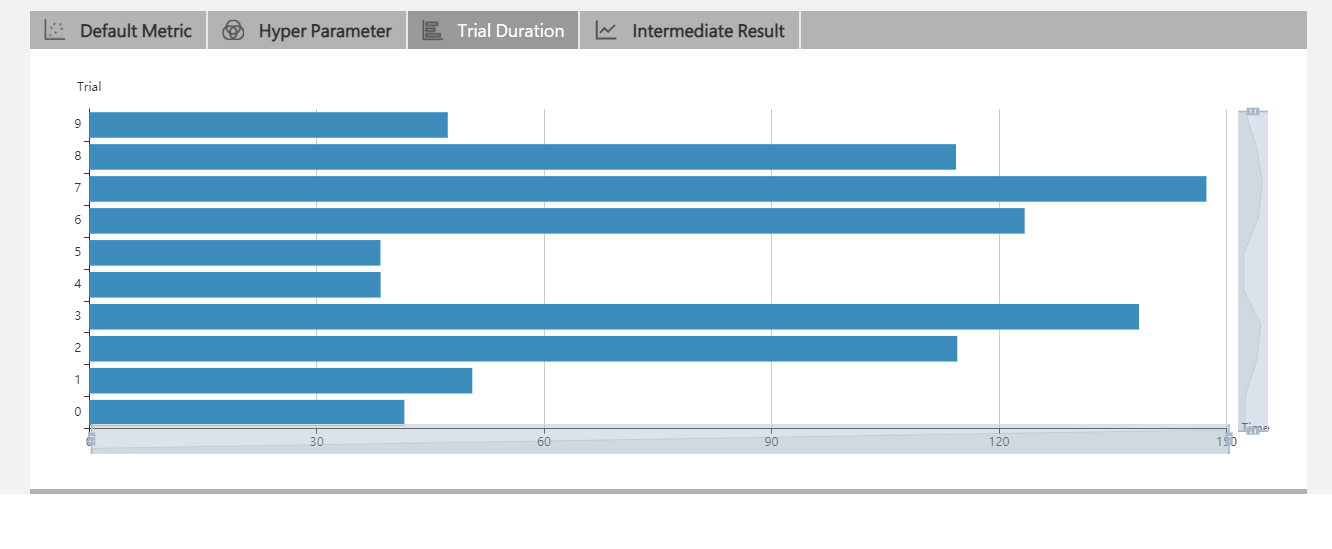

32.2 KB

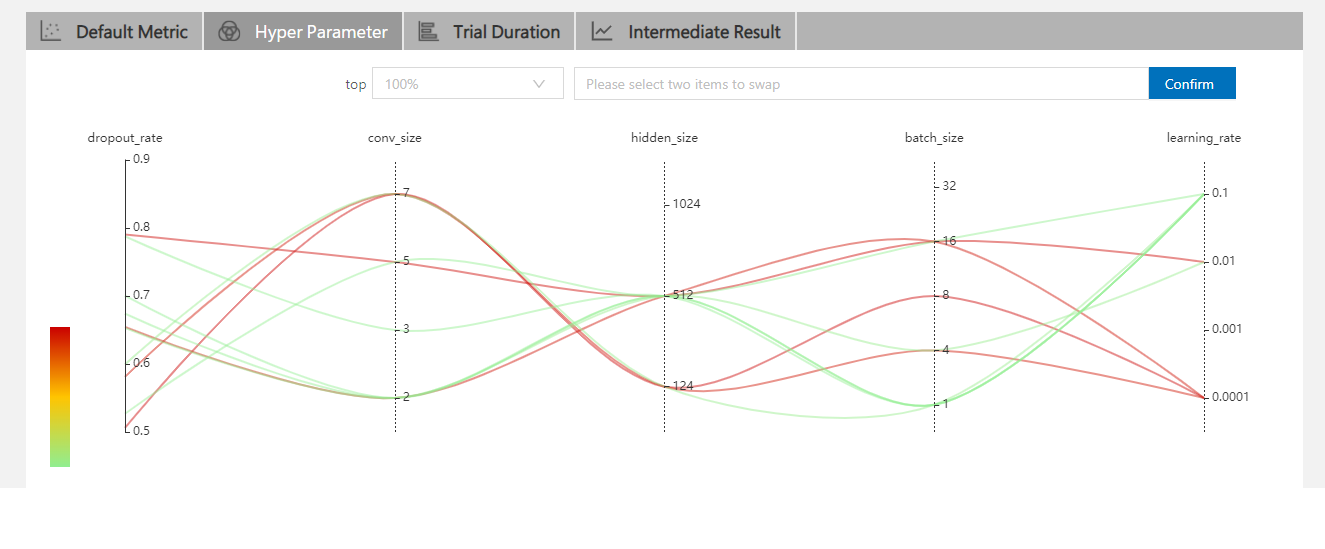

25.9 KB

19.2 KB

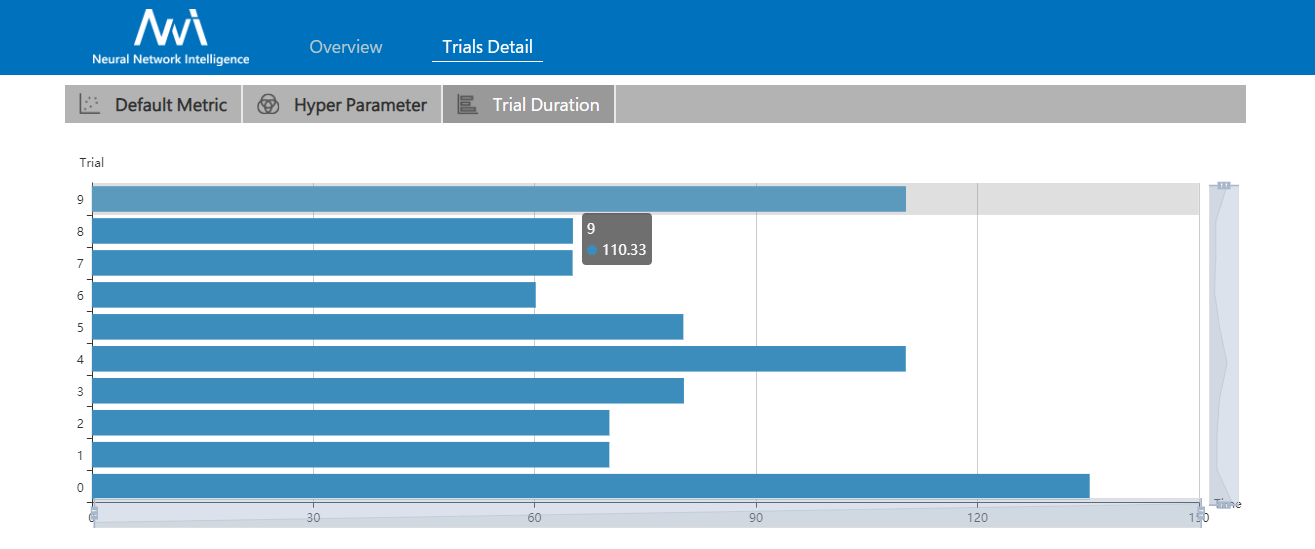

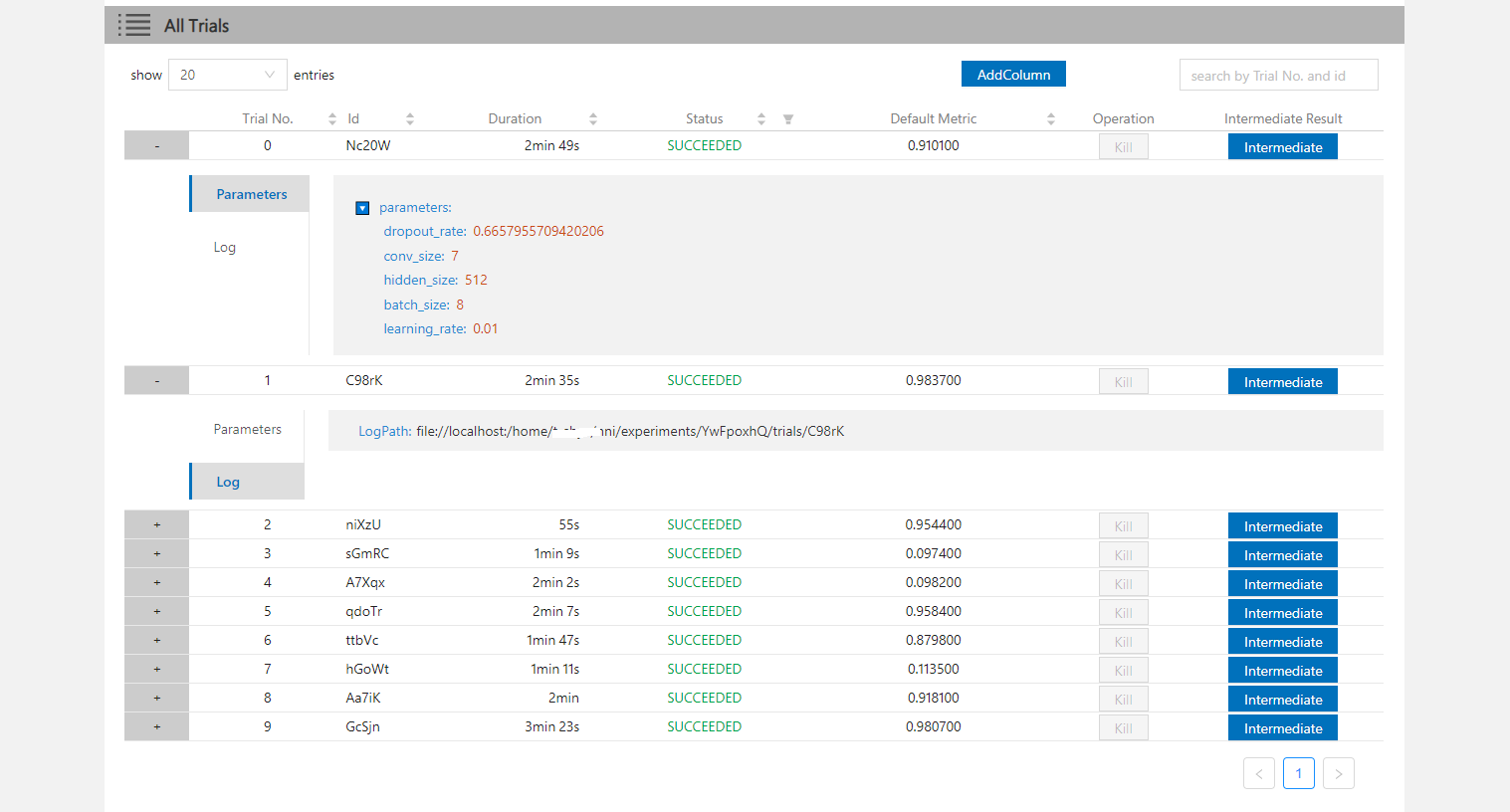

55 KB

53 KB