Merge pull request #611 from Microsoft/v0.5

Merge v0.5 back to master

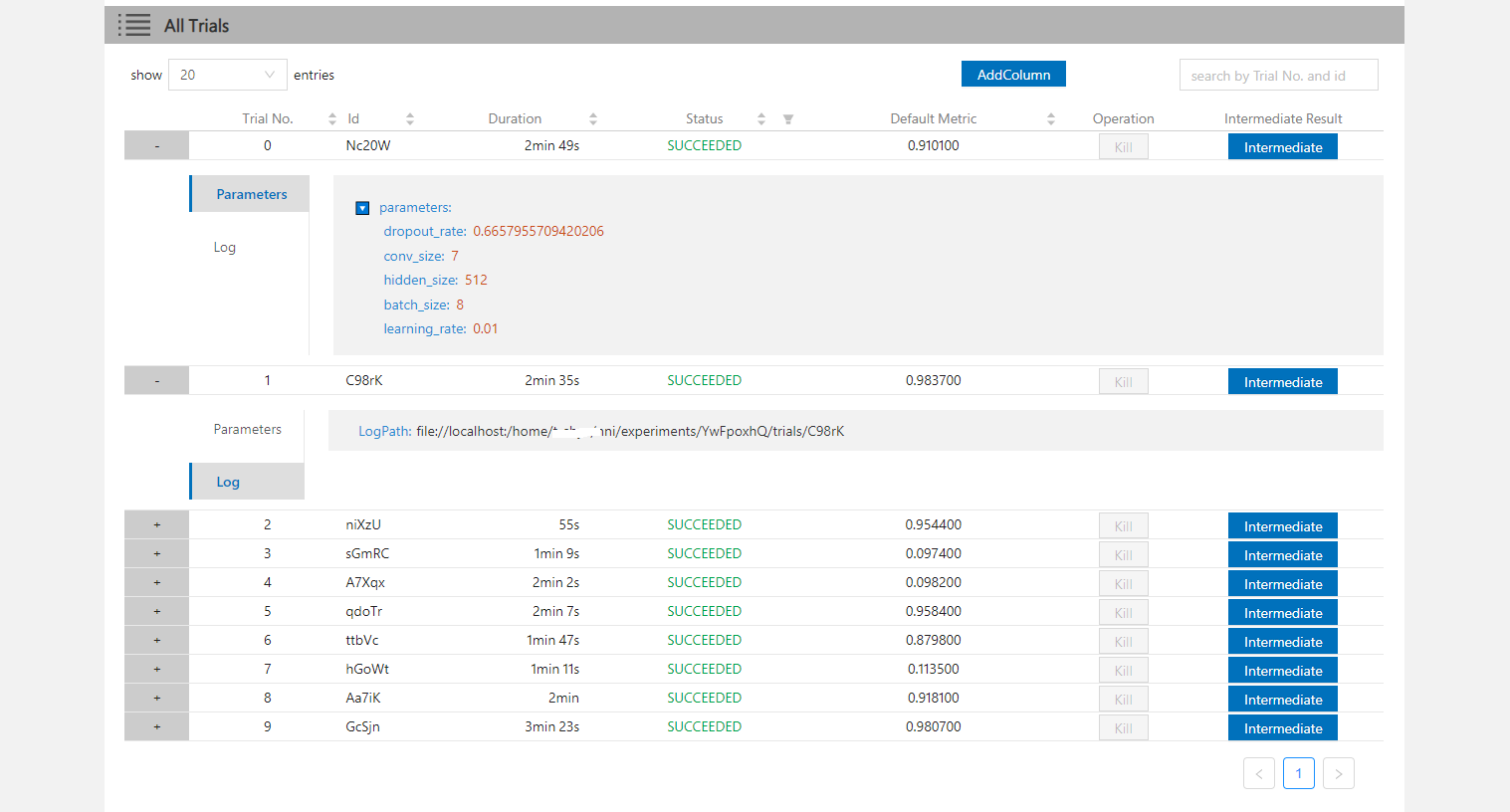

55 KB

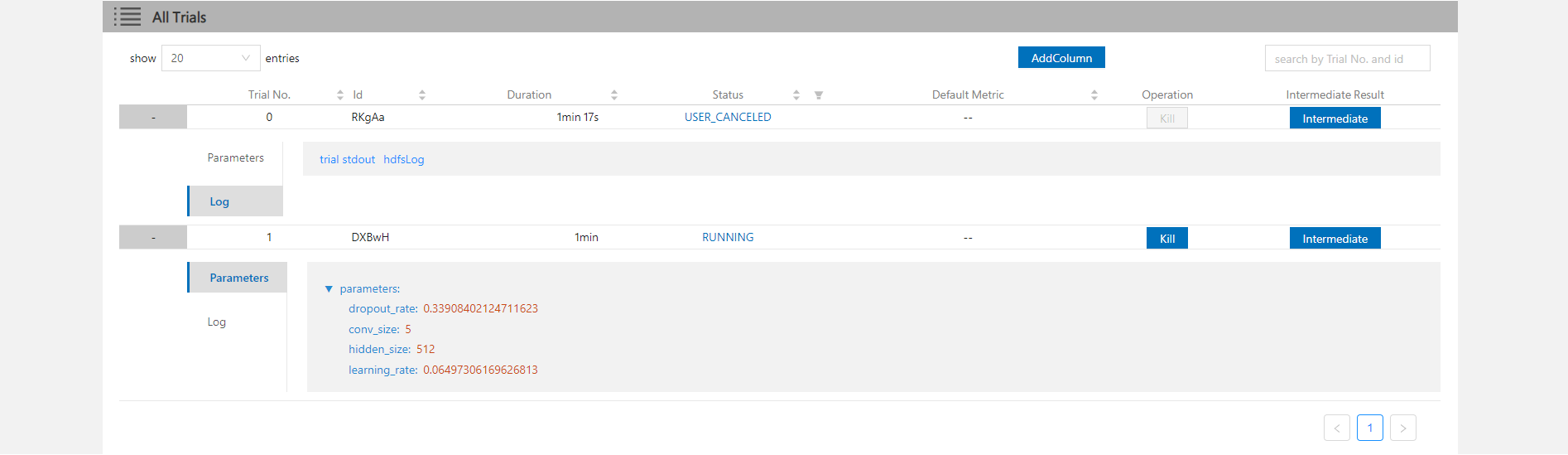

28.9 KB

docs/img/webui-img/over1.png

0 → 100644

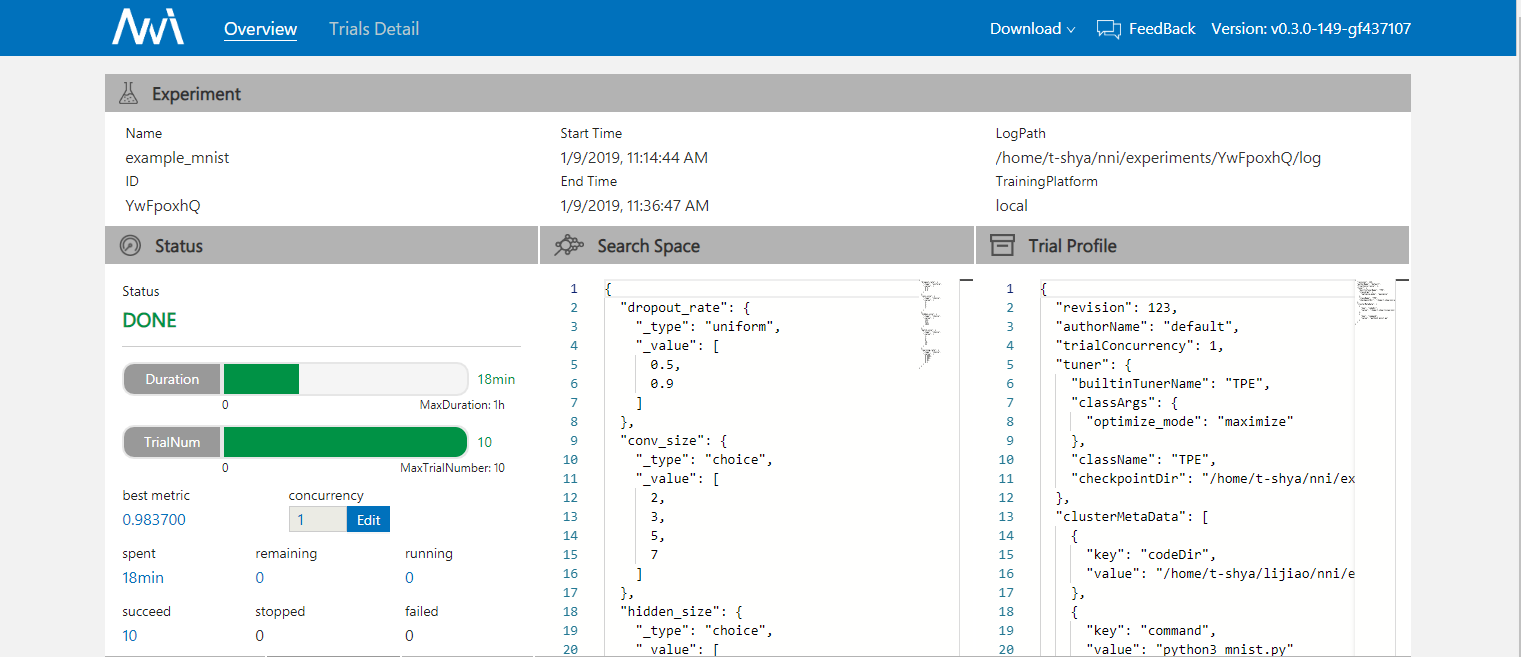

67.1 KB

docs/img/webui-img/over2.png

0 → 100644

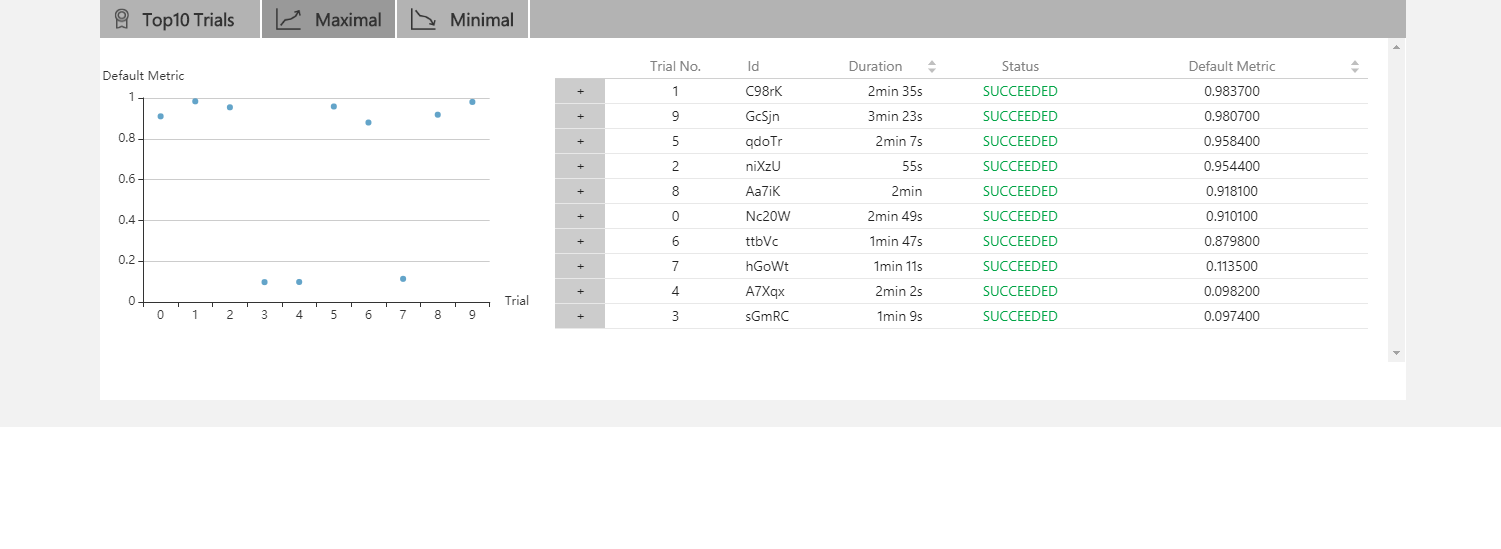

35.3 KB
