Merge branch 'master' of github.com:Microsoft/nni into dev-retiarii

CONTRIBUTING.md

0 → 100644

docs/en_US/NAS/Cream.md

0 → 100644

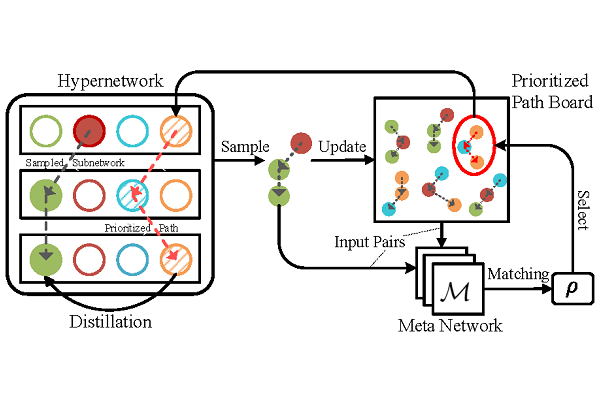

docs/img/cream.png

0 → 100644

63.6 KB

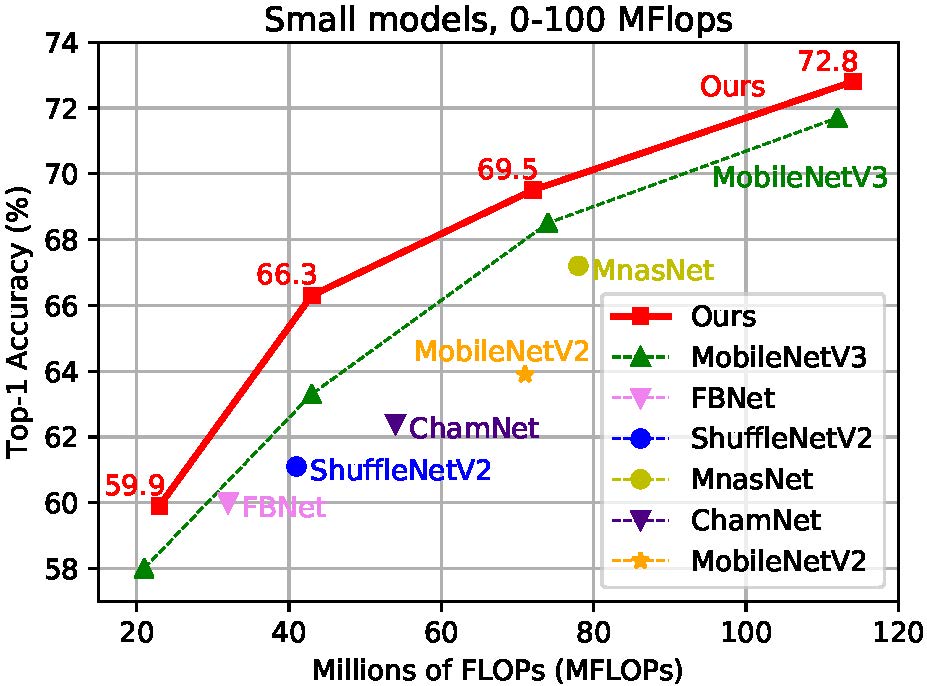

docs/img/cream_flops100.jpg

0 → 100644

103 KB