fix docs before r0.5.2 release (#783)

* fix sklearn dependency
* add doc badge to readme
* fix infinite toctree level

87.9 KB

docs/img/expression_xi.gif

0 → 100644

804 Bytes

docs/img/f_comb.gif

0 → 100644

1.17 KB

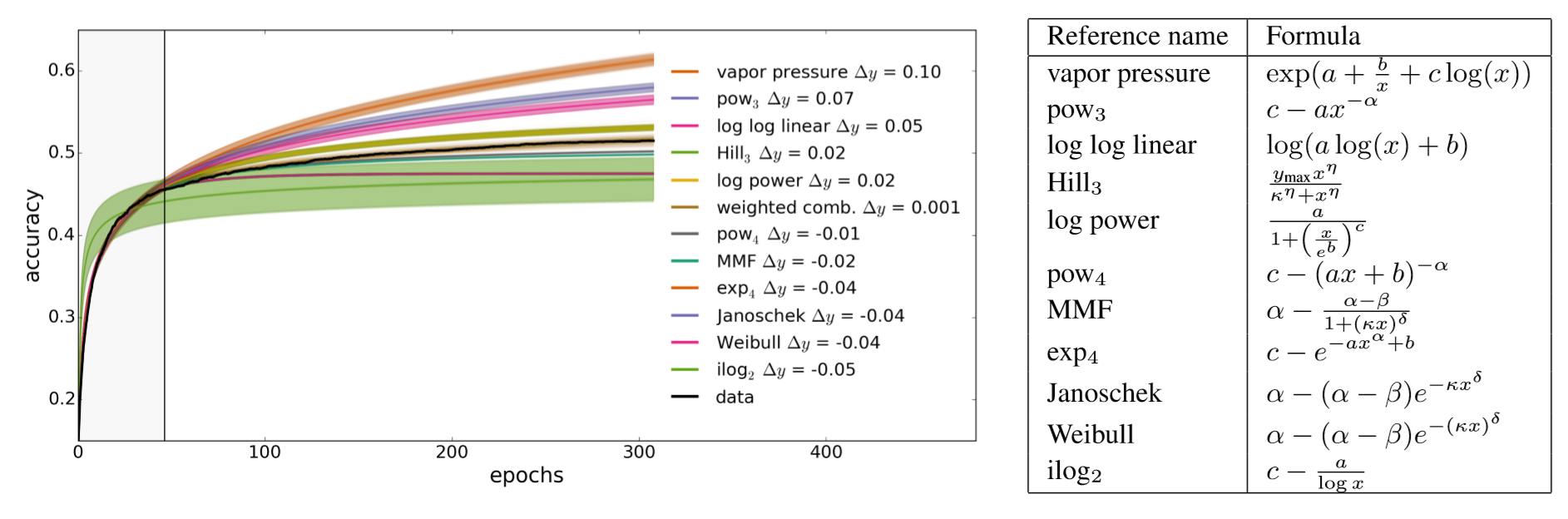

docs/img/learning_curve.PNG

0 → 100644

286 KB