"doc/vscode:/vscode.git/clone" did not exist on "43ccde7d882d145d0549b280f4aa5356ecd9082d"

Merge pull request #217 from microsoft/master

merge master

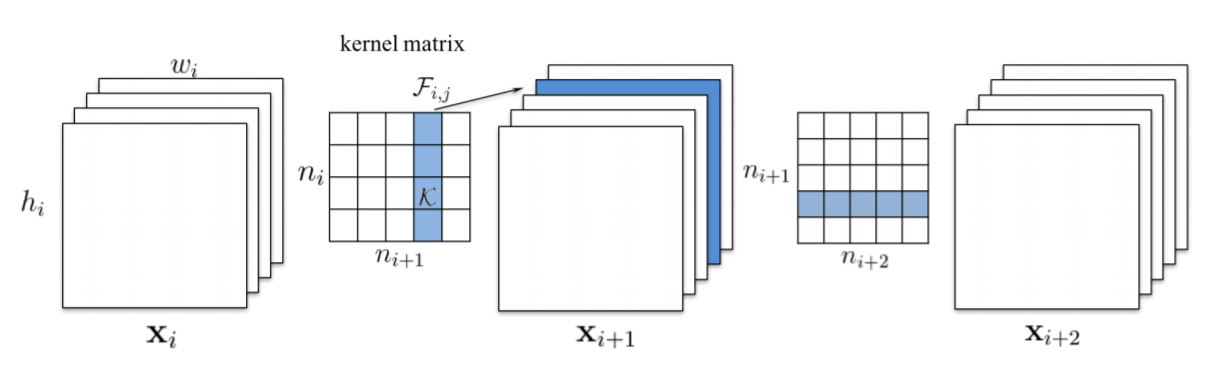

docs/img/l1filter_pruner.PNG

0 → 100644

66.3 KB

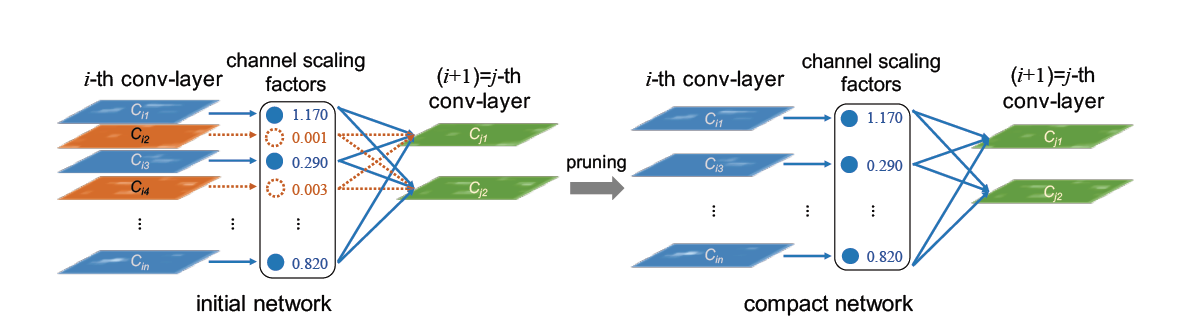

docs/img/slim_pruner.PNG

0 → 100644

61.6 KB