Merge pull request #3023 from microsoft/v1.9

[do not squash!] merge v1.9 back to master

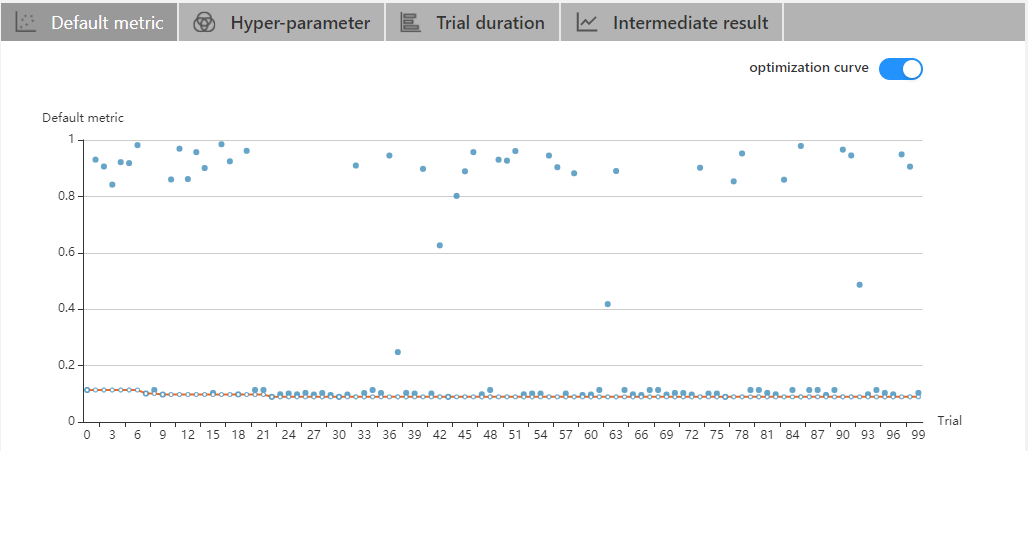

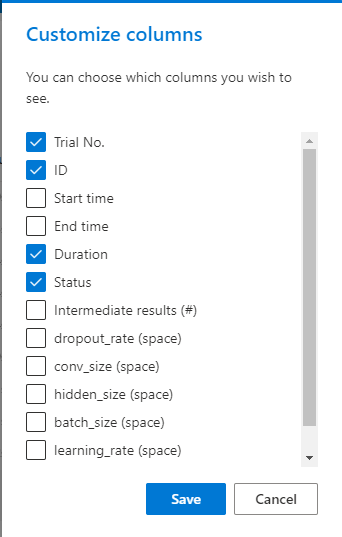

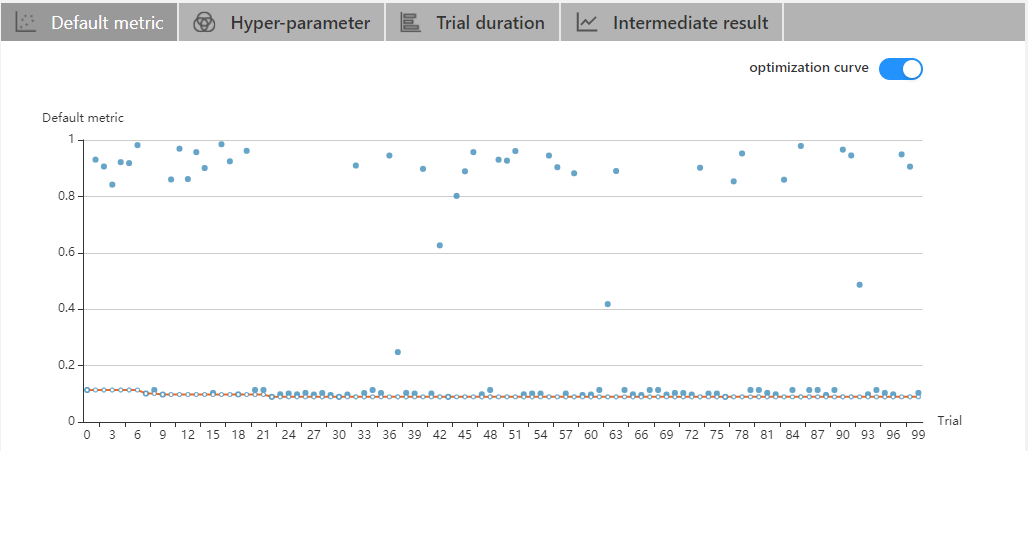

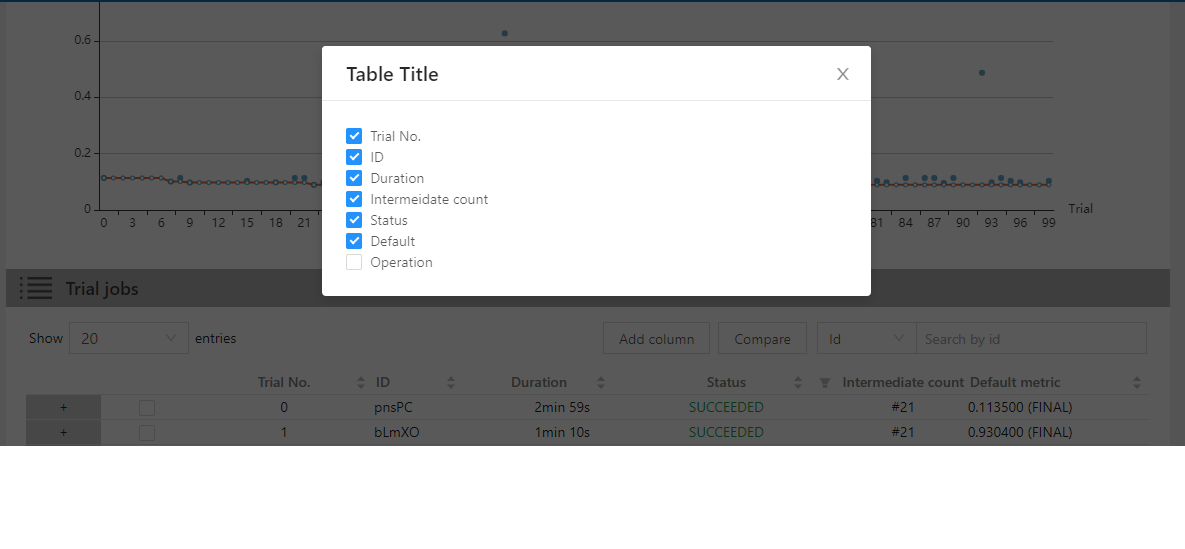

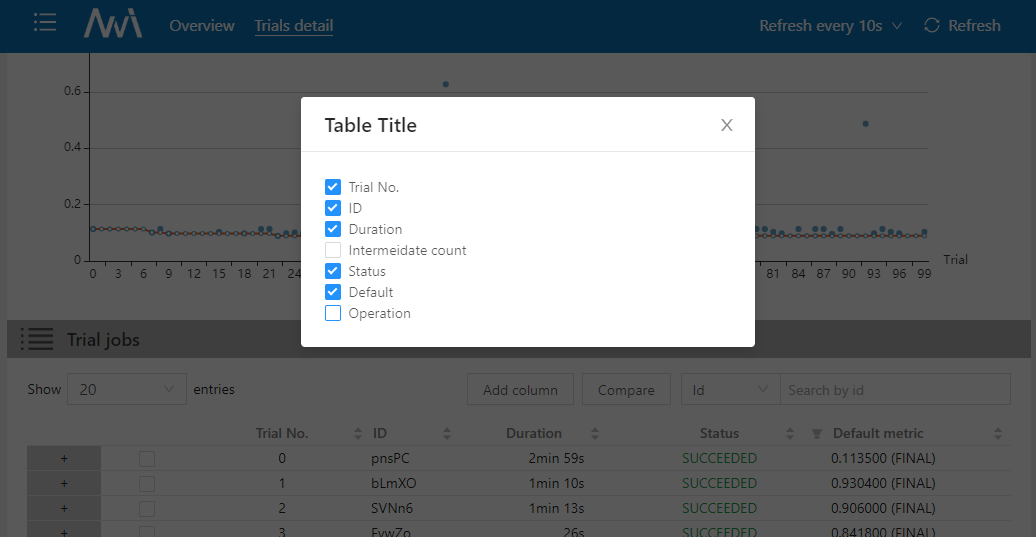

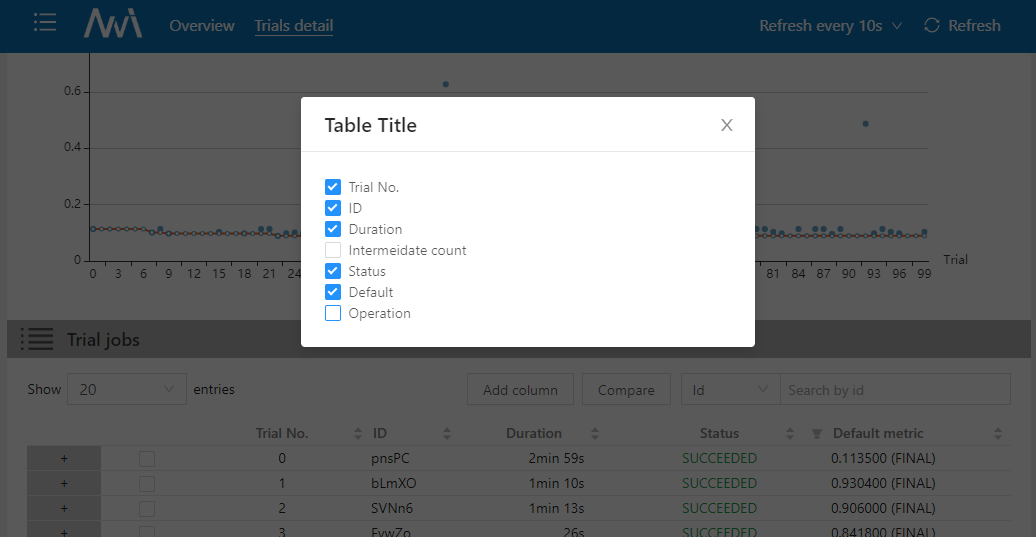

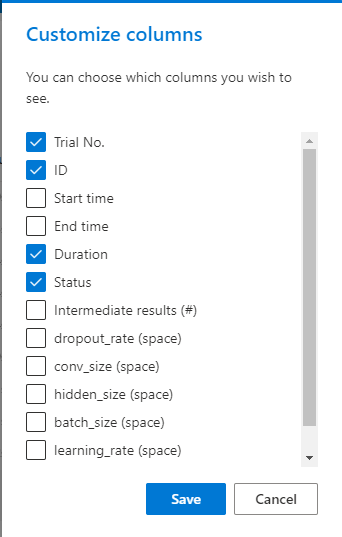

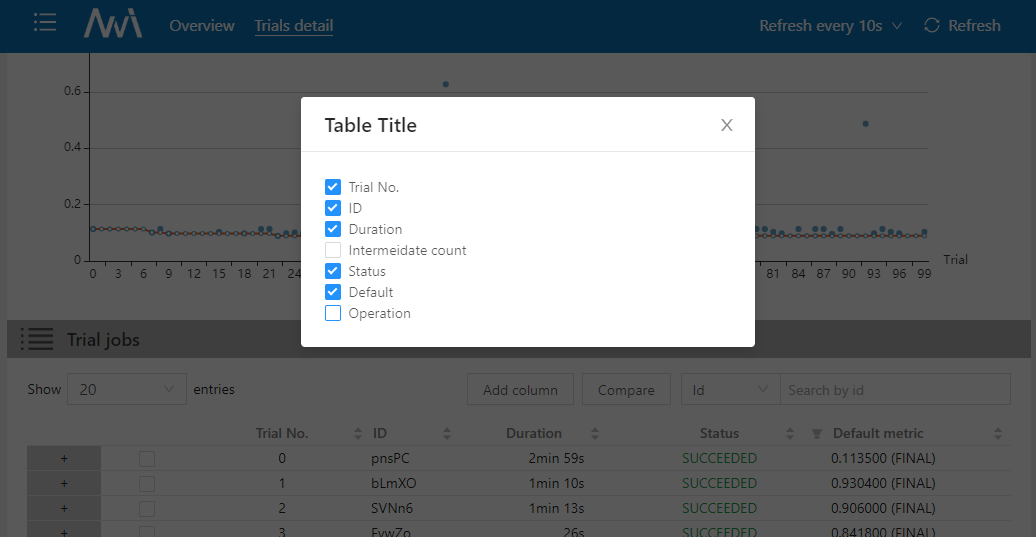
