Merge pull request #235 from microsoft/master

merge master

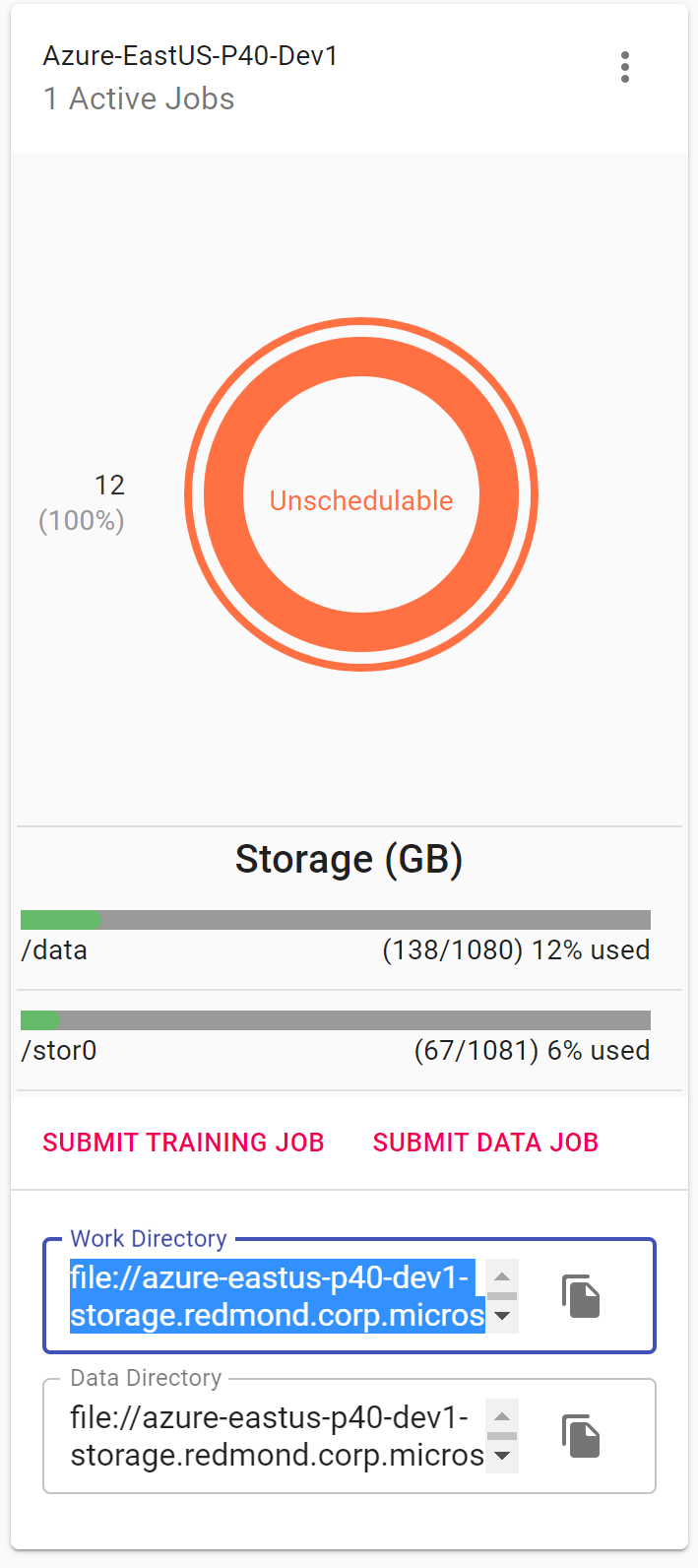

docs/img/dlts-step1.png

0 → 100644

83.7 KB

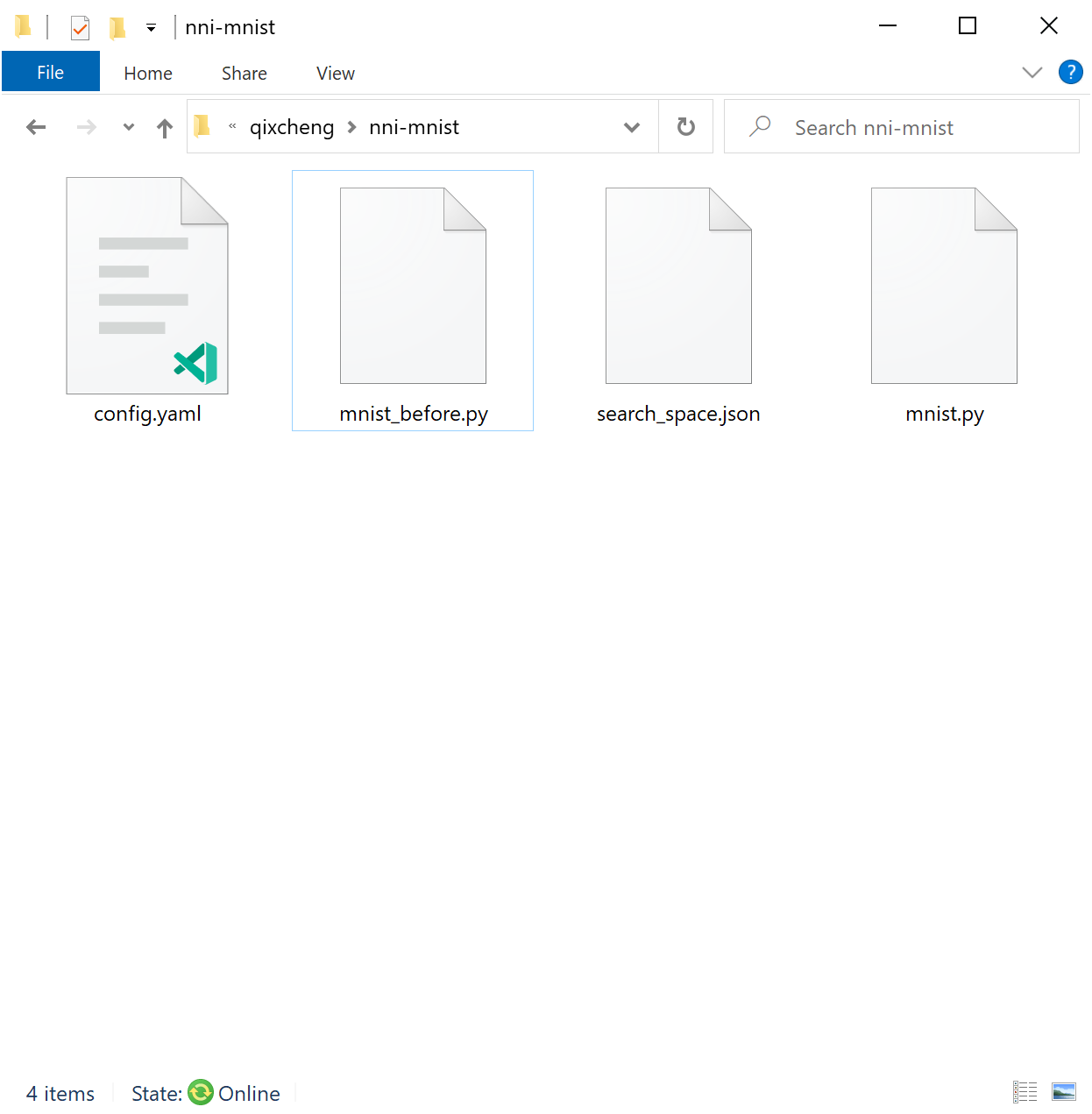

docs/img/dlts-step3.png

0 → 100644

56 KB

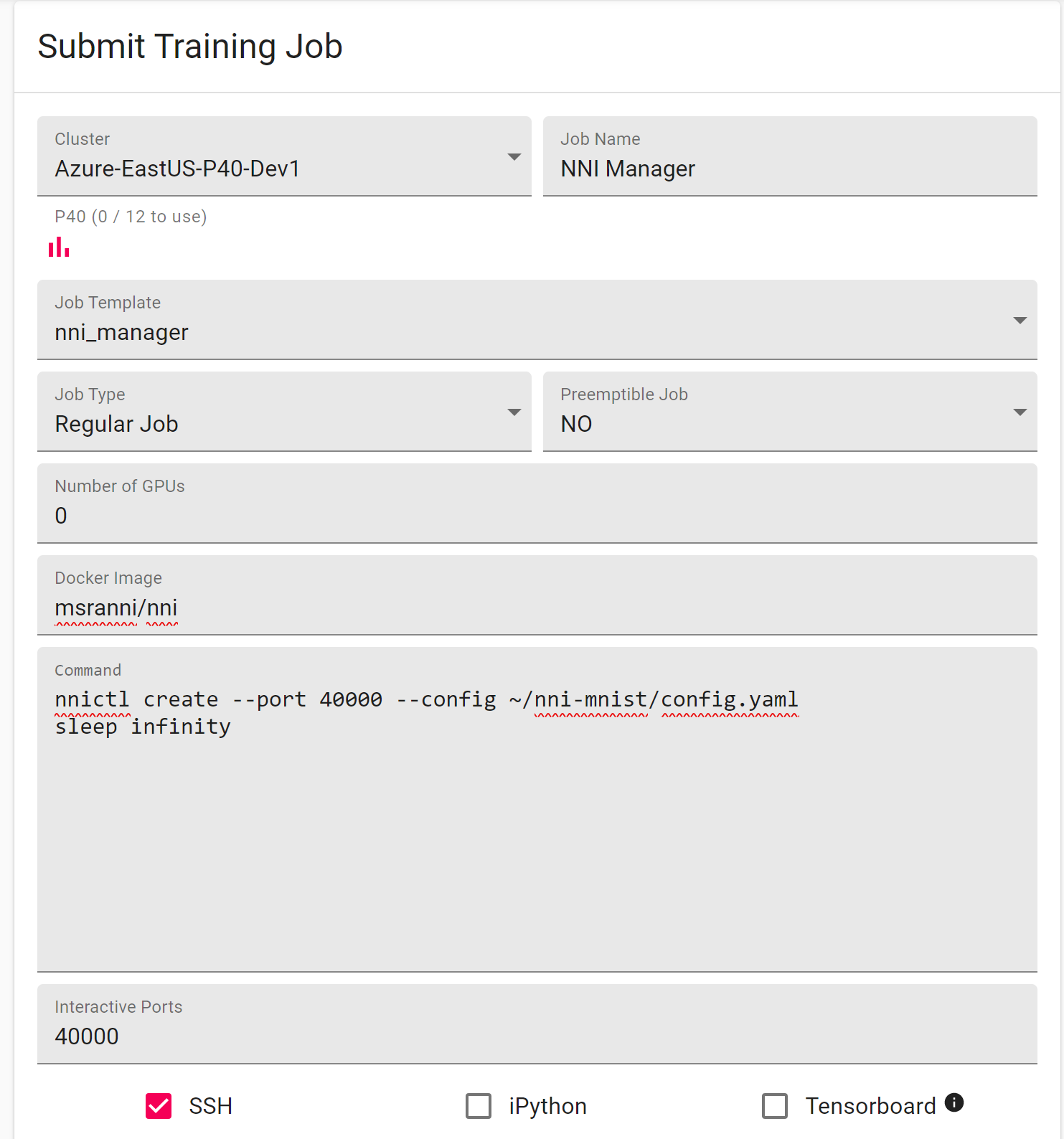

docs/img/dlts-step4.png

0 → 100644

73.9 KB

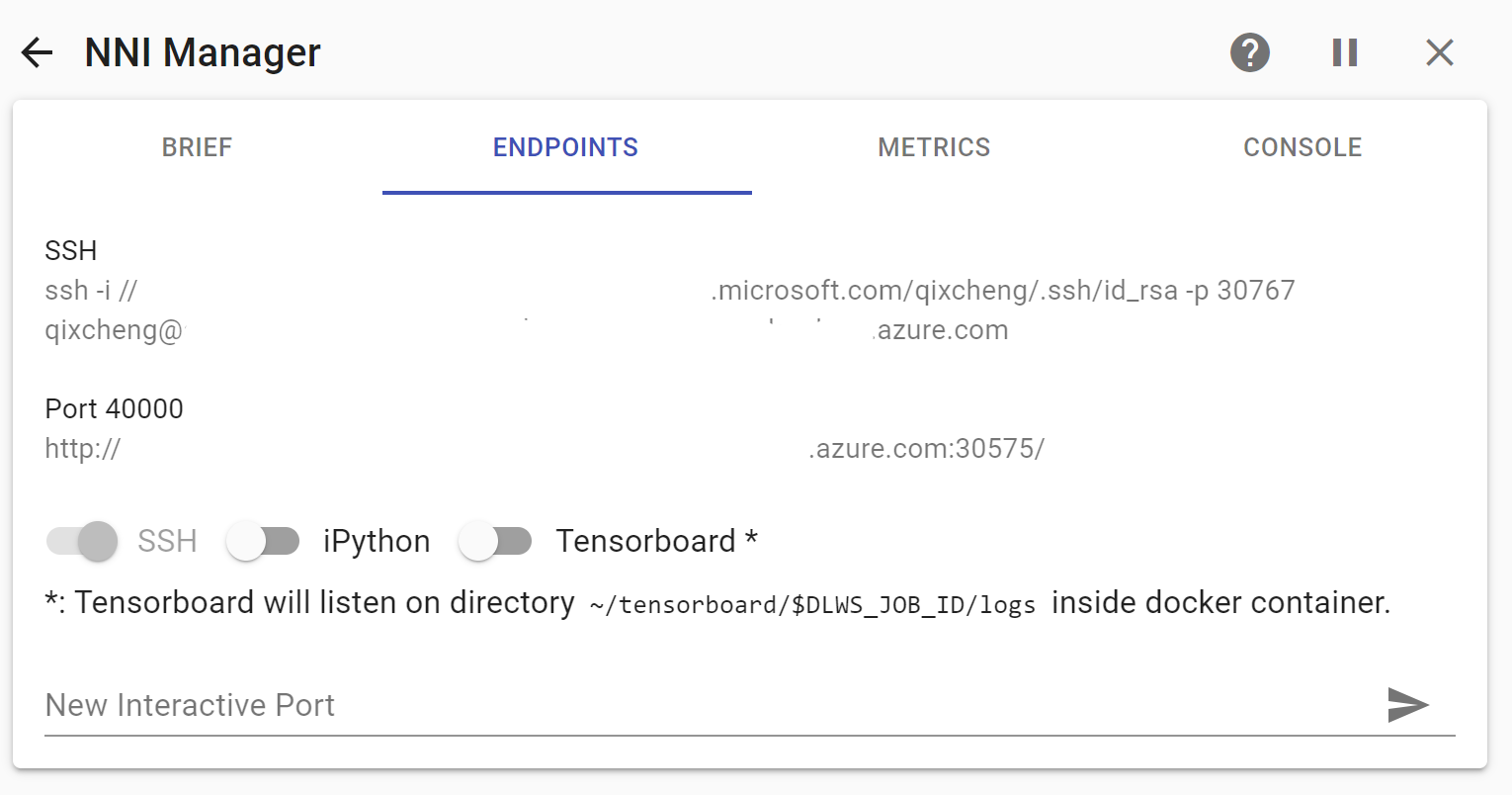

docs/img/dlts-step5.png

0 → 100644

77.5 KB